People with weak critical thinking miss these 11 dangerous flaws in their beliefs

These mental mistakes are ruining your judgment without you even knowing.

Think your beliefs are rock-solid? Think again. You might be shocked to find that even your most confident opinions could be based on weak critical thinking. It’s easy to fall into mental traps that feel logical but actually cloud your judgment.

If you’re ready to spot the red flags, let’s dive into the hidden flaws shaping your views.

1. You always agree with people who think just like you.

If everyone in your circle shares the same opinions, it’s easy to assume they must be right. But surrounding yourself with like-minded voices can create an echo chamber. It feels comfortable, sure, but it stops you from questioning your beliefs. True critical thinking involves hearing and considering viewpoints that might challenge or even shake up what you believe.

2. You shut down the moment someone disagrees with you.

Ever notice how defensive you get when someone sees things differently? If you instantly dismiss opposing views, you might be missing an opportunity to sharpen your thinking. Defensiveness is often a red flag that you’re not as certain as you thought. Instead of brushing others off, try digging deeper and asking yourself why you disagree.

3. You rely on gut feelings more than facts.

There’s nothing wrong with intuition—until it takes over reason. If you catch yourself saying, “I just know I’m right,” that’s a sign you’re skipping the critical step of fact-checking. Intuition alone can steer you in the wrong direction. Balancing your instincts with evidence makes your opinions stronger and more reliable.

4. You think everyone who disagrees is misinformed or “just doesn’t get it.”

Believing that only you have the full picture is a dangerous mindset. When you label others as uninformed, you close yourself off to valuable perspectives. Critical thinking means acknowledging that even people with different beliefs might have a piece of the truth. If your gut reaction is to discredit them, you’re ignoring key insights.

5. You base your opinions on headlines or social media soundbites.

Quick reads and catchy headlines are designed to grab attention, but they rarely tell the whole story. If you’re basing your beliefs on what you scroll past, you’re likely missing out on context, nuance, and depth. True understanding comes from digging a bit deeper and checking the sources—no one’s view should rest on a headline alone.

6. You feel uncomfortable when people ask you to explain your views.

Ever felt like you’re stumbling over your words when someone challenges you? That’s often a sign your ideas need more solid grounding. If you can’t clearly articulate why you believe something, your thinking might be weaker than you realize. Try practicing how you’d explain your beliefs to someone who disagrees—it can reveal where you need more clarity.

7. You think your life experience is proof enough that you’re right.

It’s easy to fall into the trap of assuming, “If it’s true for me, it’s true for everyone.” But your experience is just one slice of reality. Assuming it applies universally can lead to misguided beliefs. Broaden your perspective by learning from others’ stories—they may not match yours, but they’re equally valid and worth considering.

8. You avoid changing your mind—even when faced with solid evidence.

If changing your mind feels like a defeat, you’re likely holding onto beliefs for the wrong reasons. Real critical thinkers aren’t afraid to adapt when new information arises. Staying open to new insights is what makes your beliefs more resilient. Refusing to budge, even with good reason, is often a sign of weak critical thinking.

9. You believe in “either/or” thinking without considering the gray areas.

Life is rarely black-and-white, but it’s easy to think that way. When you believe that there are only two sides to an issue, you’re limiting your understanding. This type of thinking blocks out nuance, which is where most truth resides. Embracing the gray areas can strengthen your views and lead to more balanced opinions.

10. You never check if your sources are credible—or if they’re biased.

Relying on sources without checking their reliability can be a huge red flag. If your beliefs hinge on information from unverified or biased sources, they’re on shaky ground. Spend a bit of time evaluating the origins of what you read. It’s worth knowing if there’s a hidden agenda shaping what you’re being told.

11. You think anyone who questions your beliefs is attacking you personally.

When you take every disagreement as a personal affront, it’s harder to view things objectively. Being challenged doesn’t mean someone is out to get you; often, it’s a chance to sharpen your thoughts. If criticism makes you feel attacked, try stepping back to see if there’s any truth in it—it might just make your beliefs stronger.

Critical Thinking and Decision-Making: Logical Fallacies

Lesson 7: Logical Fallacies

Logical fallacies

If you think about it, vegetables are bad for you. I mean, after all, the dinosaurs ate plants, and look at what happened to them...

Let's pause for a moment: That argument was pretty ridiculous. And that's because it contained a logical fallacy.

A logical fallacy is any kind of error in reasoning that renders an argument invalid. They can involve distorting or manipulating facts, drawing false conclusions, or distracting you from the issue at hand. In theory, it seems like they'd be pretty easy to spot, but this isn't always the case.

Sometimes logical fallacies are intentionally used to try and win a debate. In these cases, they're often presented by the speaker with a certain level of confidence. And in doing so, they're more persuasive: If they sound like they know what they're talking about, we're more likely to believe them, even if their stance doesn't make complete logical sense.

False cause

One common logical fallacy is the false cause. This is when someone incorrectly identifies the cause of something. In my argument above, I stated that dinosaurs became extinct because they ate vegetables. While these two things did happen, a diet of vegetables was not the cause of their extinction.

Maybe you've heard false cause more commonly represented by the phrase "correlation does not equal causation", meaning that just because two things occurred around the same time, it doesn't necessarily mean that one caused the other.

Straw man

A straw man is when someone takes an argument and misrepresents it so that it's easier to attack. For example, let's say Callie is advocating that sporks should be the new standard for silverware because they're more efficient. Madeline responds that she's shocked Callie would want to outlaw spoons and forks, and put millions out of work at the fork and spoon factories.

A straw man is frequently used in politics in an effort to discredit another politician's views on a particular issue.

Begging the question

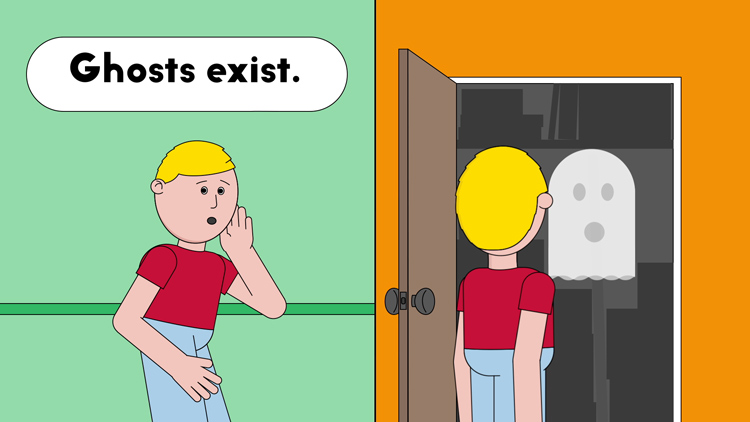

Begging the question is a type of circular argument where someone includes the conclusion as a part of their reasoning. For example, George says, “Ghosts exist because I saw a ghost in my closet!”

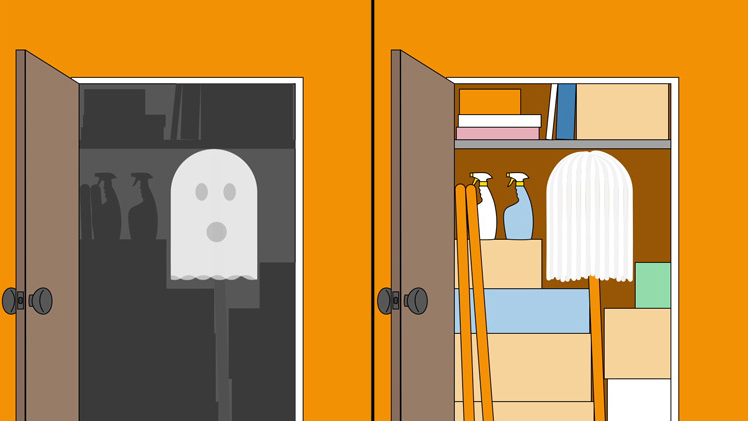

George concluded that “ghosts exist”. His premise also assumed that ghosts exist. Rather than assuming that ghosts exist from the outset, George should have used evidence and reasoning to try and prove that they exist.

Since George assumed that ghosts exist, he was less likely to see other explanations for what he saw. Maybe the ghost was nothing more than a mop!

False dilemma

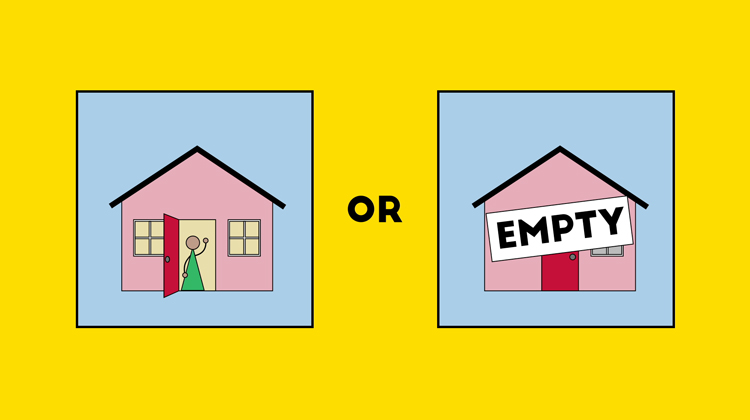

The false dilemma (or false dichotomy) is a logical fallacy where a situation is presented as being an either/or option when, in reality, there are more possible options available than just the chosen two. Here's an example: Rebecca rings the doorbell but Ethan doesn't answer. She then thinks, "Oh, Ethan must not be home."

Rebecca posits that either Ethan answers the door or he isn't home. In reality, he could be sleeping, doing some work in the backyard, or taking a shower.

Most logical fallacies can be spotted by thinking critically. Make sure to ask questions: Is logic at work here or is it simply rhetoric? Does their "proof" actually lead to the conclusion they're proposing? By applying critical thinking, you'll be able to detect logical fallacies in the world around you and prevent yourself from using them as well.

12 Cognitive Biases and How to Overcome Them with Critical Thinking: A Guide to Better Decision Making

In everyday life, cognitive biases can significantly impact our decision-making processes and overall perception of reality. These biases are mental shortcuts that can lead to systematic errors in thinking, causing us to draw incorrect conclusions or make irrational decisions.

Understanding and overcoming these biases is crucial to developing more logical and effective thinking patterns. This article explores 12 common cognitive biases and offers practical strategies to counteract them using critical thinking techniques. By recognizing and addressing these biases, individuals can enhance their ability to evaluate information objectively and make more informed decisions.

1) Anchoring Bias

Anchoring bias occurs when people rely too heavily on the first piece of information they receive, even if it is irrelevant.

For instance, if you see a shirt on sale for $50 after initially seeing it priced at $150, you might think it’s a great deal, even if $50 is still more than you would typically spend.

This bias can affect various decisions, from shopping to negotiations. People often make judgments and decisions anchored to the initial information.

To combat anchoring bias, it’s important to recognize its presence. Start by questioning the initial information and seek out multiple perspectives before making decisions.

Anchoring bias can also be reduced by delaying decisions until more information is gathered. Taking time to analyze different factors can help lessen the influence of the initial anchor.

Regularly practicing critical thinking skills can also aid in minimizing anchoring bias. Evaluate information critically and consider alternative viewpoints.

In business settings, team collaborations can help counteract anchoring bias. Diverse opinions can reduce reliance on a single piece of data.

For more tips on detecting and overcoming anchoring bias, check out this article by BetterUp.

2) Confirmation Bias

Confirmation bias is the tendency to favor information that aligns with existing beliefs. For example, if someone thinks left-handed people are more creative, they’ll likely notice and remember examples that support this idea. Other evidence may be overlooked or dismissed.

This bias can lead to poor decision-making because it limits exposure to differing perspectives. It can reinforce incorrect views and hinder learning.

To combat confirmation bias, individuals should actively seek information that challenges their beliefs. This can involve reading diverse sources and engaging in discussions with people who hold different opinions.

Another strategy is to question the reliability of preferred information. By evaluating evidence critically, it’s possible to form a more balanced view. Encouraging open-mindedness is crucial in minimizing the influence of confirmation bias.

Understanding that everyone has biases can also help. Acknowledging them is the first step towards addressing and reducing their impact. Being conscious of this bias allows for more objective thinking and better decision-making.

For more information, see resources on confirmation bias and its effects or on ways to recognize and overcome it.

3) Hindsight Bias

Hindsight Bias is the tendency to believe that an event was predictable after it has happened. People often claim they “knew it all along” once the outcome is clear. This bias distorts their memory of their former opinions and judgments.

A common example is attending a baseball game and insisting, once the game is over, that you knew all along which team would win. High school and college students often experience this cognitive bias when, after seeing the exam, they believe they had predicted a test question correctly.

Hindsight Bias can be problematic. It can lead to overconfidence in one’s ability to predict events. This can affect decision-making, as people may not learn from past mistakes or may misjudge their forecasting abilities.

Overcoming Hindsight Bias involves acknowledging that this bias exists. Reflecting on past decisions and writing down predictions before outcomes can also help. This way, individuals can compare their initial thoughts with the actual results, promoting a more accurate self-assessment.
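The habit of writing predictions down before the outcome is known can be sketched in a few lines of Python. This is only an illustration: the `Prediction` record, the example claims, and the probabilities are hypothetical, and the Brier score (the mean squared gap between stated confidence and what actually happened) is a standard scoring rule brought in here for the comparison, not something the article prescribes.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Prediction:
    claim: str
    probability: float               # confidence recorded BEFORE the outcome is known
    happened: Optional[bool] = None  # filled in only after the event


def brier_score(log: List[Prediction]) -> float:
    """Mean squared error between stated confidence and outcome (0 is perfect)."""
    resolved = [p for p in log if p.happened is not None]
    if not resolved:
        raise ValueError("no resolved predictions yet")
    return sum((p.probability - float(p.happened)) ** 2 for p in resolved) / len(resolved)


# Hypothetical log: the numbers were written down in advance, so memory cannot revise them.
log = [
    Prediction("Home team wins", probability=0.6, happened=True),
    Prediction("I will pass the exam", probability=0.9, happened=False),
]
print(f"Brier score: {brier_score(log):.3f}")  # Brier score: 0.485
```

Because the probabilities are fixed before the result is in, a later "I knew it all along" can be checked against the record rather than against a reconstructed memory.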

Hindsight Bias can impact learning and growth. Accepting that not all events are predictable is crucial. This helps maintain a realistic view of one’s decision-making skills and fosters better critical thinking.

4) Self-Serving Bias

Self-serving bias is a cognitive bias where individuals attribute their successes to internal factors and their failures to external factors. This means they might credit themselves for a team’s win but blame a bad outcome on external conditions.

This bias serves as a defense mechanism, helping protect self-esteem by deflecting blame for failures. For example, if a student does well on a test, they might say it’s because they studied hard. If they do poorly, they might blame the test’s difficulty.

In the workplace, this bias can affect team dynamics. Employees may take credit for successful projects but blame others when things go wrong. It can harm relationships and reduce trust within the team.

Recognizing this bias is the first step in managing it. Accepting that everyone has cognitive biases can help in overcoming it. Being mindful during evaluations of both successes and failures can make assessments more balanced and fair. Learning to accept personal responsibility and acknowledging contributions from others can foster healthier interactions.

For more insights on self-serving bias, including examples, visit this Business Insider article. This can help in understanding how this bias manifests in different scenarios.

5) Optimism Bias

Optimism bias is a cognitive bias where people believe that they are less likely to experience negative events and more likely to experience positive ones. This bias can make individuals think they are invincible or overly lucky.

A classic example is when people underestimate their risk of getting into a car accident. They believe it won’t happen to them, even if statistics suggest otherwise. This can lead to risky behaviors such as not wearing seat belts.

Optimism bias often motivates people to pursue goals ambitiously. By believing in positive outcomes, they may work harder and take more risks, increasing their chances of success. Optimism bias can act as a self-fulfilling prophecy.

It’s essential to recognize when optimism bias might be skewing your perception. Critical thinking can help balance this bias. One approach is to consider possible challenges or setbacks and prepare for them accordingly. By acknowledging potential risks, individuals can make more informed decisions.

Being aware of optimism bias can also improve problem-solving skills. By assessing both positive and negative outcomes realistically, individuals can create more balanced plans and strategies for their goals.

6) Negativity Bias

Negativity bias is a tendency to focus more on negative experiences than positive ones. This can shape how people perceive events and make decisions. For example, they might remember a single criticism more than multiple compliments.

This bias can affect mental health. People who have a strong negativity bias often feel more stress and anxiety. This happens because their minds are tuned to notice and dwell on negative outcomes.

To overcome negativity bias, one can use critical thinking strategies. Start by questioning negative thoughts and looking for evidence that supports or refutes them. This helps create a more balanced view.

Another way is to consciously focus on positive experiences. Keeping a gratitude journal can help. Writing down positive events every day makes them easier to recall.

Using these strategies can diminish the impact of negativity bias and lead to better decision-making. Steps like these can help anyone challenge their own biases and see a more complete picture of their experiences. For more tips on overcoming cognitive biases, check out this resource.

7) Bandwagon Effect

The bandwagon effect is a cognitive bias where individuals adopt behaviors, styles, or attitudes simply because others are doing so. It’s a tendency to follow the crowd, often ignoring one’s own beliefs or values.

This effect is deeply rooted in human nature. People feel a desire to conform to the majority as it provides a sense of security and acceptance.

It often leads to poor decision-making. For instance, a person might buy a product just because it’s popular, rather than evaluating its actual usefulness or quality.

This cognitive bias can be particularly influential in areas like politics, fashion, and technology. Seeing many others adopt a certain behavior or belief can make it appear more valid or correct.

To overcome the bandwagon effect, individuals can practice critical thinking. Questioning why they are making a choice and evaluating the pros and cons independently can help.

Awareness is the first step in combating this bias. By recognizing when they are being influenced by the crowd, people can make more informed and rational decisions.

Educational resources like Verywell Mind provide more insights on this cognitive bias and how to manage it. Understanding such biases can significantly improve one’s decision-making process.

8) Status Quo Bias

Status quo bias is a tendency to prefer things to stay the same. People often stick with their current situation, even if better options are available. This behavior is seen in various aspects of life, including finances, health, and workplace decisions.

One reason for this bias is loss aversion. People fear losing what they currently have more than they value potential gains. This makes them avoid changes.

Regret avoidance also plays a role. People worry about making a wrong choice and feeling regret. So, they choose to keep things as they are to avoid this possibility.

Another reason is the feeling of being overwhelmed by too many choices. Sticking with the familiar is simpler and less stressful.

In the workplace, status quo bias can prevent organizations from embracing new opportunities and innovations. Employees may resist changes that could improve efficiency or profit.

To overcome status quo bias, awareness is key. Recognizing that this bias exists can help individuals and organizations make more rational decisions. Encouraging a culture of change and continuous improvement can also help reduce the impact of this bias.

For more information, you can read about how the status quo bias affects decisions on Verywell Mind or how it impacts the workplace at Wharton.

9) Sunk Cost Fallacy

The Sunk Cost Fallacy is a cognitive bias that makes people continue investing in a losing proposition because of the resources already committed. This might include money, time, or effort.

People often think, “I’ve already spent so much; I can’t stop now.” This thinking ignores the reality that the invested resources cannot be recovered.

For example, a company might keep funding an unprofitable project because of the money already spent. However, future investments should be based on potential returns, not past costs.

To avoid this bias, individuals can reflect on their emotions and get an outside opinion. Looking towards future benefits rather than past investments can lead to better decisions.

Incorporating critical thinking helps. Ask questions like, “Is this still the best option?” or “What are the potential future gains?” This can shift focus from past costs to future outcomes.
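As a rough sketch of that forward-looking test, the Python function below decides whether to keep funding a project by comparing only the expected future return with the cost still to be paid. The function name, parameters, and figures are hypothetical and chosen purely for illustration; the sunk cost is accepted as an argument only to show that it never enters the comparison.

```python
def should_continue(expected_future_return: float,
                    remaining_cost: float,
                    sunk_cost: float = 0.0) -> bool:
    """Return True if finishing is expected to bring in more than it still costs."""
    _ = sunk_cost  # already spent and unrecoverable, so deliberately ignored
    return expected_future_return > remaining_cost


# Hypothetical project: 900,000 already spent, 200,000 still needed,
# and only 150,000 expected back if it is completed.
decision = should_continue(expected_future_return=150_000,
                           remaining_cost=200_000,
                           sunk_cost=900_000)
print(decision)  # False
```

Changing `sunk_cost` to any other value leaves the answer unchanged, which is exactly the point: the money already spent is the same whether the project continues or stops.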

For more details on the effects, visit The Decision Lab or BetterUp. Dealing with this bias lets people make more rational and beneficial choices.

10) Gambler’s Fallacy

The gambler’s fallacy is a common cognitive bias that people experience when they believe that past random events influence future random events. This belief is incorrect, as each event is independent.

For example, if a coin is flipped and lands on heads multiple times, someone might think it is more likely to land on tails next. This belief is false because each flip is independent of the previous ones.

This fallacy often appears in gambling settings. A person might believe that a losing streak in roulette must end soon, leading them to bet more money. This thinking can lead to poor decisions and financial losses.

Understanding the gambler’s fallacy helps individuals make better decisions. Recognizing that past events do not affect future outcomes is crucial. Instead of relying on faulty logic, one should focus on the actual probabilities of events.

To avoid this fallacy, people should educate themselves about the nature of randomness. By doing so, they can approach situations with a clearer mindset and make more informed choices. A deep awareness of how randomness works can prevent the influence of the gambler’s fallacy in decision-making contexts.
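One way to see the independence of coin flips is to simulate it. The sketch below assumes a fair coin, uses Python's standard `random` module with a fixed seed, and measures how often tails follows a run of five heads; the function name, streak length, and flip count are arbitrary choices made for illustration.

```python
import random


def tails_rate_after_streak(num_flips: int, streak_len: int, seed: int = 0) -> float:
    """Estimate how often tails comes up right after `streak_len` heads in a row."""
    rng = random.Random(seed)
    flips = [rng.random() < 0.5 for _ in range(num_flips)]  # True means heads

    # Collect every flip that immediately follows a full streak of heads.
    after_streak = [
        flips[i]
        for i in range(streak_len, num_flips)
        if all(flips[i - streak_len:i])
    ]
    tails = sum(1 for is_heads in after_streak if not is_heads)
    return tails / len(after_streak)


rate = tails_rate_after_streak(num_flips=200_000, streak_len=5)
print(f"Share of tails after 5 heads in a row: {rate:.3f}")
```

If tails were "due" after a streak, the printed share would sit well above one half. In a simulation like this it should land close to 0.5, consistent with each flip being independent of the ones before it.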

For more on this bias, visit Effectiviology’s overview or Statistics by Jim.

11) Availability Heuristic

The availability heuristic is a mental shortcut where people base decisions on recent information or examples that come easily to mind. This might lead to skewed judgments, as what’s most available in memory isn’t always the most accurate or typical.

For example, if someone recently heard about a plane crash, they might overestimate the risk of flying. Despite air travel being statistically safer than driving, the vivid memory of the crash sways their judgment.

This cognitive bias can influence various areas of life, including health choices and financial decisions. People might ignore long-term data and focus on memorable but rare events, potentially leading to poor choices.

Understanding the availability heuristic is crucial. By recognizing its impact, individuals can make more rational and balanced decisions. They should seek out broader information and avoid relying solely on recent or dramatic examples.

For those looking to reduce the effect of this bias, practicing critical thinking and questioning first impressions can help. It’s important to verify information from multiple sources to ensure a well-rounded perspective.

The availability heuristic reminds us that what’s most memorable isn’t always what’s most representative. Making decisions based on a wider range of information can lead to better outcomes.

12) Dunning-Kruger Effect

The Dunning-Kruger Effect is a cognitive bias where individuals with low ability in a task overestimate their competence. This happens because they lack the skills to recognize their own incompetence. They believe they are performing well when they are not.

People affected by this bias often have poor self-awareness. They are unable to evaluate their own performance accurately. This leads to overconfidence in their skills and knowledge.

To overcome this, one can improve meta-cognition. This involves thinking about one’s own thinking and learning processes. Developing self-reflection skills can help individuals assess their abilities more accurately.

Critical thinking plays a crucial role here. Questioning assumptions and seeking feedback are essential steps. This helps in gaining a realistic understanding of one’s capabilities.

Some practical steps include ongoing learning and seeking constructive criticism from others. Engaging in activities that challenge one’s current skill level can also be beneficial.

For more detailed insights, you can refer to this article on Verywell Mind.

Awareness of this bias can lead to better decision-making. It encourages continuous self-improvement and realistic self-assessment.

Understanding Cognitive Biases

Cognitive biases are patterns of thinking that can distort our perception and decision-making. Recognizing and addressing these biases is crucial for making more rational and objective choices.

Definition and Importance

Cognitive biases are systematic errors in thinking that affect decisions and judgments. They often occur when the brain relies on shortcuts, known as heuristics, to process information quickly. These biases can lead to irrational decisions and faulty reasoning.

Understanding these biases helps individuals make more informed and rational choices. By recognizing these mental shortcuts, one can actively work to mitigate their effects and improve critical thinking skills.

Why does this matter? Because cognitive biases affect areas such as business, healthcare, and personal decision-making. Identifying and addressing them can lead to better outcomes in many aspects of life.

Common Examples

Several common cognitive biases affect daily thinking and decision-making:

- Confirmation Bias: Seeking out information that supports one’s own beliefs while ignoring contrary evidence.

- Anchoring Bias: Relying too heavily on the first piece of information received (the “anchor”) when making decisions.

- Hindsight Bias: Believing, after an event has occurred, that one would have predicted or expected the outcome.

- Availability Heuristic: Overestimating the importance of information that is readily available, often because it is memorable or recent.

Recognizing these examples can help in actively countering them. For instance, being aware of confirmation bias can lead to seeking out diverse viewpoints. Similarly, knowing about anchoring bias encourages considering a wider range of information before making decisions.

Role of Critical Thinking

Critical thinking plays a crucial role in recognizing cognitive biases and mitigating their impact. Its key components foster clear analysis, and practicing them brings significant benefits for decision-making.

Key Components

Objectivity: Critical thinking requires examining all sides of an issue without letting personal feelings or biases influence the judgment. This neutral stance helps in evaluating evidence logically.

Analysis: Breaking down complex information into simpler parts enables better understanding and assessment. Analyzing arguments and claims helps uncover hidden assumptions and faulty reasoning.

Evaluation: Assessing the validity and reliability of information sources is crucial. This practice ensures that only credible and relevant data is considered, which reduces the influence of biases.

Reflection: Thinking about one’s own thought processes allows individuals to identify and correct cognitive biases. Reflection promotes self-awareness and encourages considering alternative viewpoints.

Benefits

Improved Decision-Making: By recognizing and addressing cognitive biases, critical thinking enhances the ability to make well-informed decisions. This leads to more accurate and fair outcomes.

Enhanced Problem-Solving: Critical thinking equips individuals with skills to tackle complex problems effectively. It encourages looking beyond initial impressions and exploring different solutions.

Better Communication: Clear thinking translates into clear communication. Critical thinkers can articulate their ideas more effectively and understand others’ perspectives, leading to improved interpersonal interactions.

Increased Creativity: Evaluating ideas critically doesn’t stifle creativity; it can actually enhance it. By questioning assumptions and exploring alternatives, critical thinkers often come up with innovative solutions.

Applying these key components and understanding their benefits helps mitigate the influence of cognitive biases, resulting in improved reasoning and outcomes.

Strategies to Overcome Cognitive Biases

Overcoming cognitive biases is crucial for making sound decisions. Key strategies include recognizing biases when they occur and using critical thinking techniques to analyze and mitigate them.

Awareness and Recognition

Recognizing cognitive biases is the first step in overcoming them. Individuals need to be aware of common biases like confirmation bias, anchoring, and the availability heuristic. By identifying these biases, people can take proactive measures to mitigate their effects.

Common Biases:

- Confirmation Bias: Favoring information that confirms existing beliefs.

- Anchoring: Relying too heavily on the first piece of information received.

- Availability Heuristic: Overestimating the importance of information that comes to mind quickly.

Educating oneself on these biases and actively reflecting during decision-making processes helps in recognizing them. Keeping a checklist of common biases and reviewing past decisions can also lead to better awareness.

Techniques for Critical Analysis

Once biases are recognized, critical thinking techniques can help to overcome them. Using structured approaches like asking open-ended questions, considering alternative viewpoints, and seeking out data from multiple sources are effective strategies.

Techniques:

- Question Assumptions: Regularly challenge personal assumptions and seek evidence that disproves them.

- Diversify Information Sources: Use varied and credible sources to gather information, reducing reliance on a single perspective.

- Employ Checklists: Use decision-making checklists to ensure all aspects are considered without unconscious bias.

Applying these techniques systematically can improve judgment and decision-making. For example, using algorithms and predefined criteria can help in situations where emotional investments are high, as suggested by Harvard Business Review.

Main Challenges When Developing Your Critical Thinking

Written by Argumentful

Every day we are bombarded with information and opinions from all directions. The ability to think critically is more important now than ever.

Critical thinking allows us to evaluate arguments, identify biases, and make informed decisions based on evidence and reasoning.

However, developing this skill is not easy, and there are many challenges that can stand in our way.

In this article, we will explore the main challenges that people face when trying to develop their critical thinking skills and provide some tips and strategies for overcoming them.

• Challenge #1: Confirmation Bias

• Challenge #2: Logical Fallacies

• Challenge #3: Emotions

• Challenge #4: Lack of Information or Misinformation

• Challenge #5: Groupthink

• Challenge #6: Overconfidence Bias

• Challenge #7: Cognitive dissonance

Challenge #1: Confirmation Bias

What is confirmation bias?

Confirmation bias is a tendency to seek out information that supports your existing beliefs and ignore information that contradicts those beliefs. It can be a major obstacle to critical thinking, as it can lead us to only consider evidence that confirms our preconceived notions and dismiss evidence that challenges them.

Raymond S. Nickerson, a psychology professor, considers confirmation bias a common human tendency that can have negative consequences for decision making and information processing.

For example, in politics, people may only consume news from sources that align with their political ideology and ignore information that challenges their beliefs.

Or in the workplace, managers may only seek out feedback that confirms their leadership style and ignore feedback that suggests they need to make changes.

How do critical thinkers fight confirmation bias?

To overcome confirmation bias, it is important to actively seek out information from a variety of sources and perspectives.

This can involve reading news articles and opinion pieces from a range of sources, engaging in discussions with people who hold different opinions, and being open to changing our own beliefs based on new evidence.

It can also be helpful to regularly question our own assumptions and biases.

Another strategy is to practice “steel manning”, which involves actively trying to understand and strengthen arguments that challenge our own beliefs, rather than just attacking weaker versions of those arguments.

Nickerson suggests the following strategies that can be used to mitigate confirmation bias:

- Considering alternative explanations: You can make a conscious effort to consider alternative explanations for a given set of data or evidence, rather than simply focusing on information that supports your pre-existing beliefs.

- Seeking out disconfirming evidence: Try to actively seek out evidence that contradicts your pre-existing beliefs, rather than simply ignoring or discounting it.

- Using formal decision-making tools: Use formal decision-making tools, such as decision trees or decision matrices, to help structure your thinking and reduce the influence of biases.

- Encouraging group decision making: Groups can be more effective at mitigating confirmation bias than individuals, since group members can challenge each other’s assumptions and biases.

- Adopting a scientific mindset: You can adopt a more scientific mindset, which involves a willingness to consider multiple hypotheses, test them rigorously, and revise them based on evidence.

Nickerson suggests that these strategies may be effective at mitigating confirmation bias, but notes that they may require effort and practice to implement successfully.
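As one illustration of the formal decision-making tools mentioned above, here is a minimal sketch of a weighted decision matrix in Python. The option names, criteria, weights, and ratings are all made up for the example; the point is only that writing down criteria and weights before scoring makes it harder to bend the evaluation toward a favoured option.

```python
from typing import Dict


def score_options(
    weights: Dict[str, float],
    ratings: Dict[str, Dict[str, float]],
) -> Dict[str, float]:
    """Return the weighted average rating for each option.

    weights: importance of each criterion (any positive numbers).
    ratings: for each option, a rating per criterion (here on a 1-5 scale).
    """
    total_weight = sum(weights.values())
    return {
        option: sum(weights[c] * option_ratings[c] for c in weights) / total_weight
        for option, option_ratings in ratings.items()
    }


# Hypothetical data: fix the criteria and weights BEFORE rating the options.
weights = {"cost": 3, "quality": 5, "speed": 2}
ratings = {
    "vendor_a": {"cost": 4, "quality": 2, "speed": 5},
    "vendor_b": {"cost": 2, "quality": 5, "speed": 3},
}

totals = score_options(weights, ratings)
best = max(totals, key=totals.get)
print(totals, best)
```

A matrix like this does not remove bias from the individual ratings, but it does expose them: anyone reviewing the decision can see which weight or rating they disagree with.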

By being aware of confirmation bias and actively working to overcome it, we can all develop a more open-minded approach to critical thinking and make more informed decisions.

Challenge #2: Logical Fallacies

Critical thinking requires the ability to identify and analyze arguments for their strengths and weaknesses. One major obstacle to this process is the presence of logical fallacies.

What are logical fallacies?

Logical fallacies are errors in reasoning that can make an argument appear convincing, even if it is flawed.

There are many types of logical fallacies, including ad hominem attacks, false dichotomies, strawman arguments, and appeals to emotion. These fallacies can appear in everyday discourse, from political debates to advertising campaigns, and can lead to flawed conclusions and decisions.

For example, a politician might use an ad hominem attack to undermine an opponent’s credibility rather than addressing the opponent’s argument directly.

Similarly, an advertisement might use emotional appeals to distract consumers from the actual merits of a product.

For an engaging introduction to the topic, check out Ali Almossawi’s book on logical fallacies, “An Illustrated Book of Bad Arguments”. It provides a visually appealing perspective, using illustrations and examples to explain many common fallacies. It is aimed at a general audience, but provides a good overview of the topic for beginners.

How do critical thinkers fight logical fallacies?

To avoid being swayed by logical fallacies, it is important to be able to recognize them.

• One strategy is to familiarize yourself with common fallacies and their definitions.

• Additionally, it is important to analyse an argument’s premises and conclusions to identify any flaws in its reasoning.

• Finally, it can be helpful to question assumptions and consider alternative perspectives to ensure that your thinking is not influenced by logical fallacies.

A good source for a deep dive into logical fallacies is The Fallacy Files by Gary N. Curtis. This website provides an extensive list of common logical fallacies, along with explanations and examples of each. It emphasizes the importance of being able to identify and avoid fallacies, and provides resources for improving critical thinking skills.

By developing the ability to identify and avoid logical fallacies, you can become a more effective critical thinker and make more informed decisions.

Challenge #3: Emotions

Emotions can have a significant impact on critical thinking and decision-making. Our emotional responses to information can affect our perception of it and bias our judgments. For example, if we have a strong emotional attachment to a particular belief or idea, we may be more likely to dismiss information that contradicts it and accept information that supports it, even if the information is flawed or unreliable.

Additionally, emotional reactions can lead to impulsive decision-making, where we act without fully considering all available information or weighing the potential consequences. This can be particularly problematic in high-stakes situations, such as in the workplace or in personal relationships.

Jennifer S. Lerner, Ye Li, Piercarlo Valdesolo, and Karim S. Kassam explore the relationship between emotions and decision making, including the role of emotions in shaping cognitive processes such as attention, memory, and judgment. They suggest that emotions can influence decision making in both positive and negative ways, and that understanding how emotions affect decision making is an important area of research.

How do critical thinkers manage emotions?

To manage the role of emotions in critical thinking, it is important to first become aware of our emotional reactions and biases. This can be done through mindfulness practices, such as meditation or journaling, where we can reflect on our thoughts and feelings without judgment.

It can also be helpful to actively seek out diverse perspectives and information, as exposure to new and varied ideas can help to broaden our understanding and reduce emotional attachments to particular beliefs. Additionally, taking a pause before making a decision or responding to information can provide time to reflect on our emotional reactions and consider all available information in a more rational and objective manner.

Overall, recognizing the impact of emotions on critical thinking and developing strategies for managing them can lead to more informed and effective decision-making.

Challenge #4: Lack of Information or Misinformation

Critical thinking relies heavily on having accurate and reliable information. However, in today’s age of rapid information sharing, it is easy to be inundated with an overwhelming amount of information, and distinguishing fact from fiction can be a daunting task. Additionally, misinformation and propaganda can be intentionally spread to manipulate opinions and beliefs.

Pew Research Center found that many Americans are concerned about the impact of misinformation on democracy and that fake news can erode trust in institutions and hinder critical thinking.

One example of the impact of misinformation is the spread of conspiracy theories, such as the belief that climate change is a hoax. These beliefs can lead to negative consequences for us and society as a whole, such as a lack of action on climate change.

How do critical thinkers overcome the lack of information or misinformation?

To overcome the challenge of misinformation and a lack of information, critical thinkers must develop a habit of fact-checking and verifying information. This means seeking out multiple sources of information and analyzing the credibility and biases of each source. Critical thinkers must also be willing to adjust their beliefs based on new evidence and be open to changing their opinions.

Pew Research Center suggests that media literacy education can help people become more discerning consumers of information.

• A good source for developing media literacy is UNESCO’s “Media and Information Literacy: Curriculum for Teachers”. The publication emphasizes the importance of teaching students to critically evaluate information in order to become informed and responsible citizens. It provides a framework for teaching media and information literacy skills, including critical thinking, and emphasizes the need to teach students how to recognize and avoid misinformation.

• Another source worth checking out is New York Times Events’ video on How to Teach Critical Thinking in an Age of Misinformation. The speakers suggest that educators should focus on teaching students to ask probing questions, evaluate evidence, and consider alternative perspectives. They also note that critical thinking skills are especially important in an age of information overload and misinformation.

• Furthermore, it is important to be aware of your own biases and limitations when seeking out and evaluating information. Confirmation bias, discussed in Challenge #1, can also play a role in accepting misinformation or overlooking important information that does not align with our pre-existing beliefs.

By being diligent and thorough in our information gathering and evaluation, we can overcome the challenge of misinformation and make more informed decisions.

Challenge #5: Groupthink

What is groupthink?

According to Sunstein and Hastie, groupthink occurs when members of a group prioritize consensus and social harmony over critical evaluation of alternative ideas. They suggest that groupthink can lead to a narrowing of perspectives and a lack of consideration for alternative viewpoints, which can result in flawed decision-making. They argue that groupthink is particularly dangerous in situations where group members are highly cohesive, where there is a strong leader or dominant voice, or where the group lacks diverse perspectives.

The desire for group cohesion can lead to a reluctance to challenge the consensus or express dissenting opinions, resulting in flawed decision-making and missed opportunities for innovation.

One example of groupthink is the space shuttle Challenger disaster in 1986, where concerns about the risk of launching the shuttle in cold weather were not acted on, due to pressure from superiors and a culture of overconfidence. This led to a catastrophic failure that claimed the lives of all seven crew members.

How do critical thinkers overcome groupthink?

To overcome groupthink, it is important to encourage diversity of thought and promote constructive disagreement.

There are several strategies for avoiding groupthink, including promoting independent thinking and dissenting opinions, encouraging diverse perspectives, and engaging in active listening and critical evaluation of alternative ideas.

This can be achieved by seeking out dissenting views and challenging assumptions, creating a culture of open communication and feedback, and avoiding hierarchies that can stifle innovation and creativity. It is also important to value and reward independent thinking, even if it goes against the prevailing consensus.

For more ways to overcome groupthink, check out this comprehensive list of strategies from Northwestern’s School of Education and Social Policy.

Developing critical thinking skills can help you to overcome groupthink and make more informed and effective decisions. By being aware of the challenges of group dynamics and actively seeking out diverse perspectives, you can cultivate a more independent and objective approach to critical thinking, ultimately leading to better outcomes and a more robust and resilient society.

Challenge #6: Overconfidence Bias

Another challenge to developing critical thinking is overconfidence bias, which is the tendency to overestimate our own abilities and knowledge. This bias can lead us to make hasty decisions or overlook important information, which can ultimately hinder our critical thinking skills.

Kahneman explains how the human mind has two modes of thinking: System 1, which is fast and intuitive, and System 2, which is slow and deliberative. He argues that overconfidence bias is a common flaw in System 1 thinking, which can lead us to overestimate our knowledge and abilities. Kahneman suggests that improving critical thinking requires training to recognize and control our overconfidence bias.

Overconfidence bias can occur in various contexts, such as in the workplace, academic settings, or even in personal relationships. For instance, you may be overconfident in your ability to complete a task at work without seeking help or feedback from colleagues, which could result in suboptimal outcomes.

Lichtenstein and Fischhoff conducted a study on overconfidence bias, in which they found that people tend to overestimate their knowledge and abilities in areas where they have limited expertise.

Tversky and Kahneman’s seminal paper on heuristics and biases discusses overconfidence bias as a common flaw in human decision-making. They suggest that overconfidence bias can lead us to make inaccurate judgments and can contribute to a wide range of cognitive biases.

How do critical thinkers overcome overconfidence bias?

To overcome overconfidence bias, you should take a more humble and reflective approach to your own abilities and knowledge. This can involve seeking feedback from others, taking the time to consider different perspectives, and being open to constructive criticism.

Moore and Healy offer several strategies for reducing overconfidence bias, including increasing feedback, considering alternative explanations, and using probabilistic reasoning.
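One simple way to combine feedback with probabilistic reasoning is to record how confident you were in each judgment and later compare that with how often you were right. The Python sketch below is only an illustration of that idea, not a method taken from the authors cited here; the function name `calibration_gap` and the sample log are hypothetical.

```python
from typing import List, Tuple


def calibration_gap(judgments: List[Tuple[float, bool]]) -> float:
    """Return mean stated confidence minus the actual hit rate.

    judgments: (confidence between 0 and 1, whether the judgment was correct).
    A positive result suggests overconfidence, a negative one underconfidence.
    """
    if not judgments:
        raise ValueError("need at least one judgment")
    mean_confidence = sum(conf for conf, _ in judgments) / len(judgments)
    hit_rate = sum(1 for _, correct in judgments if correct) / len(judgments)
    return mean_confidence - hit_rate


# Hypothetical log of five predictions with the confidence stated at the time.
log = [(0.9, True), (0.9, False), (0.8, True), (0.8, False), (0.6, True)]
gap = calibration_gap(log)
print(f"average confidence exceeds hit rate by {gap:.2f}")
```

With only a handful of entries the gap is noisy, so a log like this is more useful as a habit kept over many decisions than as a one-off measurement.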

Another strategy is to cultivate a growth mindset, which emphasizes the belief that your abilities can be developed through effort and persistence. By adopting this mindset, you can avoid becoming complacent and continue to challenge yourself to develop your critical thinking skills.

Overall, overcoming overconfidence bias requires a willingness to acknowledge our own limitations and to actively seek out opportunities for growth and learning.

Challenge #7: Cognitive dissonance

Cognitive dissonance is a psychological phenomenon that occurs when a person holds two or more conflicting beliefs, values, or ideas. This internal conflict can create feelings of discomfort, which can lead to irrational and inconsistent behaviour. Cognitive dissonance can pose a significant challenge to critical thinking by distorting our perceptions and leading us to accept information that confirms our existing beliefs while dismissing or rationalizing away information that challenges them.

For example, a person who believes that they are a good driver may become defensive and dismissive when presented with evidence of their unsafe driving habits, such as speeding or not using a turn signal. This person may experience cognitive dissonance, as their belief in their driving ability conflicts with the evidence presented to them.

Tavris and Aronson’s book, Mistakes Were Made (But Not by Me), examines the phenomenon of cognitive dissonance in everyday life, using real-life examples to illustrate how we justify our beliefs and actions, even in the face of evidence to the contrary. It’s a worthwhile read to understand the psychological mechanisms that underlie cognitive dissonance and the implications of dissonance for understanding interpersonal conflict, group behaviour, and decision-making.

How do critical thinkers overcome cognitive dissonance?

Overcoming cognitive dissonance requires a willingness to confront and examine our own beliefs and assumptions.

Tavris and Aronson offer several strategies for recognizing and overcoming cognitive dissonance.

• We should be aware of the potential for cognitive dissonance to arise in situations where our beliefs, attitudes, or behaviours are inconsistent. By recognizing the possibility of dissonance, we can be more prepared to manage the discomfort that may result.

• We should engage in self-reflection to examine our beliefs, attitudes, and behaviours more closely. By questioning assumptions and considering alternative perspectives, we may be able to reduce the cognitive dissonance we experience.

• We should seek out diverse perspectives and engage in constructive dialogue with others. By listening to and respecting different viewpoints, we can gain a deeper understanding of ourselves and others, which may help to reduce cognitive dissonance.

Finally, the authors emphasize the importance of taking responsibility for our own actions and decisions. By acknowledging mistakes and being accountable for them, we can avoid the temptation to justify our behaviour and maintain consistency with our beliefs and attitudes.

In conclusion, developing effective critical thinking skills is essential for making informed decisions and navigating complex issues. However, there are several challenges that can hinder the development of critical thinking.

Confirmation bias, logical fallacies, emotions, lack of information or misinformation, groupthink, overconfidence bias, and cognitive dissonance are all common challenges that you may face when attempting to engage in critical thinking.

To overcome these challenges, it is important to develop strategies such as seeking out diverse perspectives, fact-checking and verifying information, and managing emotions. Additionally, it is crucial to remain open-minded and willing to consider alternative viewpoints, even if they challenge your existing beliefs. By recognizing and addressing these challenges, you can continue to improve your critical thinking skills and become a more effective problem-solver and decision-maker in your personal and professional life.

3 Fallacies

I. What Are Fallacies?

Fallacies are mistakes of reasoning, as opposed to making mistakes that are of a factual nature. If I counted twenty people in the room when there were in fact twenty-one, then I made a factual mistake. On the other hand, if I believe that there are round squares I believe something that is contradictory. A belief in “round squares” is a mistake of reasoning and contains a fallacy because, if my reasoning were good, I would not believe something that is logically inconsistent with reality.

In some discussions, a fallacy is taken to be an undesirable kind of argument or inference. In our view, this definition of fallacy is rather narrow, since we might want to count certain mistakes of reasoning as fallacious even though they are not presented as arguments. For example, making a contradictory claim seems to be a case of fallacy, but a single claim is not an argument. Similarly, putting forward a question with an inappropriate presupposition might also be regarded as a fallacy, but a question is also not an argument. In both of these situations though, the person is making a mistake of reasoning since they are doing something that goes against one or more principles of correct reasoning. This is why we would like to define fallacies more broadly as violations of the principles of critical thinking, whether or not the mistakes take the form of an argument.

The study of fallacies is an application of the principles of critical thinking. Being familiar with typical fallacies can help us avoid them and help explain other people’s mistakes.

There are different ways of classifying fallacies. Broadly speaking, we might divide fallacies into four kinds:

- Fallacies of inconsistency: cases where something inconsistent or self-defeating has been proposed or accepted.

- Fallacies of relevance: cases where irrelevant reasons are being invoked or relevant reasons being ignored.

- Fallacies of insufficiency: cases where the evidence supporting a conclusion is insufficient or weak.

- Fallacies of inappropriate presumption: cases where we have an assumption or a question presupposing something that is not reasonable to accept in the relevant conversational context.

II. Fallacies of Inconsistency

Fallacies of inconsistency are cases where something inconsistent, self-contradictory or self-defeating is presented.

1. Inconsistency

Here are some examples:

- “One thing that we know for certain is that nothing is ever true or false.” – If there is something we know for certain, then there is at least one truth that we know. So it can’t be the case that nothing is true or false.

- “Morality is relative and is just a matter of opinion, and so it is always wrong to impose our opinions on other people.” – But if morality is relative, it is also a relative matter whether we should impose our opinions on other people. If we should not do that, there is at least one thing that is objectively wrong.

- “All general claims have exceptions.” – This claim itself is a general claim, and so if it is to be regarded as true we must presuppose that there is an exception to it, which would imply that there exists at least one general claim that does not have an exception. So the claim itself is inconsistent.

2. Self-Defeating Claims

A self-defeating statement is a statement that, strictly speaking, is not logically inconsistent but is instead obviously false. Consider these examples:

- Very young children are fond of saying “I am not here” when they are playing hide-and-seek. The statement itself is not logically inconsistent, since it is logically possible for the child not to be where she is. What is impossible is to utter the sentence as a true sentence (unless it is used, for example, in a recorded telephone message).

- Someone who says, “I cannot speak any English.”

- Here is an actual example: A TV program in Hong Kong was critical of the Government. When the Hong Kong Chief Executive Mr. Tung was asked about it, he replied, “I shall not comment on such distasteful programs.” Mr. Tung’s remark was not logically inconsistent, because what it describes is a possible state of affairs. But it is nonetheless self-defeating because calling the program “distasteful” is to pass a comment!

III. Fallacies of Relevance

1. Taking Irrelevant Considerations into Account

This includes defending a conclusion by appealing to irrelevant reasons, e.g., inappropriate appeal to pity, popular opinion, tradition, authority, etc. An example would be when a student failed a course and asked the teacher to give him a pass instead, because “his parents will be upset.” Since grades should be given on the basis of performance, the reason being given is quite irrelevant.

Similarly, suppose someone criticizes the Democratic Party’s call for direct elections in Hong Kong as follows: “These arguments supporting direct elections have no merit because they are advanced by Democrats who naturally stand to gain from it.” This is again fallacious because whether the person advancing the argument has something to gain from direct elections is a completely different issue from whether there ought to be direct elections.

2. Failing to Take Relevant Considerations into Account

For example, it is not unusual for us to ignore or downplay criticisms because we do not like them, even when those criticisms are justified. Or sometimes we might be tempted to make a snap decision, believing knee-jerk reactions are the best when, in fact, we should be investigating the situation more carefully and doing more research.

Of course, if we fail to consider a relevant fact simply because we are ignorant of it, then this lack of knowledge does not constitute a fallacy.

IV. Fallacies of Insufficiency

Fallacies of insufficiency are cases where insufficient evidence is provided in support of a claim. Most common fallacies fall within this category. Here are a few popular types:

1. Limited Sampling

- Momofuku Ando, the inventor of instant noodles, died at the age of 96. He said he ate instant noodles every day. So instant noodles cannot be bad for your health.

- A black cat crossed my path this morning, and I got into a traffic accident this afternoon. Black cats are really unlucky.

In both cases the observations are relevant to the conclusion, but a lot more data is needed to support the conclusion, e.g., studies showing that many other people who eat instant noodles live longer, or that those who encounter black cats are more likely to suffer accidents.

2. Appeal to Ignorance

- We have no evidence showing that he is innocent. So he must be guilty.

If someone is guilty, it would indeed be hard to find evidence showing that he is innocent. But perhaps there is no evidence to point either way, so a lack of evidence is not enough to prove guilt.

3. Naturalistic Fallacy

- Many children enjoy playing video games, so we should not stop them from playing.

Many naturalistic fallacies are examples of fallacies of insufficiency. Empirical facts by themselves are not sufficient for normative conclusions, even if they are relevant.

There are many other kinds of fallacy of insufficiency. See if you can identify some of them.

V. Fallacies of Inappropriate Presumption

Fallacies of inappropriate presumption are cases where we have explicitly or implicitly made an assumption that is not reasonable to accept in the relevant context. Some examples include:

- Many people like to ask whether human nature is good or evil. This presupposes that there is such a thing as human nature and that it must be either good or bad. But why should these assumptions be accepted, and are they the only options available? What if human nature is neither good nor bad? Or what if good or bad nature applies only to individual human beings?

- Consider the question “Have you stopped being an idiot?” Whether you answer “yes” or “no,” you admit that you are, or have been, an idiot. Presumably you do not want to make any such admission. We can point out that this question has a false assumption.

- “Same-sex marriage should not be allowed because by definition a marriage should be between a man and a woman.” This argument assumes that only a heterosexual conception of marriage is correct. But this begs the question against those who defend same-sex marriages and is not an appropriate assumption to make when debating this issue.

VI. List of Common Fallacies

ad hominem

A theory is discarded not because of any evidence against it or lack of evidence for it, but because of the person who argues for it. Example:

A: The Government should enact minimum-wage legislation so that workers are not exploited. B: Nonsense. You say that only because you cannot find a good job.

ad ignorantiam (appeal to ignorance)

The truth of a claim is established only on the basis of lack of evidence against it. A simple, obvious example of this fallacy is to argue that unicorns exist because there is no evidence against their existence. At first sight it seems that many theories that we describe as “scientific” involve such a fallacy. For example, the first law of thermodynamics holds because so far there has not been any negative instance that would serve as evidence against it. But notice that in cases like this there is evidence for the law, namely positive instances. Notice also that this fallacy does not apply to situations where there are only two rival claims and one has already been falsified. In situations such as this, we may justly establish the truth of the other even if we cannot find evidence for or against it.

ad misericordiam (appeal to pity)

In offering an argument, pity is appealed to. Usually this happens when people argue for special treatment on the basis of their need, e.g., a student argues that the teacher should let them pass the examination because they need it in order to graduate. Of course, pity might be a relevant consideration in certain conditions, as in contexts involving charity.

ad populum (appeal to popularity)

The truth of a claim is established only on the basis of its popularity and familiarity. This is the fallacy committed by many commercials. Surely you have heard of commercials implying that we should buy a certain product because it has made it to the top of a sales ranking, or because the brand is the city’s “favorite.”

Affirming the consequent

Inferring that P is true solely because Q is true and it is also true that if P is true, Q is true.

The problem with this type of reasoning is that it ignores the possibility that there are other conditions apart from P that might lead to Q. For example, if there is a traffic jam, a colleague may be late for work. But if we argue from his being late to there being a traffic jam, we are guilty of this fallacy – the colleague may be late due to a faulty alarm clock.

Of course, if we have evidence showing that P is the only or most likely condition that leads to Q, then we can infer that P is likely to be true without committing a fallacy.

Begging the question (petitio principii)

In arguing for a claim, the claim itself is already assumed in the premise. Example: “God exists because this is what the Bible says, and the Bible is reliable because it is the word of God.”

Complex question or loaded question

A question is posed in such a way that a person, no matter what answer they give to the question, will inevitably commit themselves to some other claim, which should not be presupposed in the context in question.

A common tactic is to ask a yes-no question that tricks people into agreeing to something they never intended to say. For example, if you are asked, “Are you still as self-centered as you used to be?”, no matter whether you answer “yes” or “no,” you are bound to admit that you were self-centered in the past. Of course, the same question would not count as a fallacy if the presupposition of the question were indeed accepted in the conversational context, i.e., that the person being asked the question had been verifiably self-centered in the past.

Composition (opposite of division)

The whole is assumed to have the same properties as its parts. Anne might be humorous and fun-loving and an excellent person to invite to the party. The same might be true of Ben, Chris and David, considered individually. But it does not follow that it will be a good idea to invite all of them to the party. Perhaps they hate each other and the party will be ruined.

Denying the antecedent

Inferring that Q is false just because if P is true, Q is also true, but P is false.

This fallacy is similar to the fallacy of affirming the consequent. Again the problem is that some alternative explanation or cause might be overlooked. Although P is false, some other condition might be sufficient to make Q true.

Example: If there is a traffic jam, a colleague may be late for work. But it is not right to argue in the light of smooth traffic that the colleague will not be late. Again, his alarm clock may have stopped working.
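The traffic example can be turned into a small toy model. The sketch below is an illustration only: it assumes exactly two possible causes of lateness (a traffic jam and a failed alarm clock), either of which is sufficient on its own, and the names `is_late` and `worlds` are hypothetical.

```python
from itertools import product

def is_late(traffic_jam: bool, alarm_failed: bool) -> bool:
    """Toy model (an assumption): either cause alone is enough to make the colleague late."""
    return traffic_jam or alarm_failed

# Enumerate every combination of the two possible causes.
worlds = [
    {"traffic_jam": jam, "alarm_failed": alarm, "late": is_late(jam, alarm)}
    for jam, alarm in product([True, False], repeat=2)
]

# "If there is a traffic jam, the colleague is late" holds in every world of this model...
rule_holds = all(w["late"] for w in worlds if w["traffic_jam"])

# ...yet smooth traffic does not guarantee that the colleague is on time.
late_without_jam = [w for w in worlds if not w["traffic_jam"] and w["late"]]

print(rule_holds)        # True
print(late_without_jam)  # [{'traffic_jam': False, 'alarm_failed': True, 'late': True}]
```

Because at least one world has smooth traffic and a late colleague, the inference from "no traffic jam" to "not late" does not go through.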

Division (opposite of composition)

The parts of a whole are assumed to have the same properties as the whole. It is possible that, as a whole, a company is very effective, while some of its departments are not. It would be inappropriate to assume that every department is effective just because the company is.

Equivocation

Putting forward an argument where a word changes meaning without having it pointed out. For example, some philosophers argue that all acts are selfish. Even if you strive to serve others, you are still acting selfishly because your act is just to satisfy your desire to serve others. But surely the word “selfish” has different meanings in the premise and the conclusion – when we say a person is selfish we usually mean that he does not strive to serve others. To say that a person is selfish because he is doing something he wants, even when what he wants is to help others, is to use the term “selfish” with a different meaning.

False dilemma

Presenting a limited set of alternatives when there are others that are worth considering in the context. Example: “Every person is either my enemy or my friend. If they are my enemy, I should hate them. If they’re my friend, I should love them. So I should either love them or hate them.” Obviously, the conclusion is too extreme because most people are neither your enemy nor your friend.

Gambler’s fallacy

Treating statistically independent events as if they were dependent. The untrained mind tends to think that, for example, if a fair coin is tossed five times and the results are all heads, then the next toss is more likely to be a tail. It is not, however. If the coin is fair, the result of each toss is completely independent of the others. Notice that the fallacy hinges on the fact that the final result is not known. Had the final result been known already, the statistics would have been dependent.
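The independence claim can be verified by exact counting rather than by intuition. The sketch below is an illustration, assuming a fair coin so that all six-toss sequences are equally likely; the variable names are hypothetical.

```python
from fractions import Fraction
from itertools import product

# All equally likely outcomes of six tosses of a fair coin.
sequences = list(product("HT", repeat=6))

# Keep only those whose first five tosses were all heads.
after_five_heads = [s for s in sequences if s[:5] == ("H",) * 5]

# Probability of a tail on the sixth toss, given five heads so far.
p_tail_given_streak = Fraction(
    sum(1 for s in after_five_heads if s[5] == "T"), len(after_five_heads)
)

# Unconditional probability of a tail on the sixth toss.
p_tail = Fraction(sum(1 for s in sequences if s[5] == "T"), len(sequences))

print(p_tail_given_streak, p_tail)  # 1/2 1/2
```

The two probabilities are equal, so a streak of five heads tells us nothing about the sixth toss.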

Genetic fallacy

Thinking that because X derives from Y, and because Y has a certain property, that X must also possess that same property. Example: “His father is a criminal, so he must also be up to no good.”

Non sequitur

A conclusion is drawn that does not follow from the premise. This is not a specific fallacy but a very general term for a bad argument. So a lot of the examples above and below can be said to be non sequiturs.

Post hoc, ergo propter hoc (literally, “after this, therefore because of this”)

Inferring that X must be the cause of Y just because X is followed by Y.

For example, having visited a graveyard, I fall ill and infer that graveyards are spooky places that cause illnesses. Of course, this inference is not warranted since this might just be a coincidence. However, a lot of superstitious beliefs commit this fallacy.

Red herring

Within an argument some irrelevant issue is raised that diverts attention from the main subject. The function of the red herring is sometimes to help express a strong, biased opinion. The red herring (the irrelevant issue) serves to increase the force of the argument in a very misleading manner.

For example, in a debate as to whether God exists, someone might argue that believing in God gives peace and meaning to many people’s lives. This would be an example of a red herring since whether religions can have a positive effect on people is irrelevant to the question of the existence of God. The positive psychological effect of a belief is not a reason for thinking that the belief is true.

Slippery slope

Arguing that if an opponent were to accept some claim C1, then they have to accept some other closely related claim C2, which in turn commits the opponent to a still further claim C3, eventually leading to the conclusion that the opponent is committed to something absurd or obviously unacceptable.

This style of argumentation constitutes a fallacy only when it is inappropriate to think that if one were to accept the initial claim, one must accept all the other claims.

An example: “The government should not prohibit drugs. Otherwise the government should also ban alcohol or cigarettes. And then fatty food and junk food would have to be regulated too. The next thing you know, the government would force us to brush our teeth and do exercises every day.”

Straw man

Attacking an opponent while falsely attributing to them an implausible position that is easily defeated.

Example: When many people argue for more democracy in Hong Kong, a typical “straw man” reply is to say that more democracy is not warranted because it is wrong to believe that democracy is the solution to all of Hong Kong’s problems. But those who support more democracy in Hong Kong never suggest that democracy can solve all problems (e.g., pollution), and they might even agree that blindly accepting anything is rarely the correct course of action, whether it is democracy or not. These criticisms attack implausible “straw man” positions and do not address the real arguments for democracy.

Suppressed evidence

Where there is contradicting evidence, only confirming evidence is presented.

VII. Exercises

Identify any fallacy in each of these passages. If no fallacy is committed, select “no fallacy involved.”

1. Mr. Lee’s views on Japanese culture are wrong. This is because his parents were killed by the Japanese army during World War II and that made him anti-Japanese all his life.

2. Every ingredient of this soup is tasty. So this must be a very tasty soup.

3. Smoking causes cancer because my father was a smoker and he died of lung cancer.

4. Professor Lewis, the world authority on logic, claims that all wives cook for their husbands. But the fact is that his own wife does not cook for him. Therefore, his claim is false.

5. If Catholicism is right, then no women should be allowed to be priests. But Catholicism is wrong. Therefore, some women should be allowed to be priests.

6. God does not exist because every argument for the existence of God has been shown to be unsound.

7. The last three times I have had a cold I took large doses of vitamin C. On each occasion, the cold cleared up within a few days. So vitamin C helped me recover from colds.

8. The union’s case for more funding for higher education can be ignored because it is put forward by the very people – university staff – who would benefit from the increased money.

9. Children become able to solve complex problems and think of physical objects objectively at the same time that they learn language. Therefore, these abilities are caused by learning a language.

10. If cheap things are no good then this cheap watch is no good. But this watch is actually quite good. So some good things are cheap.

Critical Thinking Copyright © 2019 by Brian Kim is licensed under a Creative Commons Attribution 4.0 International License, except where otherwise noted.

10 Thinking Errors That Can Crush Our Mental Strength

These thinking errors make it impossible to flex your mental muscle.

Posted January 24, 2015 | Reviewed by Matt Huston

Mental strength requires a three-pronged approach: managing our thoughts, regulating our emotions, and behaving productively despite our circumstances.

While all three areas can be a struggle, it's often our thoughts that make it most difficult to be mentally strong.

As we go about our daily routines, our internal monologue narrates our experience. Our self-talk guides our behavior and influences the way we interact with others. It also plays a major role in how we feel about ourselves, other people, and the world in general.

Quite often, however, our conscious thoughts aren't realistic; they're irrational and inaccurate. Believing our irrational thoughts can lead to problems, including communication issues, relationship problems, and unhealthy decisions.

Whether you're striving to reach personal or professional goals, the key to success often starts with recognizing and replacing inaccurate thoughts. The most common thinking errors can be divided into these 10 categories, which are adapted from David Burns's book, Feeling Good: The New Mood Therapy.

1. All-or-Nothing Thinking. Sometimes we see things as being black or white: Perhaps you have two categories of coworkers in your mind—the good ones and the bad ones. Or maybe you look at each project as either a success or a failure. Recognize the shades of gray, rather than putting things in terms of all good or all bad.

2. Overgeneralizing. It's easy to take one particular event and generalize it to the rest of our life. If you failed to close one deal, you may decide, "I'm bad at closing deals." Or if you are treated poorly by one family member, you might think, "Everyone in my family is rude." Take notice of times when an incident may apply to only one specific situation, instead of all other areas of life.

3. Filtering Out the Positive. If nine good things happen, and one bad thing, sometimes we filter out the good and zoom in on the bad. Maybe we declare we had a bad day, despite the positive events that occurred. Or maybe we look back at our performance and declare it was terrible because we made a single mistake. Filtering out the positive can prevent you from establishing a realistic outlook on a situation. Develop a balanced outlook by noticing both the positive and the negative.

4. Mind-Reading. We can never be sure what someone else is thinking. Yet, everyone occasionally assumes they know what's going on in someone else's mind. Thinking things like "He must have thought I was stupid at the meeting" makes inferences that aren't necessarily based on reality. Remind yourself that you may not be making accurate guesses about other people's perceptions.

5. Catastrophizing. Sometimes we think things are much worse than they actually are. If you fall short on meeting your financial goals one month you may think, "I'm going to end up bankrupt," or "I'll never have enough money to retire," even though there's no evidence that the situation is nearly that dire. It can be easy to get swept up into catastrophizing a situation once your thoughts become negative. When you begin predicting doom and gloom, remind yourself that there are many other potential outcomes.

6. Emotional Reasoning. Our emotions aren't always based on reality but we often assume those feelings are rational. If you're worried about making a career change, you might assume, "If I'm this scared about it, I just shouldn't change jobs." Or, you may be tempted to assume, "If I feel like a loser, I must be a loser." It's essential to recognize that emotions, just like our thoughts, aren't always based on the facts.

7. Labeling. Labeling involves putting a name to something. Instead of thinking, "He made a mistake," you might label your neighbor as "an idiot." Labeling people and experiences places them into categories that are often based on isolated incidents. Notice when you try to categorize things and work to avoid placing mental labels on everything.

8. Fortune-telling. Although none of us knows what will happen in the future, we sometimes like to try our hand at fortune-telling. We think things like, "I'm going to embarrass myself tomorrow," or "If I go on a diet, I'll probably just gain weight." These types of thoughts can become self-fulfilling prophecies if you're not careful. When you're predicting doom and gloom, remind yourself of all the other possible outcomes.

9. Personalization. As much as we'd like to say we don't think the world revolves around us, it's easy to personalize everything. If a friend doesn't call back, you may assume, "She must be mad at me," or if a co-worker is grumpy, you might conclude, "He doesn't like me." When you catch yourself personalizing situations, take time to point out other possible factors that may be influencing the circumstances.

10. Unreal Ideal. Making unfair comparisons between ourselves and other people can ruin our motivation. Looking at someone who has achieved much success and thinking, "I should have been able to do that," isn't helpful, especially if that person had some lucky breaks or competitive advantages along the way. Rather than measuring your life against someone else's, commit to focusing on your own path to success.

Fixing Thinking Errors