Systematic Reviews

- Levels of Evidence

- Evidence Pyramid

- Joanna Briggs Institute

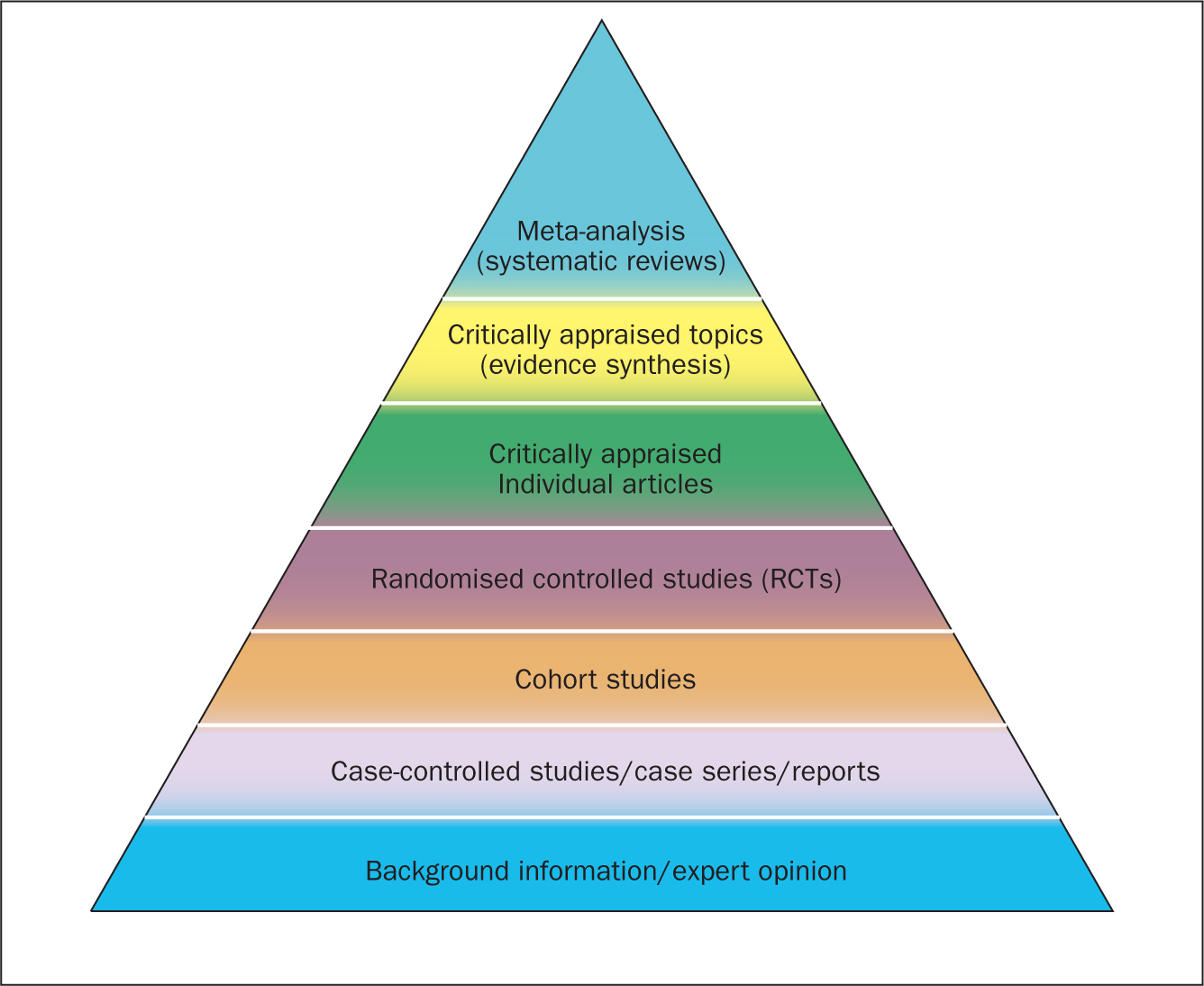

The evidence pyramid is often used to illustrate the development of evidence. At the base of the pyramid is animal research and laboratory studies – this is where ideas are first developed. As you progress up the pyramid the amount of information available decreases in volume, but increases in relevance to the clinical setting.

Meta-Analysis – systematic review that uses quantitative methods to synthesize and summarize the results.

Systematic Review – summary of the medical literature that uses explicit methods to perform a comprehensive literature search and critical appraisal of individual studies, and that uses appropriate statistical techniques to combine these valid studies.

Randomized Controlled Trial – Participants are randomly allocated into an experimental group or a control group and followed over time for the variables/outcomes of interest.

Cohort Study – Involves identification of two groups (cohorts) of patients, one which received the exposure of interest, and one which did not, and following these cohorts forward for the outcome of interest.

Case Control Study – study which involves identifying patients who have the outcome of interest (cases) and patients without the same outcome (controls), and looking back to see if they had the exposure of interest.

Case Series – report on a series of patients with an outcome of interest. No control group is involved.
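The meta-analysis definition above refers to quantitative methods for synthesizing results. One common approach is fixed-effect inverse-variance pooling, sketched minimally below. The sketch assumes that each study reports a log risk ratio with a standard error. The three studies and the function name `fixed_effect_pool` are hypothetical, and the guide does not prescribe this method.

```python
import math


def fixed_effect_pool(log_effects, std_errors):
    """Pool log-scale effect estimates with inverse-variance (fixed-effect) weights.

    Returns (pooled_log_effect, pooled_standard_error).
    """
    if not log_effects or len(log_effects) != len(std_errors):
        raise ValueError("need one standard error per effect estimate")
    # Each study is weighted by 1 / SE^2, so more precise studies count for more.
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, log_effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical studies: risk ratios converted to the log scale, with standard errors.
log_rr = [math.log(0.80), math.log(0.95), math.log(1.10)]
se = [0.20, 0.15, 0.25]

pooled, pooled_se = fixed_effect_pool(log_rr, se)
low, high = pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se
print(f"Pooled RR {math.exp(pooled):.2f} (95% CI {math.exp(low):.2f} to {math.exp(high):.2f})")
```

A random-effects model allows the true effect to vary between studies. It is often preferred when the included studies differ clinically or methodologically.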

- Levels of Evidence from The Centre for Evidence-Based Medicine

- The JBI Model of Evidence Based Healthcare

- How to Use the Evidence: Assessment and Application of Scientific Evidence From the National Health and Medical Research Council (NHMRC) of Australia. Book must be downloaded; not available to read online.

When searching for evidence to answer clinical questions, aim to identify the highest level of available evidence. Evidence hierarchies can help you strategically identify which resources to use for finding evidence, as well as which search results are most likely to be "best".

Image source: Evidence-Based Practice: Study Design from Duke University Medical Center Library & Archives. This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

The hierarchy of evidence (also known as the evidence-based pyramid) is depicted as a triangular representation of the levels of evidence with the strongest evidence at the top which progresses down through evidence with decreasing strength. At the top of the pyramid are research syntheses, such as Meta-Analyses and Systematic Reviews, the strongest forms of evidence. Below research syntheses are primary research studies progressing from experimental studies, such as Randomized Controlled Trials, to observational studies, such as Cohort Studies, Case-Control Studies, Cross-Sectional Studies, Case Series, and Case Reports. Non-Human Animal Studies and Laboratory Studies occupy the lowest level of evidence at the base of the pyramid.


- Last Updated: Nov 6, 2024 2:05 PM

- URL: https://guides.library.ucdavis.edu/systematic-reviews


- Volume 21, Issue 4

- New evidence pyramid


- M Hassan Murad

- Mouaz Alsawas

- Fares Alahdab (ORCID: http://orcid.org/0000-0001-5481-696X)

- Rochester, Minnesota, USA

- Correspondence to: Dr M Hassan Murad, Evidence-based Practice Center, Mayo Clinic, Rochester, MN 55905, USA; murad.mohammad{at}mayo.edu

https://doi.org/10.1136/ebmed-2016-110401


The first and earliest principle of evidence-based medicine indicated that a hierarchy of evidence exists. Not all evidence is the same. This principle became well known in the early 1990s as practising physicians learnt basic clinical epidemiology skills and started to appraise and apply evidence to their practice. Since evidence was described as a hierarchy, a compelling rationale for a pyramid was made. Evidence-based healthcare practitioners became familiar with this pyramid when reading the literature, applying evidence or teaching students.

Various versions of the evidence pyramid have been described, but all of them focused on showing weaker study designs at the bottom (basic science and case series), followed by case–control and cohort studies in the middle, then randomised controlled trials (RCTs), and, at the very top, systematic reviews and meta-analyses. This description is intuitive and likely correct in many instances. The placement of systematic reviews at the top has undergone several alterations in interpretation, but systematic reviews were still thought of as an item in a hierarchy.[1] Most versions of the pyramid clearly represented a hierarchy of internal validity (risk of bias). Some versions incorporated external validity (applicability) into the pyramid, either by placing N-of-1 trials above RCTs (because their results are most applicable to individual patients[2]) or by separating internal and external validity.[3]

Another version (the 6S pyramid) was also developed to describe the sources of evidence that evidence-based medicine (EBM) practitioners can use to answer foreground questions. It shows a hierarchy ranging from studies, synopses, syntheses, synopses of syntheses, summaries and systems.[4] This hierarchy may imply some sort of increasing validity and applicability. Its main purpose, however, is to emphasise that the lower sources of evidence in the hierarchy are least preferred in practice, because they require more expertise and time to identify, appraise and apply.

The traditional pyramid was deemed too simplistic at times, so the importance of leaving room for argument and counterargument about the methodological merit of different designs has been emphasised.[5] Other barriers challenged the placement of systematic reviews and meta-analyses at the top of the pyramid. For instance, heterogeneity (clinical, methodological or statistical) is an inherent limitation of meta-analyses that can be minimised or explained but never eliminated.[6] The methodological intricacies and dilemmas of systematic reviews could potentially result in uncertainty and error.[7] One evaluation of 163 meta-analyses demonstrated that estimates of treatment outcomes differed substantially depending on the analytical strategy used.[7] Therefore, in this perspective, we suggest two visual modifications to the pyramid to illustrate two contemporary methodological principles (figure 1). We provide the rationale and an example for each modification.
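Statistical heterogeneity can be made concrete. It is commonly quantified with Cochran's Q and the I² statistic, I² = (Q − df)/Q, truncated at zero. I² estimates the share of variation across studies that reflects real between-study differences rather than chance. The sketch below is a generic illustration on hypothetical data, not an analysis from this article, and the function name `heterogeneity` is an assumption.

```python
def heterogeneity(effects, std_errors):
    """Return Cochran's Q, its degrees of freedom, and I^2 (as a fraction)."""
    if len(effects) < 2 or len(effects) != len(std_errors):
        raise ValueError("need at least two studies, each with a standard error")
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    # Q: weighted sum of squared deviations from the fixed-effect pooled estimate.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of total variation attributed to between-study differences
    # rather than chance; truncated at zero when Q < df.
    i_squared = max(0.0, (q - df) / q) if q > 0 else 0.0
    return q, df, i_squared


# Hypothetical log risk ratios and standard errors from four studies.
q, df, i2 = heterogeneity([-0.40, -0.05, 0.10, -0.60], [0.15, 0.12, 0.20, 0.18])
print(f"Q = {q:.2f} on {df} df, I^2 = {i2:.0%}")
```

A high I² flags inconsistency that should be explored. It does not explain the source of that inconsistency.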


Figure 1. The proposed new evidence-based medicine pyramid. (A) The traditional pyramid. (B) Revising the pyramid: (1) lines separating the study designs become wavy (Grading of Recommendations Assessment, Development and Evaluation), (2) systematic reviews are ‘chopped off’ the pyramid. (C) The revised pyramid: systematic reviews are a lens through which evidence is viewed (applied).

Rationale for modification 1

In the early 2000s, the Grading of Recommendations Assessment, Development and Evaluation (GRADE) Working Group developed a framework that challenges the pyramid concept. In this framework, certainty in evidence is based on numerous factors, not solely on study design.[8] Study design alone appears to be an insufficient surrogate for risk of bias. Certain methodological limitations of a study, as well as imprecision, inconsistency and indirectness, are factors independent of study design that can affect the quality of evidence derived from any study design. For example, a meta-analysis of RCTs evaluating intensive glycaemic control in non-critically ill hospitalised patients showed a non-significant reduction in mortality (relative risk 0.95, 95% CI 0.72 to 1.25[9]). Allocation concealment and blinding were not adequate in most trials. The quality of this evidence is rated down because of the methodological limitations of the trials and imprecision (a wide CI that includes substantial benefit and harm). Hence, despite being based on five RCTs, such evidence should not be rated high in any pyramid. The quality of evidence can also be rated up. For example, we are quite certain about the benefits of hip replacement in a patient with disabling hip osteoarthritis. Hip replacement has not been tested in RCTs, yet the quality of this evidence is rated up despite the study design (non-randomised observational studies).[10]
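The glycaemic-control example reports a relative risk of 0.95 (95% CI 0.72 to 1.25). That interval runs from a 28% relative reduction to a 25% relative increase, so it includes appreciable benefit, appreciable harm and no effect. This is why the evidence is rated down for imprecision. The minimal sketch below computes a risk ratio and its log-scale (Wald) interval from a 2×2 table. The counts are hypothetical and are not the data from the cited meta-analysis, and the function names are illustrative.

```python
import math


def risk_ratio_ci(events_a, total_a, events_b, total_b, z=1.96):
    """Risk ratio of group A vs group B with a log-scale (Wald) confidence interval."""
    if min(events_a, events_b) == 0:
        raise ValueError("zero events: a continuity correction would be needed")
    rr = (events_a / total_a) / (events_b / total_b)
    # Standard error of log(RR) for two independent binomial proportions.
    se_log_rr = math.sqrt(1 / events_a - 1 / total_a + 1 / events_b - 1 / total_b)
    low = math.exp(math.log(rr) - z * se_log_rr)
    high = math.exp(math.log(rr) + z * se_log_rr)
    return rr, low, high


def spans_no_effect(low, high):
    """True if the interval includes RR = 1, i.e. it is compatible with no effect."""
    return low <= 1.0 <= high


# Hypothetical mortality counts (not the data from the cited meta-analysis).
rr, low, high = risk_ratio_ci(events_a=38, total_a=400, events_b=40, total_b=400)
print(f"RR {rr:.2f} (95% CI {low:.2f} to {high:.2f}); includes no effect: {spans_no_effect(low, high)}")
```

An interval can exclude 1 and still be imprecise if it remains wide. Judging imprecision therefore means looking at where the interval's limits fall, not only at whether it crosses 1.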

Rationale for modification 2

Another challenge to the notion of having systematic reviews at the top of the evidence pyramid relates to the framework presented in the Journal of the American Medical Association User's Guide on systematic reviews and meta-analysis. The Guide presented a two-step approach. First, the credibility of the process of a systematic review is evaluated (comprehensive literature search, rigorous study selection process, etc). If the systematic review is deemed sufficiently credible, a second step follows in which we evaluate the certainty in evidence based on the GRADE approach.[11] In other words, a meta-analysis of well-conducted RCTs at low risk of bias cannot be equated with a meta-analysis of observational studies at higher risk of bias. For example, a meta-analysis of 112 surgical case series showed that in patients with thoracic aortic transection, the mortality rate was significantly lower in patients who underwent endovascular repair, followed by open repair and non-operative management (9%, 19% and 46%, respectively, p<0.01). Clearly, this meta-analysis should not sit at the top of the pyramid alongside a meta-analysis of RCTs. After all, the evidence still consists of non-randomised studies and is likely subject to numerous confounders.

Therefore, the second modification to the pyramid is to remove systematic reviews from the top of the pyramid and use them as a lens through which other types of studies should be seen (ie, appraised and applied). The systematic review (the process of selecting the studies) and the meta-analysis (the statistical aggregation that produces a single effect size) are tools that stakeholders use to consume and apply the evidence.

Implications and limitations

Changing how stakeholders (patients, clinicians and other decision-makers) perceive systematic reviews and meta-analyses has important implications. For example, the American Heart Association considers evidence derived from meta-analyses to have a level ‘A’ (ie, warrants the most confidence). Re-evaluation of evidence using GRADE shows that level ‘A’ evidence could have been of high, moderate, low or very low quality.[12] The quality of evidence drives the strength of recommendation, which is one of the last translational steps of research and the most proximal to patient care.
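The four GRADE categories mentioned above (high, moderate, low, very low) follow a start-and-adjust logic. Evidence from randomised trials starts high and evidence from observational studies starts low. It is then rated down for concerns such as risk of bias, inconsistency, indirectness, imprecision or publication bias, and can be rated up, for example for a large effect. The sketch below reduces that judgement to arithmetic purely for illustration. Real GRADE ratings are structured judgements. The function `grade_certainty` and the number of levels used in each example are assumptions.

```python
LEVELS = ["very low", "low", "moderate", "high"]


def grade_certainty(randomised, levels_down=0, levels_up=0):
    """Simplified, illustrative GRADE-style rating (not an official algorithm).

    Randomised evidence starts at 'high', observational evidence at 'low'.
    levels_down is the total number of levels removed for serious concerns
    (GRADE allows one or two per concern); levels_up is the total added for
    factors such as a large effect.
    """
    if levels_down < 0 or levels_up < 0:
        raise ValueError("level adjustments must be non-negative")
    start = LEVELS.index("high") if randomised else LEVELS.index("low")
    score = start - levels_down + levels_up
    # Clamp to the four GRADE categories.
    return LEVELS[max(0, min(score, len(LEVELS) - 1))]


# Glycaemic-control example: RCTs rated down for risk of bias and imprecision.
print(grade_certainty(randomised=True, levels_down=2))   # low
# Hip-replacement example: observational studies rated up (two levels assumed).
print(grade_certainty(randomised=False, levels_up=2))    # high
```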

One of the limitations of all ‘pyramids’ and depictions of evidence hierarchy relates to the underpinning of such schemas. The construct of internal validity may have varying definitions, or be understood differently among evidence consumers. Another limitation is that considering systematic reviews and meta-analyses as tools to consume evidence may undermine their role in new discovery (eg, identifying a new side effect that was not demonstrated in individual studies[13]).

This pyramid can also be used as a teaching tool. EBM teachers can compare it with the existing pyramids to explain how certainty in the evidence (also called quality of evidence) is evaluated. It can be used to teach how evidence-based practitioners can appraise and apply systematic reviews in practice, and to demonstrate the evolution in EBM thinking and the modern understanding of certainty in evidence.

- Leibovici L

- Agoritsas T,

- Vandvik P,

- Neumann I, et al

- Resources for Evidence-Based Practice: The 6S Pyramid. Feb 18, 2016 4:58 PM. http://hsl.mcmaster.libguides.com/ebm

- Vandenbroucke JP

- Berlin JA,

- Dechartres A,

- Altman DG,

- Trinquart L, et al

- Guyatt GH,

- Vist GE, et al

- Coburn JA,

- Coto-Yglesias F, et al

- Sultan S, et al

- Montori VM,

- Ioannidis JP, et al

- Altayar O,

- Bennett M, et al

- Nissen SE

Contributors MHM conceived the idea and drafted the manuscript. FA helped draft the manuscript and designed the new pyramid. MA and NA helped draft the manuscript.

Competing interests None declared.

Provenance and peer review Not commissioned; externally peer reviewed.

Linked Articles

- Editorial: Pyramids are guides not rules: the evolution of the evidence pyramid. Terrence Shaneyfelt. BMJ Evidence-Based Medicine 2016;21:121-122. Published Online First: 12 Jul 2016. doi: 10.1136/ebmed-2016-110498

- Perspective: EBHC pyramid 5.0 for accessing preappraised evidence and guidance. Brian S Alper, R Brian Haynes. BMJ Evidence-Based Medicine 2016;21:123-125. Published Online First: 20 Jun 2016. doi: 10.1136/ebmed-2016-110447


- Walden University


Evidence-Based Research: Levels of Evidence Pyramid

Introduction

One way to organize the different types of evidence involved in evidence-based practice research is the levels of evidence pyramid. The pyramid includes a variety of evidence types and levels, listed here from the top of the pyramid to the bottom:

- systematic reviews

- critically-appraised topics

- critically-appraised individual articles

- randomized controlled trials

- cohort studies

- case-control studies, case series, and case reports

- Background information, expert opinion

Levels of evidence pyramid

The levels of evidence pyramid provides a way to visualize both the quality of evidence and the amount of evidence available. For example, systematic reviews are at the top of the pyramid, meaning they are both the highest level of evidence and the least common. As you go down the pyramid, the amount of evidence will increase as the quality of the evidence decreases.


EBM Pyramid and EBM Page Generator, copyright 2006 Trustees of Dartmouth College and Yale University. All Rights Reserved. Produced by Jan Glover, David Izzo, Karen Odato and Lei Wang.

Filtered Resources

Filtered resources appraise the quality of studies and often make recommendations for practice. The main types of filtered resources in evidence-based practice are:

- systematic reviews

- critically-appraised topics

- critically-appraised individual articles

Scroll down the page to the Systematic reviews, Critically-appraised topics, and Critically-appraised individual articles sections for links to resources where you can find each of these types of filtered information.

Systematic reviews

Authors of a systematic review ask a specific clinical question, perform a comprehensive literature review, eliminate the poorly done studies, and attempt to make practice recommendations based on the well-done studies. The systematic reviews at this level of the pyramid typically include only experimental, or quantitative, studies, and often include only randomized controlled trials.

You can find systematic reviews in these filtered databases:

- Cochrane Database of Systematic Reviews: Cochrane systematic reviews are considered the gold standard for systematic reviews. This database contains both systematic reviews and review protocols. To find only systematic reviews, select Cochrane Reviews in the Document Type box.

- JBI EBP Database (formerly Joanna Briggs Institute EBP Database): This database includes systematic reviews, evidence summaries, and best practice information sheets. To find only systematic reviews, click on Limits and then select Systematic Reviews in the Publication Types box. To see how to use the limit and find full text, please see our Joanna Briggs Institute Search Help page.

You can also find systematic reviews in this unfiltered database:

To learn more about finding systematic reviews, please see our guide:

- Filtered Resources: Systematic Reviews

Critically-appraised topics

Authors of critically-appraised topics evaluate and synthesize multiple research studies. Critically-appraised topics are like short systematic reviews focused on a particular topic.

You can find critically-appraised topics in these resources:

- Annual Reviews: This collection offers comprehensive, timely collections of critical reviews written by leading scientists. To find reviews on your topic, use the search box in the upper-right corner.

- Guideline Central: This free database offers quick-reference guideline summaries organized by a new non-profit initiative that aims to fill the gap left by the sudden closure of AHRQ’s National Guideline Clearinghouse (NGC).

- JBI EBP Database (formerly Joanna Briggs Institute EBP Database): To find critically-appraised topics in JBI, click on Limits and then select Evidence Summaries from the Publication Types box. To see how to use the limit and find full text, please see our Joanna Briggs Institute Search Help page.

- National Institute for Health and Care Excellence (NICE): Evidence-based recommendations for health and care in England.

To learn more about finding critically-appraised topics, please see our guide:

- Filtered Resources: Critically-Appraised Topics

Critically-appraised individual articles

Authors of critically-appraised individual articles evaluate and synopsize individual research studies.

You can find critically-appraised individual articles in these resources:

- EvidenceAlerts: Quality articles from over 120 clinical journals are selected by research staff and then rated for clinical relevance and interest by an international group of physicians. Note: You must create a free account to search EvidenceAlerts.

- ACP Journal Club: This journal publishes reviews of research on the care of adults and adolescents. You can either browse this journal or use the Search within this publication feature.

- Evidence-Based Nursing: This journal reviews research studies that are relevant to best nursing practice. You can either browse individual issues or use the search box in the upper-right corner.

To learn more about finding critically-appraised individual articles, please see our guide:

- Filtered Resources: Critically-Appraised Individual Articles

Unfiltered resources

You may not always be able to find information on your topic in the filtered literature. When this happens, you'll need to search the primary or unfiltered literature. Keep in mind that with unfiltered resources, you take on the role of reviewing what you find to make sure it is valid and reliable.

Note: You can also find systematic reviews and other filtered resources in these unfiltered databases.

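The Levels of Evidence Pyramid includes unfiltered study types in this order of evidence, from higher to lower:

- randomized controlled trials

- cohort studies

- case-control studies, case series, and case reports

You can search for each of these types of evidence in the following databases:

- TRIP database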

Background information & expert opinion

Background information and expert opinions are not necessarily backed by research studies. They include point-of-care resources, textbooks, conference proceedings, etc.

- Family Physicians Inquiries Network: Clinical Inquiries. Provides answers to clinical questions using a structured search, critical appraisal, authoritative recommendations, clinical perspective, and rigorous peer review. Clinical Inquiries deliver best evidence for point-of-care use.

- Harrison, T. R., & Fauci, A. S. (2009). Harrison's Manual of Medicine. New York: McGraw-Hill Professional. Contains the clinical portions of Harrison's Principles of Internal Medicine.

- Lippincott manual of nursing practice (8th ed.). (2006). Philadelphia, PA: Lippincott Williams & Wilkins. Provides background information on clinical nursing practice.

- Medscape: Drugs & Diseases. An open-access, point-of-care medical reference that includes clinical information from top physicians and pharmacists in the United States and worldwide.

- Virginia Henderson Global Nursing e-Repository: An open-access repository that contains works by nurses and is sponsored by Sigma Theta Tau International, the Honor Society of Nursing. Note: This resource contains both expert opinion and evidence-based practice articles.


Walden University is a member of Adtalem Global Education, Inc. www.adtalem.com Walden University is certified to operate by SCHEV © 2024 Walden University LLC. All rights reserved.


Bashir Y, Conlon KC. Step by step guide to do a systematic review and meta-analysis for medical professionals. Ir J Med Sci. 2018; 187:(2)447-452 https://doi.org/10.1007/s11845-017-1663-3

Bettany-Saltikov J. How to do a systematic literature review in nursing: a step-by-step guide. Maidenhead: Open University Press; 2012

Bowers D, House A, Owens D. Getting started in health research. Oxford: Wiley-Blackwell; 2011

Hierarchies of evidence. 2016. http://cjblunt.com/hierarchies-evidence (accessed 23 July 2019)

Braun V, Clarke V. Using thematic analysis in psychology. Qualitative Research in Psychology. 2006; 3:(2)77-101 https://doi.org/10.1191/1478088706qp063oa

Developing a framework for critiquing health research. 2005. https://tinyurl.com/y3nulqms (accessed 22 July 2019)

Cognetti G, Grossi L, Lucon A, Solimini R. Information retrieval for the Cochrane systematic reviews: the case of breast cancer surgery. Ann Ist Super Sanita. 2015; 51:(1)34-39 https://doi.org/10.4415/ANN_15_01_07

Dixon-Woods M, Cavers D, Agarwal S et al. Conducting a critical interpretive synthesis of the literature on access to healthcare by vulnerable groups. BMC Med Res Methodol. 2006; 6:(1) https://doi.org/10.1186/1471-2288-6-35

Guyatt GH, Sackett DL, Sinclair JC et al. Users' guides to the medical literature IX. A method for grading health care recommendations. JAMA. 1995; 274:(22)1800-1804 https://doi.org/10.1001/jama.1995.03530220066035

Hanley T, Cutts LA. What is a systematic review? Counselling Psychology Review. 2013; 28:(4)3-6

Cochrane handbook for systematic reviews of interventions. Version 5.1.0. 2011. https://handbook-5-1.cochrane.org (accessed 23 July 2019)

Jahan N, Naveed S, Zeshan M, Tahir MA. How to conduct a systematic review: a narrative literature review. Cureus. 2016; 8:(11) https://doi.org/10.7759/cureus.864

Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977; 33:(1)159-174

Methley AM, Campbell S, Chew-Graham C, McNally R, Cheraghi-Sohi S. PICO, PICOS and SPIDER: a comparison study of specificity and sensitivity in three search tools for qualitative systematic reviews. BMC Health Serv Res. 2014; 14:(1) https://doi.org/10.1186/s12913-014-0579-0

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009; 6:(7) https://doi.org/10.1371/journal.pmed.1000097

Mueller J, Jay C, Harper S, Davies A, Vega J, Todd C. Web use for symptom appraisal of physical health conditions: a systematic review. J Med Internet Res. 2017; 19:(6) https://doi.org/10.2196/jmir.6755

Murad MH, Asi N, Alsawas M, Alahdab F. New evidence pyramid. Evid Based Med. 2016; 21:(4)125-127 https://doi.org/10.1136/ebmed-2016-110401

National Institute for Health and Care Excellence. Methods for the development of NICE public health guidance. 2012. http://nice.org.uk/process/pmg4 (accessed 22 July 2019)

Sambunjak D, Franic M. Steps in the undertaking of a systematic review in orthopaedic surgery. Int Orthop. 2012; 36:(3)477-484 https://doi.org/10.1007/s00264-011-1460-y

Siddaway AP, Wood AM, Hedges LV. How to do a systematic review: a best practice guide for conducting and reporting narrative reviews, meta-analyses, and meta-syntheses. Annu Rev Psychol. 2019; 70:747-770 https://doi.org/10.1146/annurev-psych-010418-102803

Thomas J, Harden A. Methods for the thematic synthesis of qualitative research in systematic reviews. BMC Med Res Methodol. 2008; 8:(1) https://doi.org/10.1186/1471-2288-8-45

Wallace J, Nwosu B, Clarke M. Barriers to the uptake of evidence from systematic reviews and meta-analyses: a systematic review of decision makers' perceptions. BMJ Open. 2012; 2:(5) https://doi.org/10.1136/bmjopen-2012-001220

Carrying out systematic literature reviews: an introduction

Alan Davies

Lecturer in Health Data Science, School of Health Sciences, University of Manchester, Manchester


Systematic reviews provide a synthesis of evidence for a specific topic of interest, summarising the results of multiple studies to aid in clinical decisions and resource allocation. They remain among the best forms of evidence, and they reduce the bias inherent in other methods. A solid understanding of the systematic review process can benefit nurses who carry out such reviews, as well as those who make decisions based on them. This article presents an overview of the main steps involved in carrying out a systematic review, including some of the common tools and frameworks used in this area. It should provide a good starting point for those who are considering embarking on such work. It should also help readers of such reviews understand the main review components, so that they can appraise the quality of a review that may be used to inform subsequent clinical decision making.

Since their inception in the late 1970s, systematic reviews have gained influence in the health professions (Hanley and Cutts, 2013). Systematic reviews and meta-analyses are considered to be the most credible and authoritative sources of evidence available (Cognetti et al, 2015) and are regarded as the pinnacle of evidence in the various ‘hierarchies of evidence’. Reviews published in the Cochrane Library (https://www.cochranelibrary.com) are widely considered to be the ‘gold standard’. Since Guyatt et al (1995) presented a users' guide to medical literature for the Evidence-Based Medicine Working Group, various hierarchies of evidence have been proposed. Figure 1 illustrates an example.

Systematic reviews can be qualitative or quantitative. One criticism levelled at hierarchies such as these is that qualitative research is often positioned towards, or even at, the bottom of the pyramid, which implies that it has little evidential value. This may stem from traditional concerns about the quality of some qualitative work. However, it is now widely recognised that both quantitative and qualitative research methodologies have a valuable part to play in answering research questions. This is reflected in the National Institute for Health and Care Excellence (NICE) information on methods for developing public health guidance: the NICE (2012) guidance highlights how qualitative and quantitative study designs can be used to answer different research questions. In a revised version of the hierarchy-of-evidence pyramid, the systematic review is considered the lens through which the evidence is viewed, rather than sitting at the top of the pyramid (Murad et al, 2016).

Both quantitative and qualitative research methodologies are sometimes combined in a single review. According to the Cochrane review handbook ( Higgins and Green, 2011 ), regardless of type, reviews should contain certain features, including:

- Clearly stated objectives

- Predefined eligibility criteria for inclusion or exclusion of studies in the review

- A reproducible and clearly stated methodology

- Validity assessment of included studies (eg quality, risk, bias etc).

The main stages of carrying out a systematic review are summarised in Box 1.

Formulating the research question

Before undertaking a systematic review, a research question should first be formulated (Bashir and Conlon, 2018). A number of tools/frameworks (Table 1) support this process, including the PICO/PICOS, PEO and SPIDER criteria (Bowers et al, 2011). These frameworks are designed to help break the question down into relevant subcomponents and map them to concepts, in order to derive formalised search criteria (Methley et al, 2014). This stage is essential for finding literature relevant to the question (Jahan et al, 2016).

It is advisable to first check that the review you plan to carry out has not already been undertaken. You can optionally register your review with an international register of prospective reviews called PROSPERO, although this is not essential for publication. This is done to help you and others to locate work and see what reviews have already been carried out in the same area. It also prevents needless duplication and instead encourages building on existing work ( Bashir and Conlon, 2018 ).

A study ( Methley et al, 2014 ) that compared PICO, PICOS and SPIDER in relation to sensitivity and specificity recommended that the PICO tool be used for a comprehensive search and the PICOS tool when time/resources are limited.

The use of the SPIDER tool was not recommended due to the risk of missing relevant papers. It was, however, found to increase specificity.
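The trade-off described above can be illustrated by scoring searches against a reference set of records already known to be relevant. The sketch below computes sensitivity, the share of relevant records that a search retrieves. It also computes precision, the share of retrieved records that are relevant, which is what "specificity" often means in comparisons of search tools. The record IDs and the function name `search_performance` are hypothetical.

```python
def search_performance(retrieved, relevant):
    """Return (sensitivity, precision) of a search against a known set of relevant records."""
    retrieved, relevant = set(retrieved), set(relevant)
    found = retrieved & relevant
    # Sensitivity (recall): share of all relevant records that the search found.
    sensitivity = len(found) / len(relevant) if relevant else 0.0
    # Precision: share of retrieved records that are relevant.
    precision = len(found) / len(retrieved) if retrieved else 0.0
    return sensitivity, precision


# Hypothetical record IDs: a reference set and two searches.
relevant = {1, 2, 3, 4, 5}
broad_search = {1, 2, 3, 4, 5, 6, 7, 8, 9, 10}
narrow_search = {1, 2, 6}

print(search_performance(broad_search, relevant))   # (1.0, 0.5)
print(search_performance(narrow_search, relevant))  # (0.4, 0.6666666666666666)
```

The broad search finds every relevant record but returns more to screen. The narrow search is more precise but misses relevant papers, which is the risk the text attributes to SPIDER.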

These tools/frameworks can help those carrying out reviews to structure research questions and define key concepts in order to efficiently identify relevant literature and summarise the main objective of the review ( Jahan et al, 2016 ). A possible research question could be: Is paracetamol of benefit to people who have just had an operation? The following examples highlight how using a framework may help to refine the question:

- What form of paracetamol? (eg, oral/intravenous/suppository)

- Is the dosage important?

- What is the patient population? (eg, children, adults, Europeans)

- What type of operation? (eg, tonsillectomy, appendectomy)

- What does benefit mean? (eg, reduce post-operative pyrexia, analgesia).

An example of a more refined research question could be: Is oral paracetamol effective in reducing pain following cardiac surgery for adult patients? A number of concepts for each element will need to be specified. There will also be a number of synonyms for these concepts ( Table 2 ).

Table 2 shows an example of concepts used to define a search strategy using the PICO statement. Even this dummy example shows that many concepts require mapping, and that considerable thought is needed to capture ‘good’ search criteria. Consideration should be given to the various terms used to describe the heart, such as cardiac, cardiothoracic, myocardial and myocardium, and to the different names used for drugs, such as the equivalent name for paracetamol in other countries and regions (eg acetaminophen in North America), as well as the various brand names. Defining good search criteria is an important skill that requires a lot of practice. A high-quality review gives details of its search criteria, enabling the reader to understand how the authors arrived at them. Specific, well-defined search criteria also aid the reproducibility of a review.
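The usual way concept lists become a database search string (synonyms joined with OR inside each PICO element, elements joined with AND) can be sketched in Python. This is a minimal illustration under stated assumptions: the synonym lists are hypothetical examples for the refined paracetamol question rather than a validated strategy, and wrapping phrases in double quotes is a common convention that should be checked against each database's own guide.

```python
def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(concepts: dict[str, list[str]]) -> str:
    """OR the synonyms inside each PICO element, then AND the elements together.

    Elements with no terms (e.g. no explicit comparator) are skipped.
    """
    blocks = []
    for synonyms in concepts.values():
        if synonyms:
            blocks.append("(" + " OR ".join(quote(t) for t in synonyms) + ")")
    return " AND ".join(blocks)


# Hypothetical concept lists for: "Is oral paracetamol effective in reducing
# pain following cardiac surgery for adult patients?"
concepts = {
    "population": ["cardiac surgery", "cardiothoracic surgery", "heart surgery"],
    "intervention": ["paracetamol", "acetaminophen"],
    "comparison": [],
    "outcome": ["pain", "analgesia"],
}

print(build_query(concepts))
# ("cardiac surgery" OR "cardiothoracic surgery" OR "heart surgery") AND (paracetamol OR acetaminophen) AND (pain OR analgesia)
```

Further elements, such as terms for adult patients or the oral route, could be added as extra dictionary entries in the same way; each new entry narrows the search.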

Search criteria

Before the search for papers and other documents can begin, it is important to define explicitly the eligibility criteria that determine whether a source is relevant to the review (Hanley and Cutts, 2013). A number of databases can be searched for medical/health literature, including those shown in Table 3.

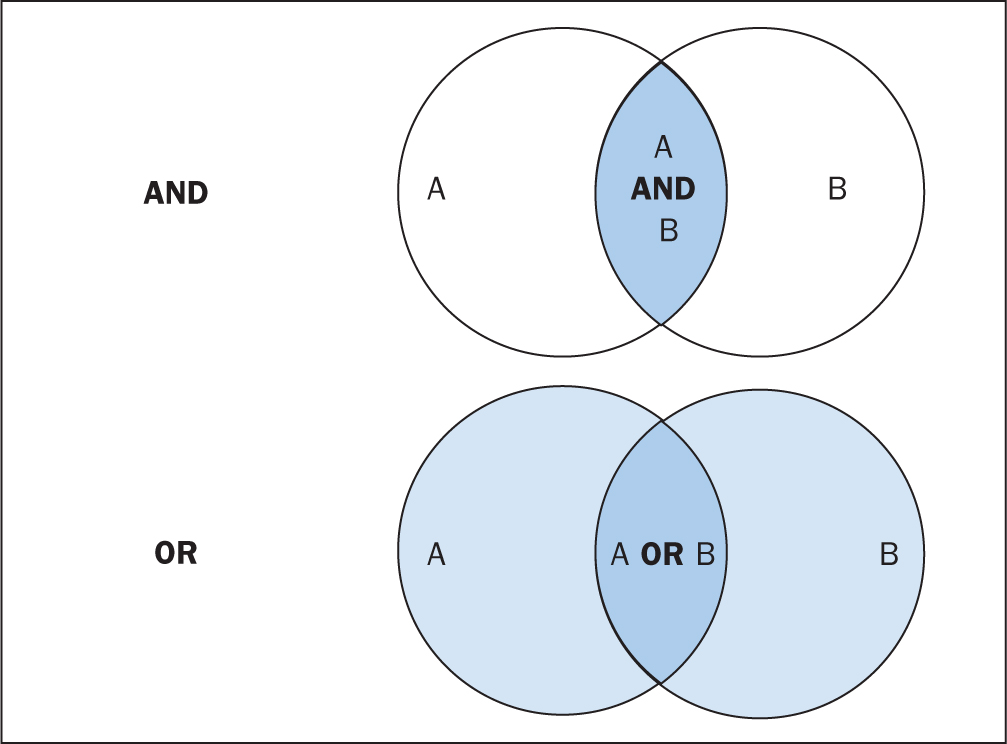

The various databases can be searched using common Boolean operators to combine or exclude search terms (ie AND, OR, NOT) (Figure 2).

Although most literature databases use similar operators, it is necessary to consult the individual database guides, because there are key differences between some of them. Table 4 details some of the common operators and wildcards used in the databases for searching. When developing search criteria, it is a good idea to check concepts against synonyms, as well as abbreviations, acronyms and plural and singular variations (Cognetti et al, 2015). Reading some key papers in the area, paying attention to their key words and other terms used in their abstracts, and looking through the reference lists/bibliographies of papers can also help to ensure that you incorporate relevant terms. Medical Subject Headings (MeSH), which the National Library of Medicine (NLM) (https://www.nlm.nih.gov/mesh/meshhome.html) uses to provide hierarchical biomedical index terms for NLM databases (Medline and PubMed), should also be explored and included in relevant search strategies.
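To see how truncation and wildcards capture spelling and plural variants, Python's standard `fnmatch` module can emulate them against a small word list. This is a sketch under an explicit assumption: here `*` matches zero or more characters and `?` exactly one, which is how `fnmatch` behaves, but the symbols and their meanings vary between databases, so Table 4 and the individual database guides remain the authority.

```python
import fnmatch


def expand(pattern: str, vocabulary: list[str]) -> list[str]:
    """Return the words in `vocabulary` matched by a truncation/wildcard pattern.

    Assumes '*' = zero or more characters and '?' = exactly one character
    (fnmatch semantics); real databases may define these differently.
    Matching is case-insensitive.
    """
    pattern = pattern.lower()
    return [w for w in vocabulary if fnmatch.fnmatchcase(w.lower(), pattern)]


# Hypothetical index terms
vocabulary = ["child", "children", "childhood", "chill",
              "randomised", "randomized", "randomly"]

print(expand("child*", vocabulary))      # ['child', 'children', 'childhood']
print(expand("randomi?ed", vocabulary))  # ['randomised', 'randomized']
```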

Searching the ‘grey literature’ is also important for reducing publication bias. Studies with positive, statistically significant results are more likely to be published than those without, which creates a bias inherent in the published literature. This bias can, to some degree, be mitigated by including results from the so-called grey literature, including unpublished work, abstracts, conference proceedings and PhD theses (Higgins and Green, 2011; Bettany-Saltikov, 2012; Cognetti et al, 2015). Biases in a systematic review can lead to the results being overestimated or underestimated (Jahan et al, 2016).

An example search strategy from a published review looking at web use for the appraisal of physical health conditions can be seen in Box 2. High-quality reviews usually detail which databases were searched and the number of items retrieved from each.

A balance between high recall (sensitivity) and high precision is often required in order to produce the best results. An overly sensitive search can return too much noise and too many results to screen, whereas an overly specific one risks missing important studies (Cognetti et al, 2015). Following a search, the exported citations can be added to citation management software (such as Mendeley or EndNote) and duplicates removed.
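Citation managers have their own duplicate-detection tools, but the underlying idea can be sketched in a few lines. This minimal example assumes each exported record is a dictionary with `title`, `doi` and `source` keys (hypothetical field names); it treats two records as duplicates when their normalised DOIs or normalised titles match. The DOIs use the `10.1000` example prefix and are not real papers. Exact title matching will still miss near-duplicates with different wording, so manual checking remains necessary.

```python
import re
from typing import Optional


def normalise_doi(doi: Optional[str]) -> str:
    """Lower-case a DOI and strip any resolver prefix (DOIs are case-insensitive)."""
    doi = (doi or "").strip().lower()
    return re.sub(r"^https?://(dx\.)?doi\.org/", "", doi)


def normalise_title(title: str) -> str:
    """Keep only lower-case letters and digits so punctuation and case differences vanish."""
    return re.sub(r"[^a-z0-9]", "", title.lower())


def remove_duplicates(records: list[dict]) -> list[dict]:
    """Keep the first record for each DOI or normalised title, in input order."""
    seen_dois: set = set()
    seen_titles: set = set()
    unique = []
    for rec in records:
        doi = normalise_doi(rec.get("doi"))
        title = normalise_title(rec.get("title", ""))
        if (doi and doi in seen_dois) or (title and title in seen_titles):
            continue  # duplicate of a record already kept
        unique.append(rec)
        if doi:
            seen_dois.add(doi)
        if title:
            seen_titles.add(title)
    return unique


# Hypothetical exports from three databases
records = [
    {"title": "Oral paracetamol after cardiac surgery", "doi": "10.1000/xyz123", "source": "MEDLINE"},
    {"title": "Oral Paracetamol After Cardiac Surgery.", "doi": "https://doi.org/10.1000/XYZ123", "source": "Embase"},
    {"title": "Oral paracetamol after cardiac surgery", "doi": None, "source": "CINAHL"},
    {"title": "Intravenous paracetamol in tonsillectomy", "doi": None, "source": "CINAHL"},
]

for rec in remove_duplicates(records):
    print(rec["source"], "|", rec["title"])
```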

Title and abstract screening

Initial screening begins with the titles and abstracts of articles being read, and articles being included in or excluded from the review based on their relevance. This is usually carried out by at least two researchers to reduce bias (Bashir and Conlon, 2018). After screening, any disagreements should be resolved by discussion, or by an additional researcher casting the deciding vote (Bashir and Conlon, 2018). Statistics for inter-rater reliability exist and can be reported, such as percentage agreement or Cohen's kappa (Box 3) for two reviewers and Fleiss' kappa for more than two reviewers. Agreement can depend on the background and knowledge of the researchers and the clarity of the inclusion and exclusion criteria. This highlights the importance of providing clear, well-defined inclusion criteria that are easy for other researchers to follow.
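Percentage agreement and Cohen's kappa for two screeners can be computed directly from their include/exclude decisions. The sketch below uses the standard formula kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and p_e is the agreement expected by chance from each reviewer's marginal proportions; the decisions are hypothetical, and Fleiss' kappa for more than two reviewers is not shown.

```python
from collections import Counter


def percent_agreement(a: list[str], b: list[str]) -> float:
    """Proportion of records on which both reviewers made the same decision."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Cohen's kappa for two reviewers: (p_o - p_e) / (1 - p_e)."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty lists of decisions")
    n = len(a)
    p_o = percent_agreement(a, b)
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: for each category, the product of both reviewers' marginal proportions.
    p_e = sum(count_a[c] * count_b[c] for c in set(a) | set(b)) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when both reviewers use one identical category")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical title/abstract decisions for ten records
reviewer_1 = ["include", "include", "exclude", "exclude", "exclude",
              "include", "exclude", "exclude", "include", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude", "exclude",
              "include", "exclude", "include", "include", "exclude"]

print(percent_agreement(reviewer_1, reviewer_2))       # 0.8
print(round(cohens_kappa(reviewer_1, reviewer_2), 3))  # 0.583
```

Here the reviewers agree on 80% of records, but because roughly half of that agreement would be expected by chance (p_e = 0.52), kappa is noticeably lower than the raw percentage.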

Full-text review

Following title and abstract screening, the remaining articles/sources are screened in the same way, but this time the full texts are read in their entirety and included or excluded based on their relevance. Reasons for exclusion are usually recorded and reported. Extraction of the specific details of the studies can begin once the final set of papers is determined.

Data extraction

At this stage, the full-text papers are read and compared against the inclusion criteria of the review. Data extraction sheets are forms created to extract specific data about each study (Jahan et al, 2016) and to ensure that data are extracted in a uniform and structured manner. Extraction sheets can differ between quantitative and qualitative reviews. For quantitative reviews they normally include details of the study's population, design, sample size, intervention, comparisons and outcomes (Bettany-Saltikov, 2012; Mueller et al, 2017).
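A quantitative extraction sheet can be modelled as a fixed record type so that every reviewer fills in the same fields. The sketch below is one possible layout, assuming hypothetical field names that mirror the list above (population, design, sample size, intervention, comparison, outcomes); the example study is invented. Exporting to CSV keeps the sheet usable in a spreadsheet.

```python
import csv
import io
from dataclasses import asdict, dataclass, field, fields


@dataclass
class ExtractionRecord:
    """One row of a quantitative data extraction sheet (hypothetical field names)."""
    study_id: str
    population: str
    design: str
    sample_size: int
    intervention: str
    comparison: str
    outcomes: list[str] = field(default_factory=list)
    notes: str = ""

    def __post_init__(self) -> None:
        # Basic validation helps keep entries uniform across reviewers.
        if self.sample_size <= 0:
            raise ValueError("sample_size must be a positive integer")


def to_csv(records: list[ExtractionRecord]) -> str:
    """Serialise records to CSV text with one column per field."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(ExtractionRecord)])
    writer.writeheader()
    for rec in records:
        row = asdict(rec)
        row["outcomes"] = "; ".join(rec.outcomes)  # flatten the list into one cell
        writer.writerow(row)
    return buffer.getvalue()


record = ExtractionRecord(
    study_id="Study A (hypothetical)",
    population="Adults after cardiac surgery",
    design="RCT",
    sample_size=120,
    intervention="Oral paracetamol",
    comparison="Placebo",
    outcomes=["Pain score at 24 h", "Rescue opioid use"],
)
print(to_csv([record]))
```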

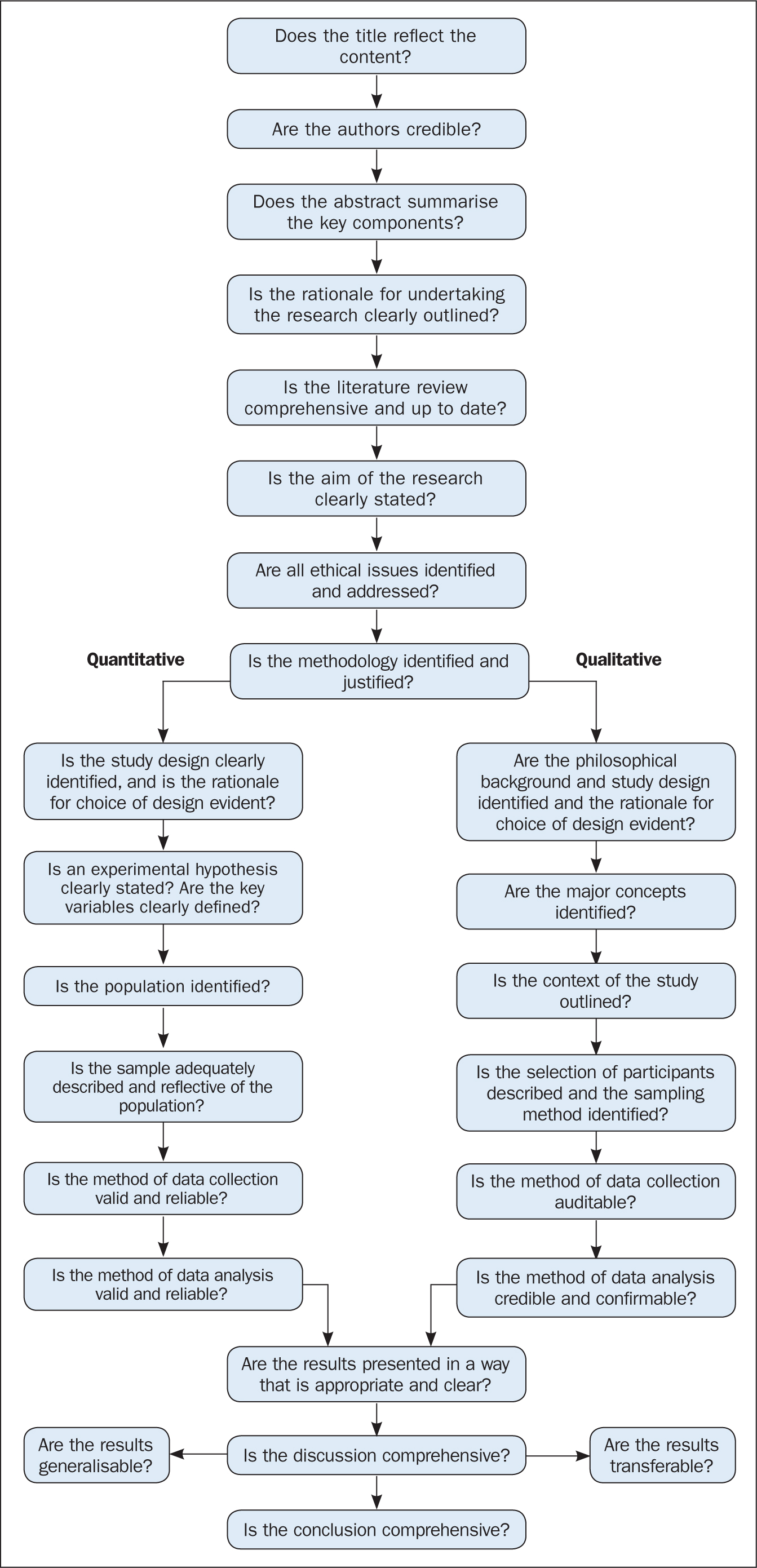

Quality appraisal

The quality of the studies used in the review should also be appraised. Caldwell et al (2005) discussed the need for a health research evaluation framework that could be used to evaluate both qualitative and quantitative work. The resulting framework uses features common to both research methodologies, as well as those that differ (Caldwell et al, 2005; Dixon-Woods et al, 2006). Figure 3 details the research critique framework. Other quality appraisal methods exist, such as those presented in Box 4. Quality appraisal can also be used to weight the evidence from studies; for example, more emphasis can be placed on the results of large randomised controlled trials (RCTs) than on those of trials with small sample sizes. The quality of a study can also be used as a factor for exclusion and can be specified in the inclusion/exclusion criteria. Quality appraisal is an important step that needs to be undertaken before conclusions about the body of evidence can be drawn (Sambunjak and Franic, 2012). It is also important to note that the quality of the research carried out in the studies differs from the quality of how those studies were reported (Sambunjak and Franic, 2012).

Quality appraisal differs for qualitative and quantitative studies. For quantitative studies it usually focuses on internal and external validity, such as how well the study has been designed and analysed, and the generalisability of its findings. Qualitative work, on the other hand, is often evaluated in terms of trustworthiness and authenticity, as well as how transferable the findings may be (Bettany-Saltikov, 2012; Bashir and Conlon, 2018; Siddaway et al, 2019).

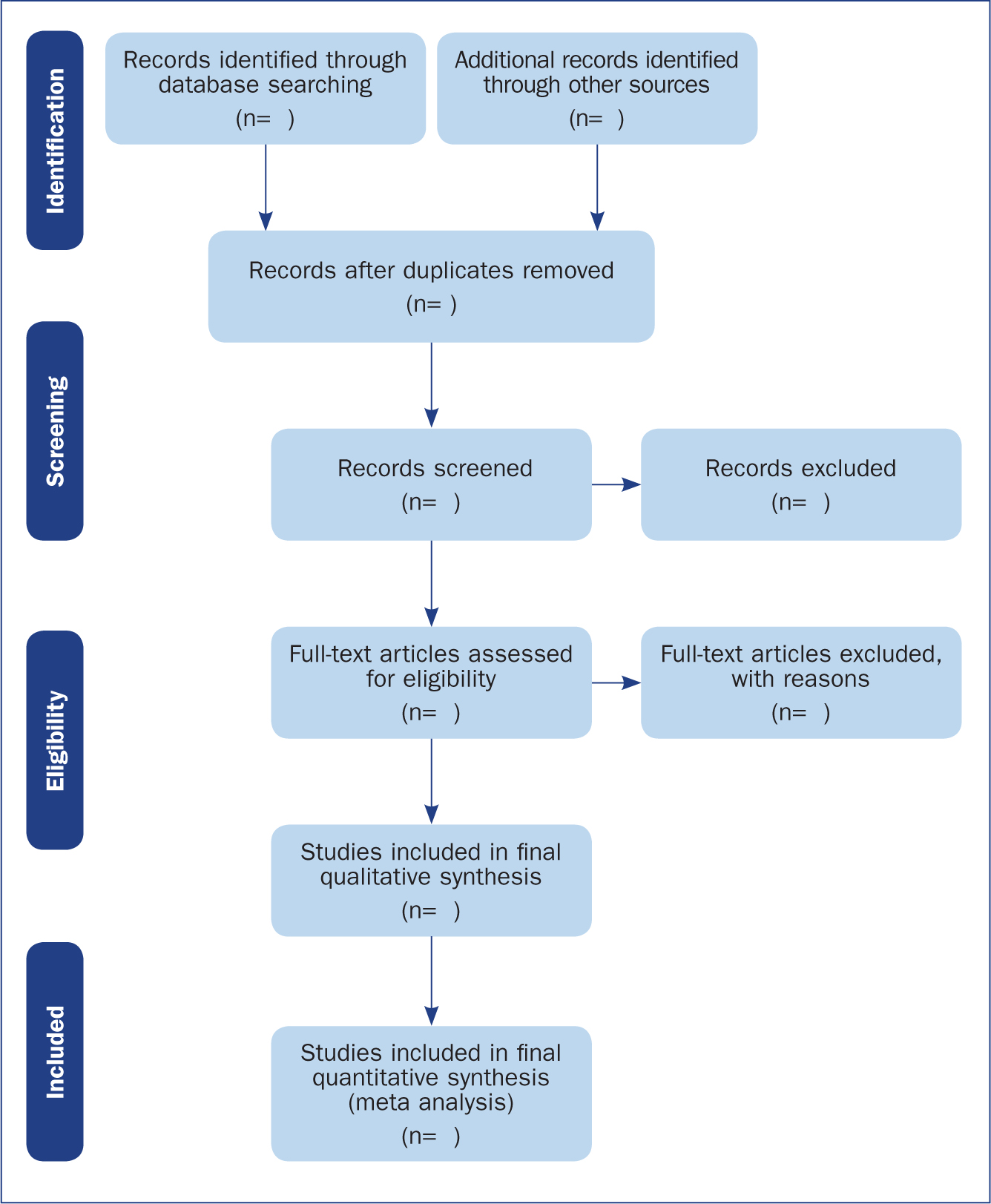

Reporting a review (the PRISMA statement)

The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement provides a reporting structure for systematic reviews and meta-analyses, and consists of a checklist and a flow diagram (Figure 4). PRISMA diagrams are often included in systematic reviews to show the number of papers/sources included and excluded at each of the four main stages of the review: identification of potential sources, screening by title and abstract, determination of eligibility, and final inclusion.
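The numbers in a PRISMA-style flow diagram follow from simple bookkeeping, and checking that they add up is a quick way to catch reporting errors. This is a simplified four-stage sketch with hypothetical counts; the official PRISMA templates include further boxes (for example, for reports that could not be retrieved), which would need extra fields.

```python
def prisma_counts(records_per_database: dict[str, int],
                  duplicates_removed: int,
                  excluded_at_screening: int,
                  full_text_exclusions: dict[str, int]) -> dict[str, int]:
    """Derive the numbers for a simplified four-stage PRISMA-style flow diagram."""
    identified = sum(records_per_database.values())
    screened = identified - duplicates_removed
    full_text_assessed = screened - excluded_at_screening
    excluded_full_text = sum(full_text_exclusions.values())  # reasons are reported separately
    included = full_text_assessed - excluded_full_text
    if min(screened, full_text_assessed, included) < 0:
        raise ValueError("stage counts are inconsistent")
    return {
        "identified": identified,
        "screened": screened,
        "full_text_assessed": full_text_assessed,
        "excluded_full_text": excluded_full_text,
        "included": included,
    }


# Hypothetical review
counts = prisma_counts(
    records_per_database={"MEDLINE": 420, "CINAHL": 180, "Embase": 300},
    duplicates_removed=250,
    excluded_at_screening=590,
    full_text_exclusions={"wrong population": 20, "wrong outcome": 12, "not an RCT": 10},
)
print(counts)
```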

Data synthesis

The combined results of the screened studies can be analysed qualitatively by grouping them together under themes and subthemes, often referred to as meta-synthesis or meta-ethnography (Siddaway et al, 2019). Sometimes this is not done and a summary of the literature found is presented instead. When the findings are synthesised, they are usually grouped into themes derived by noting commonalities among the included studies. Inductive (bottom-up) thematic analysis is frequently used for this purpose; it works by identifying themes (essentially repeating patterns) in the data, and can include a set of higher-level and related subthemes (Braun and Clarke, 2012). Thomas and Harden (2008) provide examples of the use of thematic synthesis in systematic reviews, and Braun and Clarke (2012) give an excellent introduction to thematic analysis.

The results of the review should contain details of the search strategy used (including search terms), the databases searched (and the number of items retrieved), summaries of the included studies and an overall synthesis of the results (Bettany-Saltikov, 2012). Finally, conclusions should be drawn about the results and the limitations of the included studies (Jahan et al, 2016). Another method for synthesising data in a systematic review is meta-analysis.

Limitations of systematic reviews

Despite the many benefits of carrying out systematic reviews highlighted throughout this article, there remain a number of disadvantages. In particular, not all stages of the review process are followed rigorously, and in some cases some stages are not followed at all. This can lead to poor-quality reviews that are difficult or impossible to replicate. There are also barriers to the use of evidence produced by reviews, including (Wallace et al, 2012):

- Lack of awareness and familiarity with reviews

- Lack of access

- Lack of direct usefulness/applicability.

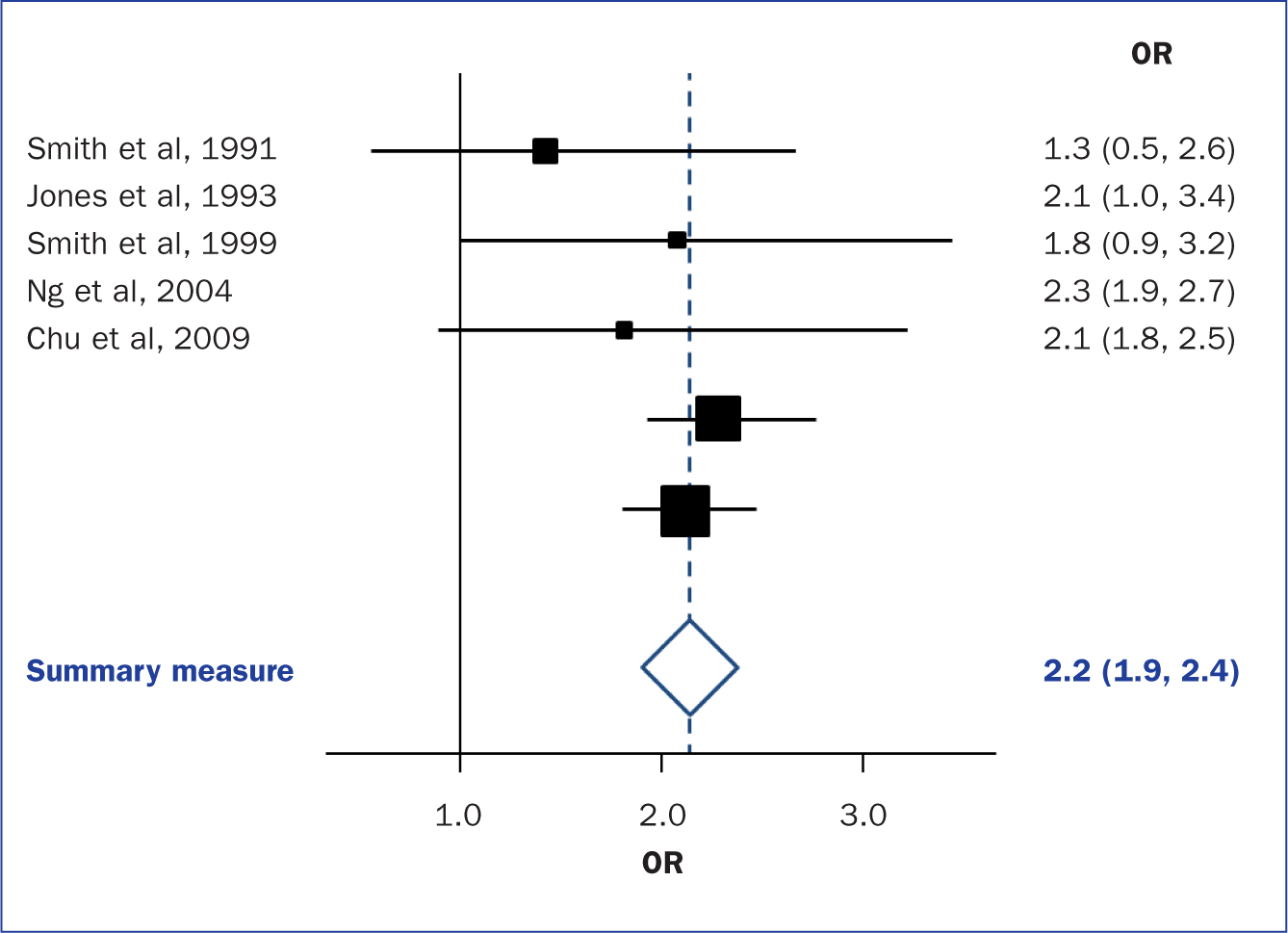

Meta-analysis

When the methods and analyses used are similar or the same, as in some RCTs, the results can be synthesised using a statistical approach called meta-analysis and presented using summary visualisations such as forest plots (or blobbograms) (Figure 5). This can be done only if the results can be combined in a meaningful way.

Meta-analysis can be carried out using common statistical and data science software, such as the cross-platform ‘R’ (https://www.r-project.org), or by using standalone software, such as Review Manager (RevMan), produced by the Cochrane community (https://tinyurl.com/revman-5), which is currently developing a cross-platform version, RevMan Web.
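The arithmetic behind a basic meta-analysis is short enough to sketch. The example below shows inverse-variance fixed-effect pooling, one common method (random-effects models are another), in which each study is weighted by 1 / SE², so larger, more precise studies count for more. The effect estimates are hypothetical mean differences in pain score; a forest plot would draw each study's estimate with its confidence interval alongside the pooled result. Dedicated tools such as RevMan or R packages like metafor implement this and much more.

```python
import math


def fixed_effect_meta(estimates: list[float], std_errors: list[float]):
    """Inverse-variance fixed-effect pooling of study effect estimates.

    Each study is weighted by 1 / SE^2. Returns the pooled estimate,
    its standard error and a 95% confidence interval.
    """
    weights = [1 / se**2 for se in std_errors]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    pooled_se = math.sqrt(1 / total)
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci


# Hypothetical mean differences in pain score (negative favours paracetamol)
estimates = [-1.0, -0.5, -0.8]
std_errors = [0.5, 0.25, 0.5]

pooled, se, (low, high) = fixed_effect_meta(estimates, std_errors)
print(f"pooled = {pooled:.3f}, 95% CI {low:.3f} to {high:.3f}")
```

The second study has half the standard error of the others and therefore four times their weight, which pulls the pooled estimate towards its value of -0.5.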

Carrying out a systematic review is a time-consuming process that on average takes between 6 and 18 months and requires skill from those involved. Ideally, several reviewers will work on a review to reduce bias. Experts such as librarians should be consulted, and included in review teams where possible, to leverage their expertise.

Systematic reviews should present the state of the art (most recent/up-to-date developments) concerning a specific topic and aim to be systematic and reproducible. Reproducibility is aided by transparent reporting of the various stages of a review using reporting frameworks such as PRISMA for standardisation. A high-quality review should present a summary of a specific topic to a high standard upon which other professionals can base subsequent care decisions that increase the quality of evidence-based clinical practice.

- Systematic reviews remain one of the most trusted sources of high-quality information from which to make clinical decisions

- Understanding the components of a review will help practitioners to better assess their quality

- Many formal frameworks exist to help structure and report reviews, the use of which is recommended for reproducibility

- Experts such as librarians can be included in the review team to help with the review process and improve its quality

CPD reflective questions

- Where should high-quality qualitative research sit regarding the hierarchies of evidence?

- What background and expertise should those conducting a systematic review have, and who should ideally be included in the team?

- Consider to what extent inter-rater agreement is important in the screening process

Hierarchy of Evidence Within the Medical Literature

Affiliations.

- 1 Division of Pediatric Hospital Medicine.

- 2 Department of Pediatrics, Baylor College of Medicine, Houston, Texas.

- 3 Department of Pediatrics, Division of Hospital Medicine, Cincinnati Children's Hospital Medical Center, University of Cincinnati College of Medicine, Cincinnati, Ohio.

- PMID: 35909178

- DOI: 10.1542/hpeds.2022-006690

The quality of evidence from medical research is partly determined by the hierarchy of study designs. At the lowest level, the hierarchy begins with animal and translational studies and expert opinion, and then ascends to descriptive case reports or case series, followed by analytic observational designs such as cohort studies, then randomized controlled trials, and finally systematic reviews and meta-analyses as the highest-quality evidence. This hierarchy of evidence is a foundational concept for pediatric hospitalists, given its relevance to key steps of evidence-based practice, including efficient literature searches and prioritization of the highest-quality designs for critical appraisal, to address clinical questions. Consideration of the hierarchy of evidence can also aid researchers in designing new studies by helping them determine the next level of evidence needed to improve upon the quality of currently available evidence. Although the hierarchy of evidence should be taken into consideration for clinical and research purposes, it is important to put it in the context of individual study limitations through meticulous critical appraisal of individual articles.

Copyright © 2022 by the American Academy of Pediatrics.

- Biomedical Research*

- Cohort Studies

- Evidence-Based Medicine*

- Research Design

Best Practice for Literature Searching


Evidence hierarchies

Evidence hierarchies can be useful for assessing the quality of evidence. In the health sciences, they are portrayed as a pyramid with levels for the different types of study design.

Understanding study designs will help you judge the limitations of what can be concluded from a particular study.

While this hierarchy of study types can be used as a guideline in health-related research, you cannot rely on it as a substitute for critical appraisal. A strong cohort study would be more useful than a flawed systematic review. At times, evidence is better sorted by its usefulness for your own research question than by type of study design.

In food research, there is no consensus around the hierarchy of evidence.* Best practice is to decide, when you plan your search, on the type of research you are looking for and what study designs would be appropriate for it.

For example, if you are interested in qualitative studies of people’s behaviour towards nutrition, or in animal studies, you are unlikely to find large RCTs: it is not always ethical or possible to run randomised studies in certain areas, so they may not exist. You are more likely to find qualitative studies that include survey and interview data, write-ups from focus groups, or other types of studies involving crops or animals (often observational or non-randomised studies). Similarly, you might decide that challenge studies are the most appropriate way to research packaging and shelf life.

A systematic review is a study of studies, where researchers follow a predetermined and published protocol to find all the primary research studies done on a question, weigh the reliability of each one, and, if possible, extract the data from the studies in order to draw a conclusion from the combined evidence.

Randomised controlled trials (RCTs) randomly allocate participants to either an intervention or a control group, so that conclusions can be drawn about the efficacy of an intervention.

Cohort studies are observational, longitudinal studies that look at a group of people with a shared experience or characteristic to see how they fare over time with regard to a particular factor.

Case-control studies are observational studies in which a group of cases (i.e. people with a condition or disease) is compared with an analogous group (similar to the case group except that they don't have the condition) to see whether a possible causal factor can be identified for the case group.

Cross-sectional studies are observational studies that describe a population at a certain point in time. These studies can locate correlations but not causal relationships.

*Reported in Research Synthesis Methods and EFSA Journal.


- Last Updated: Nov 25, 2024 2:06 PM

- URL: https://ifis.libguides.com/literature_search_best_practice

The hierarchy of evidence is a fundamental concept in evidence-based research, ranking study designs by their ability to produce reliable and unbiased results. Whether you are in the final year of high school, starting university, or conducting research in the private sector, understanding this hierarchy can help you critically evaluate studies for literature reviews, dissertations, and data-driven decisions.

What is the Hierarchy of Evidence?

The hierarchy of evidence organizes research designs into levels based on the rigor of their methodology and the reliability of their findings. This structure enables researchers to identify credible sources, assess the quality of data, and prioritize studies for critical appraisal and application.

The Levels of Evidence: Consensus Study type filters

The hierarchy of evidence is often depicted as a pyramid, with study types ranked according to their reliability and methodological rigor. Consensus’ study type filters align with these levels, making it easier to find research that matches your needs.

At the top of the pyramid are Meta-Analyses and Systematic Reviews, which represent the most reliable forms of evidence. Meta-analyses statistically combine data from multiple studies, analyzing them as if they were one large study. This approach aims to provide a comprehensive synthesis of existing evidence. Systematic reviews apply rigorous methods to summarize research on a topic, adhering to strict inclusion and appraisal criteria. Together, these study types provide high-quality evidence, particularly for clinical and scientific decision-making.

Randomized Controlled Trials (RCTs) follow in the hierarchy. These experiments randomly assign participants to a treatment or control group, minimizing bias and allowing for robust causal inferences. Non-randomized trials (Non-RCTs) are also valuable but lack the randomization step, making them more prone to bias. Both study types are essential for assessing the efficacy and safety of interventions.

Next are Observational Studies, which include cohort studies, case-control studies, and cross-sectional studies. These studies systematically observe groups without altering their conditions. While they are useful for identifying correlations and generating hypotheses, they cannot establish causality as robustly as experimental studies. Cohort studies track individuals over time, while case-control studies compare individuals with and without a condition. Cross-sectional studies capture data at a single point in time, offering insights into prevalence but lacking temporal depth.

Literature Reviews occupy the next level. These reviews summarize existing research but often lack systematic inclusion criteria, making them more subjective than systematic reviews. Despite this limitation, they provide valuable background information and context.

Case Reports and Case Series are positioned lower in the hierarchy. They provide detailed accounts of individual cases or a small group of cases, often highlighting rare conditions or phenomena. While they are excellent for hypothesis generation, their findings are not generalizable to larger populations.

At the base of the pyramid are Animal Studies and In-Vitro Studies, which involve preclinical research conducted in controlled environments. These studies are foundational for understanding biological mechanisms and testing hypotheses before human trials. However, their findings may not always translate directly to human contexts, limiting their applicability.
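As a rough illustration of using this ordering to decide what to read first, search results can be sorted by their level in the pyramid. The numeric levels below are a hypothetical encoding of the order described above, not an official grading scheme, and the study list is invented; as the section on critical appraisal stresses, a ranking like this only sets the reading order and does not replace appraising each study.

```python
# Lower number = higher in the pyramid, following the order described above.
# The labels and numbers are illustrative, not an official grading scheme.
LEVEL = {
    "meta-analysis": 1, "systematic review": 1,
    "rct": 2,
    "non-randomized trial": 3,
    "cohort": 4, "case-control": 4, "cross-sectional": 4,
    "literature review": 5,
    "case series": 6, "case report": 6,
    "animal": 7, "in vitro": 7,
}


def prioritise(studies: list[dict]) -> list[dict]:
    """Sort by pyramid level, then newest first; unknown designs go last."""
    return sorted(studies, key=lambda s: (LEVEL.get(s["design"].lower(), 99), -s["year"]))


# Hypothetical search results
studies = [
    {"id": "A", "design": "cohort", "year": 2021},
    {"id": "B", "design": "RCT", "year": 2019},
    {"id": "C", "design": "meta-analysis", "year": 2018},
    {"id": "D", "design": "RCT", "year": 2022},
    {"id": "E", "design": "case report", "year": 2023},
]

print([s["id"] for s in prioritise(studies)])  # ['C', 'D', 'B', 'A', 'E']
```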

How to filter in Consensus with 'Study type'

Consensus offers a powerful set of AI-enabled search filters that allow you to refine searches based on specific study characteristics. These filters align with the hierarchy of evidence, enabling users to prioritize research that matches their needs. For instance, you can filter results by type of study design, such as meta-analyses, RCTs, literature reviews, or observational studies, so that searches can be tailored to emphasize the most credible forms of evidence.

Other filters include the publication year, open-access availability, population studied (human, animal, or in-vitro), and study details like whether the research involved controlled trials or human participants. You can also refine searches by sample size, study duration, journal quality (measured by SJR quartile ratings), and even the country where the study took place.

For example, if you are asking a medical question and only want results from human randomized controlled trials, you can apply these filters to exclude other study types. Similarly, if you're interested in long-term studies on a specific topic, you can filter results by study duration.

By leveraging these advanced filtering options, you can focus your research efforts on high-quality and contextually appropriate studies. This approach saves time and helps ensure that the retrieved evidence aligns with your academic, professional, or personal research goals.


Applying the Hierarchy

In academic research, understanding and applying the hierarchy of evidence is crucial. When conducting literature reviews, systematic reviews and RCTs should be prioritized when available, as they provide the most robust data. Lower levels of evidence, such as cohort or case-control studies, can still be valuable, especially when contextualizing findings or exploring areas where higher-level evidence is lacking. For critical appraisal, tools like the Critical Appraisal Skills Programme (CASP) or Centre for Evidence-Based Medicine (CEBM) guidelines can help ensure the quality of selected studies.

In the private sector, decision-making processes benefit from leveraging high-level evidence to ensure robust recommendations and strategies. Lower-level studies may be used to identify research gaps or generate innovative ideas, laying the groundwork for further exploration.

For students working on dissertations or research projects, selecting high-quality studies is essential to strengthen arguments and conclusions. Understanding study design and its place within the hierarchy can also guide the creation of new research projects, aiming for higher positions in the evidence pyramid to ensure impactful and reliable results.

Critical appraisal beyond the pyramid

While the hierarchy provides a valuable framework, critical thinking is essential in its application. It is important to evaluate whether a study aligns with the specific research question and to identify any potential limitations in its design or execution. Biases, whether related to selection, measurement, or publication, should always be considered when interpreting findings. Moreover, adaptability is key, as applying evidence appropriately to real-world scenarios often requires balancing the strengths and limitations of available studies.

Understanding the hierarchy of evidence equips you with the tools to navigate research effectively. By prioritizing high-quality evidence and critically evaluating sources, you can enhance the credibility and impact of your work in academic or professional settings. This systematic approach ensures that decisions and conclusions are grounded in the best available research, fostering a culture of rigorous and informed inquiry.


The hierarchy of evidence: Levels and grades of recommendation

BA Petrisor


Correspondence: Dr. BA Petrisor, Brad Petrisor MD 6North Wing, Hamilton Health Sciences, General Hospital, 237 Barton St. E, Hamilton, Ontario, Canada L8L 2X2. E-mail: [email protected]

This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Evidence-based medicine requires the integration of clinical judgment, recommendations from the best available evidence and the patient's values. 1 The phrase “best available evidence” is used quite frequently, and to understand it fully one needs a clear knowledge of the hierarchy of evidence and of how this evidence can be integrated to formulate a grade of recommendation. 2 It is necessary to place the available literature into a hierarchy, as this allows clearer communication when discussing studies, both in day-to-day activities such as teaching rounds or discussions with colleagues and especially when conducting a systematic review to establish a recommendation for practice. 2 This necessarily requires an understanding of both study design and quality, as well as other aspects that can make placing a study within the hierarchy difficult. 3 A further complication is that a number of systems can be used to place a study into a hierarchy, and depending on the system a study can be placed at a different “level”. 4 In general, however, the different systems rate high-quality evidence as “1” or “high” and low-quality evidence as “4 or 5” or “low”. Recently, some orthopedic journals have adopted the reporting of levels of evidence with individual studies, and in many cases the grading system has been adopted from the Oxford Centre for Evidence-Based Medicine system. 3 Rather than refer to any particular system, we will speak in general terms of those studies deemed to be high-level evidence and relate these to studies of lesser quality.

LEVELS OF EVIDENCE

Study design.

Surgical literature can be broadly classified into articles with a primary interest in therapy, prognosis, harm or economic analysis, or those focusing on overviews, to name a few. 5 Within each classification there is a hierarchy of evidence; that is, some studies are better suited than others to answer a question of therapy, for example, and may more accurately represent the “truth”. The ability of a study to do this rests on two main factors: the study design and the study quality. 3 Here we will focus mostly on studies addressing therapy, as this is generally the most common type of study in the orthopedic surgical literature.

Available therapeutic literature can be broadly categorized into studies of an observational nature and studies with a randomized experimental design. 2 Studies are placed into a hierarchy because those at the top are considered the “best evidence”. 5 In the case of therapeutic trials, these are the randomized controlled trial (RCT) and meta-analyses of RCTs. By the nature of randomization, RCTs have an inherent ability to help control bias. 6, 7 Bias (of which there are many types) can confound the outcome of a study such that the study may overestimate or underestimate the true treatment effect. 8 Randomization achieves this control by balancing not only the known prognostic variables but also, and more importantly, the unknown prognostic variables within a sample population. 7 That is to say, randomization should create an equal distribution of prognostic variables (both known and unknown) between the control and treatment groups. This bias-controlling measure helps attain a more accurate estimate of the truth. 6 Studies of a more observational nature have sources of bias within their designs that are not present in the randomized trial.
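The claim that randomization balances even unmeasured prognostic variables can be checked with a small simulation. This sketch assumes a hypothetical cohort in which age is a "known" variable and smoking stands in for a variable the investigators never recorded; allocation is a simple shuffle-and-split into two equal arms, which ignores both variables. Real trials typically use concealed, often blocked or stratified, allocation sequences, and in small trials chance imbalances remain possible; balance is only expected, not guaranteed.

```python
import random


def randomize(patients: list[dict], rng: random.Random) -> tuple[list[dict], list[dict]]:
    """Randomly permute patients and split them into two equal-sized arms."""
    shuffled = patients[:]  # copy so the original list order is untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def mean_age(arm: list[dict]) -> float:
    return sum(p["age"] for p in arm) / len(arm)


def smoker_proportion(arm: list[dict]) -> float:
    return sum(p["smoker"] for p in arm) / len(arm)


rng = random.Random(2024)  # fixed seed so the simulation is repeatable
# Hypothetical cohort: allocation never looks at age or smoking status.
patients = [{"age": rng.randint(18, 90), "smoker": rng.random() < 0.3}
            for _ in range(2000)]

treatment, control = randomize(patients, rng)
for name, arm in (("treatment", treatment), ("control", control)):
    print(name, len(arm), round(mean_age(arm), 1), round(smoker_proportion(arm), 3))
```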

Meta-analyses of randomized controlled trials in effect take the data from individual RCTs and statistically pool them. 5, 9 This increases the number of patients from whom the data were obtained, thereby increasing the effective sample size. The major drawback to this pooling is that it depends on the quality of the RCTs used. 9 For example, if three RCTs favor a treatment and two do not, or if the estimates of treatment effect vary widely between RCTs and have large confidence intervals (i.e. the point estimate of the treatment effect is imprecise), then some variable (or variables) is causing inconsistent results between studies (one such variable may be differences in study quality), and the results of statistical pooling will be of poor quality. However, if five methodologically well-conducted RCTs are used, all of which favor a treatment and have precise measures of treatment effect (i.e. narrow confidence intervals), then the data obtained from statistical pooling are much more believable. The former set of studies can be termed heterogeneous and the latter homogeneous. 9
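The distinction between heterogeneous and homogeneous sets of trials is commonly quantified with Cochran's Q and the I² statistic. These are not named in the text above and are swapped in here as standard measures: Q sums each study's weighted squared deviation from the inverse-variance pooled estimate, and I² = max(0, (Q - df) / Q) with df = k - 1 expresses the share of variation beyond what chance would explain. The log odds ratios below are hypothetical and mirror the two scenarios described.

```python
def heterogeneity(estimates: list[float], std_errors: list[float]) -> tuple[float, float]:
    """Return Cochran's Q and the I^2 statistic (as a percentage)."""
    weights = [1 / se**2 for se in std_errors]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, i2


# Hypothetical log odds ratios (negative favours treatment), all with SE 0.1
consistent = [-0.5, -0.6, -0.55, -0.5, -0.6]  # five trials, all favour treatment
inconsistent = [-1.2, -0.1, 0.4, -0.9, 0.2]   # three favour it, two do not
se = [0.1] * 5

for label, est in (("homogeneous", consistent), ("heterogeneous", inconsistent)):
    q, i2 = heterogeneity(est, se)
    print(f"{label}: Q = {q:.1f}, I^2 = {i2:.0f}%")
```

With Q smaller than its degrees of freedom, the first set gives I² = 0%; the second set gives an I² of roughly 98%, a signal that pooling these trials into one estimate may be misleading.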

In contrast, the lowest level of the hierarchy (aside from expert opinion) is the case report and case series. 3 These are usually retrospective in nature and have no comparison group. They can provide outcomes for only one subgroup of the population (those with the intervention). There is potential for bias to be introduced, especially if data collection or follow-up is incomplete, which may happen with retrospective study designs. Also, these studies are usually based on a single surgeon's or center's experience, which may raise doubts about the generalizability of the results. Even with these drawbacks, this study design may be useful in many ways. Case reports and series can be used effectively for hypothesis generation, and can provide information on rare disease entities or on complications that may be associated with certain procedures or implants. Examples include reporting infection rates following a large series of tibial fractures treated with a reamed intramedullary nail 10 or the rate of hardware failure of a particular implant.

The next level of study is the case-control study. It starts with a group of patients who have had an outcome of interest (cases) and compares them with similar individuals without that outcome (controls), looking back to see which factors were present and may be associated with the outcome. Take a hypothetical example: patients who develop a nonunion after a tibial shaft fracture treated with an intramedullary nail. To identify prognostic factors that may have contributed, one could assemble a control group without nonunion, matched for known prognostic variables such as age, treatment type and fracture pattern, and then compare the two groups on other prognostic variables, such as smoking or nonsteroidal anti-inflammatory drug use, to see whether any of these is associated with nonunion. A drawback of this design is that unknown or as yet unidentified risk factors cannot be analyzed. For the factors that are known, however, the strength of association can be estimated and expressed as an odds ratio, which approximates the relative risk when the outcome is rare. Other strengths of the case-control design are that it is usually less expensive to carry out and can answer a specific question more quickly. It also allows analysis of multiple prognostic factors, and of relationships among them, to help identify potential associations with the outcome of interest (in this case, nonunion).
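The odds ratio mentioned above comes from a 2×2 table of cases and controls by exposure. The sketch below uses hypothetical counts for the nonunion example, with smoking as the exposure, and Woolf's log-scale method for the 95% confidence interval, one standard choice among several. For simplicity it treats the table as unmatched and assumes all four cells are non-zero; a matched design would normally be analyzed with matched methods such as conditional logistic regression. The function name is illustrative.

```python
import math


def odds_ratio(exp_cases, unexp_cases, exp_controls, unexp_controls, z=1.96):
    """Odds ratio and Woolf 95% CI from an unmatched case-control 2x2 table.

    Assumes every cell is non-zero (a zero cell makes the log undefined).
    """
    or_ = (exp_cases * unexp_controls) / (unexp_cases * exp_controls)
    # Standard error of log(OR): square root of the summed reciprocals of the cells.
    se_log = math.sqrt(1 / exp_cases + 1 / unexp_cases
                       + 1 / exp_controls + 1 / unexp_controls)
    lower = math.exp(math.log(or_) - z * se_log)
    upper = math.exp(math.log(or_) + z * se_log)
    return or_, lower, upper


# Hypothetical counts: 60 nonunion cases (40 smokers), 100 controls (30 smokers).
or_, lower, upper = odds_ratio(exp_cases=40, unexp_cases=20,
                               exp_controls=30, unexp_controls=70)
print(f"OR = {or_:.2f}, 95% CI {lower:.2f} to {upper:.2f}")
```

Here the odds of smoking are 40/20 = 2 among cases and 30/70 among controls, an odds ratio of about 4.7. Because the whole interval lies above 1, chance alone is an unlikely explanation in these made-up data, although unmeasured risk factors remain the limitation the text describes.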

Slightly higher than the case-control study on the levels of evidence hierarchy [3] is the cohort study. It is usually conducted prospectively (although it can be done retrospectively) and usually follows two groups of patients, one with a risk factor or prognostic factor of interest and one without. The groups are followed to compare the rate at which a disease or specific outcome develops in those with the risk factor and in those without. Because it is usually prospective, the cohort study ranks higher in the hierarchy: data collection and follow-up can be monitored more closely and made as complete and accurate as possible. This design can be very powerful in some instances. For example, to study the effect of smoking on nonunion rates, it would be neither ethical nor generally possible to randomize patients with fractures to smoke or not to smoke. However, by following two groups of patients with tibial fractures, smokers and non-smokers, one can document the nonunion rate in each group. Because the design is prospective, the groups can be matched to limit bias from at least the known prognostic variables, such as age, fracture pattern or treatment type.
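Because a cohort study follows both groups forward, the incidence of the outcome can be measured directly in each group and the association expressed as a relative risk. The minimal sketch below uses hypothetical counts for the smoking and nonunion example and a log-scale 95% confidence interval (one common method); it assumes at least one event in each group, and the function name is illustrative.

```python
import math


def relative_risk(events_exp, n_exp, events_unexp, n_unexp, z=1.96):
    """Relative risk with a log-scale 95% CI for a two-group cohort.

    Assumes at least one event in each group.
    """
    risk_exp = events_exp / n_exp          # incidence among exposed (e.g. smokers)
    risk_unexp = events_unexp / n_unexp    # incidence among unexposed
    rr = risk_exp / risk_unexp
    se_log = math.sqrt(1 / events_exp - 1 / n_exp + 1 / events_unexp - 1 / n_unexp)
    lower = math.exp(math.log(rr) - z * se_log)
    upper = math.exp(math.log(rr) + z * se_log)
    return rr, lower, upper


# Hypothetical cohort: 200 smokers (30 nonunions) vs 200 non-smokers (12 nonunions).
rr, lower, upper = relative_risk(30, 200, 12, 200)
print(f"RR = {rr:.2f}, 95% CI {lower:.2f} to {upper:.2f}")
```

Nonunion occurs in 15% of the hypothetical smokers and 6% of the non-smokers, a relative risk of 2.5. Unlike the odds ratio from a case-control study, this is a direct ratio of incidences, which the forward-looking cohort design makes possible.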

It is important to understand the distinctions between study designs. Some investigators argue that well-constructed observational studies reach conclusions similar to those of RCTs [11]. Others, however, suggest that observational studies have a greater potential to over- or underestimate treatment effects. Indeed, examples from both medical and orthopedic surgical specialties show that randomized and nonrandomized trials can produce discrepant results [6, 8, 12]. One recent nonsurgical example is hormone replacement therapy in postmenopausal women [13, 14]. Earlier observational studies suggested that hormone replacement therapy had a significant effect on bone density and a favorable risk profile. However, a recent large RCT found an increased incidence of cardiac and other adverse events in women receiving hormone replacement therapy, risks that observational studies had underestimated [13, 14]. As a result, first-line therapy for postmenopausal osteoporosis has shifted [13]. In the orthopedic literature, a comparison of randomized and nonrandomized studies of arthroplasty versus internal fixation suggested that the nonrandomized studies overestimated the risk of mortality after arthroplasty and underestimated the risk of revision surgery with arthroplasty [8]. Interestingly, the investigators also found that the nonrandomized studies whose results resembled those of the randomized studies had controlled for patient age, gender and fracture displacement between groups [8]. This illustrates the importance both of controlling for known variables and of randomization, which also balances potentially important but as yet unknown variables.
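How a nonrandomized comparison can mislead, and how randomization helps, can be illustrated with a small simulation. Everything in it is hypothetical and does not reproduce the data of the studies cited. Age is the only confounder, older patients are assumed to die more often regardless of treatment, surgeons are assumed to prefer arthroplasty for older patients, and the true effect of arthroplasty on mortality is set to zero. The function name and all probabilities are illustrative assumptions.

```python
import random


def mortality_difference(n, randomized, seed=0):
    """Simulated mortality(arthroplasty) - mortality(fixation) in n patients.

    Hypothetical model: mortality depends only on age, so the true
    treatment effect is zero.
    """
    rng = random.Random(seed)
    patients = {"arthroplasty": 0, "fixation": 0}
    deaths = {"arthroplasty": 0, "fixation": 0}
    for _ in range(n):
        older = rng.random() < 0.5               # half the patients are "older"
        if randomized:
            p_arthroplasty = 0.5                 # coin flip, independent of age
        else:
            p_arthroplasty = 0.8 if older else 0.2   # assumed surgeon preference
        arm = "arthroplasty" if rng.random() < p_arthroplasty else "fixation"
        p_death = 0.20 if older else 0.05        # depends on age, not on treatment
        patients[arm] += 1
        if rng.random() < p_death:
            deaths[arm] += 1
    return (deaths["arthroplasty"] / patients["arthroplasty"]
            - deaths["fixation"] / patients["fixation"])


print(f"nonrandomized: {mortality_difference(20000, randomized=False, seed=1):+.3f}")
print(f"randomized:    {mortality_difference(20000, randomized=True, seed=1):+.3f}")
```

In expectation, the nonrandomized comparison shows about 9 percentage points more deaths after arthroplasty (17% versus 8%) even though the treatment itself has no effect, while the randomized comparison centers on zero. Comparing patients within one age group would also remove this particular bias, much as the nonrandomized studies that controlled for age agreed better with RCTs; only randomization, however, also balances confounders that were never measured.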

Thus the study design places a study broadly within a hierarchy of evidence, from the case series up to the randomized controlled trial. There is also, however, an internal hierarchy within each level, usually based on study methodology and overall quality.

STUDY QUALITY

Study methodology is important to consider when placing a study within the levels of evidence. Some advocate dividing the hierarchy levels into sub-levels based in part on study methodology, while others suggest that poor methodology should move a study down a level [2, 3]. For instance, one RCT may be considered a very high-level study, while another RCT with methodological limitations may be considered lower. Do these then fall into separate categories or into sub-categories of the same level? That depends on the level of evidence system being used. The real point is that these systems acknowledge a difference in the quality, and thus the “level”, of these studies. In many instances, however, the methodological limitations that move a study down a level are not clearly defined, and it is left to the reader to categorize the study correctly. The rigor with which a study is conducted affects how believable its results are [15, 16]. Not all case-control, cohort or randomized studies are conducted to the same standard, and if repeated they may yield different results, whether because of chance or because of confounding variables and biases. Taking the RCT as an example, one needs to examine every aspect of the methodology to judge how rigorously the study was conducted. We present three examples of how different aspects of methodology may affect the results of a trial. Although it is important to read the methods section of a paper closely, it must be remembered that if something is not reported as having been done (such as the method of randomization), it was not necessarily left undone [17]. This illustrates the importance of tools such as the Consolidated Standards of Reporting Trials (CONSORT) statement, which aims to improve the quality of trial reporting [18, 19].

Randomization

Because randomization is the key to balancing prognostic variables, the first step is to determine how it was done. The most important concepts are that the allocation is truly random and that it is concealed. If the group to which a patient will be allocated can be known in advance, it may be possible to influence that patient's allocation. Examples include allocating by chart number, birth date, or odd or even days. Such schemes introduce selection bias, which negates the effect of randomization, and this makes concealment of allocation a vital component of successful randomization. Allocation can be concealed by using off-site randomization centers or web-based or telephone-based randomization.
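The contrast between predictable and concealed allocation can be made concrete with a short sketch. The class below is a hypothetical stand-in for an off-site randomization service: it generates the allocation sequence in permuted blocks (a common technique, assumed here rather than taken from the text) and reveals a patient's group only after that patient has been enrolled, unlike a chart-number or odd/even-day rule that anyone at the site can predict. All names are illustrative.

```python
import random


class CentralRandomizer:
    """Hypothetical off-site randomization service (illustrative only).

    The 1:1 allocation sequence is generated in permuted blocks and kept
    private; a site learns a patient's group only by enrolling that patient.
    """

    def __init__(self, n_blocks, block_size=4, seed=None):
        if block_size % 2:
            raise ValueError("block_size must be even for 1:1 allocation")
        rng = random.Random(seed)  # a real service would keep any seed secret
        self._sequence = []
        for _ in range(n_blocks):
            block = (["treatment"] * (block_size // 2)
                     + ["control"] * (block_size // 2))
            rng.shuffle(block)  # random order within each balanced block
            self._sequence.extend(block)
        self._next = 0
        self._log = {}  # patient_id -> group, kept as an audit trail

    def enroll(self, patient_id):
        """Register a patient and only then reveal the allocated group."""
        if patient_id in self._log:
            raise ValueError(f"{patient_id} has already been randomized")
        if self._next >= len(self._sequence):
            raise RuntimeError("allocation sequence exhausted")
        group = self._sequence[self._next]
        self._next += 1
        self._log[patient_id] = group
        return group


trial = CentralRandomizer(n_blocks=25, block_size=4, seed=2024)
print(trial.enroll("patient-001"))
```

A fixed, known block size can itself let staff guess the last allocation in a block, which is why trials often vary block sizes and keep them undisclosed; this sketch uses a single block size for brevity.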

Blinding is a second aspect of methodology to examine. In surgical trials, blinding is clearly not possible for some aspects of the trial: it is not possible (or ethical) to blind the surgeon, and it is usually not possible to blind the patient to the treatment received. However, blinding can still play a role elsewhere in a trial. For instance, outcome assessors, data analysts and other outcome adjudicators can be blinded. It is therefore important to know who is collecting the data and to ask whether they are independent and whether they were blinded to the treatment received. If not, they may, consciously or subconsciously, influence the patient and the results.

A third aspect is loss to follow-up; the number of patients lost to follow-up matters because it can affect the estimate of treatment effect. While some argue that only 0% loss to follow-up fully preserves the benefits of randomization [20], in general the validity of a study may be threatened if more than 20% of patients are lost to follow-up [5]. The analysis should include a worst-case scenario, in which patients lost to follow-up from the treatment group are assumed to have had the worst outcome and those lost from the control group the best outcome. If a treatment effect is still seen between the groups, this makes a more compelling argument that the observed treatment effect is a valid estimate of the truth [21].
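The worst-case scenario can be computed directly. The sketch below assumes a binary outcome (good versus poor) and hypothetical numbers: 100 patients randomized to each arm, with 20 lost from the treatment arm and 10 from the control arm. The class and function names are illustrative, and the sketch compares point estimates only; it does not test statistical significance.

```python
from dataclasses import dataclass


@dataclass
class Arm:
    randomized: int  # patients allocated to this arm
    followed: int    # patients with outcome data at final follow-up
    good: int        # patients with a good outcome, among those followed

    @property
    def lost(self):
        return self.randomized - self.followed

    @property
    def fraction_lost(self):
        return self.lost / self.randomized


def complete_case_difference(tx, ctl):
    """Difference in good-outcome rates using only patients who were followed."""
    return tx.good / tx.followed - ctl.good / ctl.followed


def worst_case_difference(tx, ctl):
    """Lost treatment patients count as poor outcomes, lost controls as good."""
    tx_rate = tx.good / tx.randomized
    ctl_rate = (ctl.good + ctl.lost) / ctl.randomized
    return tx_rate - ctl_rate


treatment = Arm(randomized=100, followed=80, good=60)
control = Arm(randomized=100, followed=90, good=45)

print(f"lost: {treatment.fraction_lost:.0%} vs {control.fraction_lost:.0%}")
print(f"complete-case difference: {complete_case_difference(treatment, control):+.2f}")
print(f"worst-case difference:    {worst_case_difference(treatment, control):+.2f}")
```

In these made-up data the treatment arm loses 20% of its patients, right at the threshold cited above. The complete-case analysis suggests a 25-percentage-point benefit; under the worst-case assumptions the benefit shrinks to 5 points but still favors treatment, the pattern the text describes as more compelling.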

Scales have been devised that rate a study's methodology and assign it a score [22]. This need not always be done in daily practice. Knowledge of the different areas of methodology, however, can inform interpretation of the results and help a reader recognize a “strong” study, whose results are more compelling and “believable” than those of a “weaker” study.

Grades of recommendation

When does assessing the quality of a study in relation to the levels of evidence truly matter? It matters when a grade of recommendation is being developed. A very important concept is that a single high-level therapeutic study does not by itself imply a high grade of recommendation for treatment. A grade of recommendation can be developed only after a thorough systematic review of the literature and, in many cases, discussion with content experts [2, 4, 23]. When developing grades of recommendation, studies should be weighted, with more weight given to high-quality studies that are high on the hierarchy and less to lower-quality studies [2].

The GRADE working group suggests a system for grading the quality of evidence obtained from a thorough systematic review [Table 1]. This grading should be done for all outcomes of interest as well as all potential harms. Once the total body of evidence has been graded, the group suggests, recommendations for treatment can be made.

Table 1: Criteria for assigning grade of evidence [22]

The GRADE working group suggests that four areas be considered when making a recommendation for treatment: 1) the balance of benefits and harms (are there clear benefits to an intervention, or does it do more harm than good?); 2) the quality of the evidence; 3) modifying factors in the clinical setting, such as the proximity of qualified persons able to carry out the intervention; and 4) the baseline risk of the population to be treated [1]. Once these factors are taken into account, the GRADE working group recommends placing each recommendation into one of four categories: “do it”, “don't do it”, “probably do it” or “probably don't do it”. The grades “do it” and “don't do it” are defined as “a judgment that most well-informed people would make”, whereas “probably do it” and “probably don't do it” are defined as “a judgment that the majority of well-informed people would make but a substantial minority would not” [2].

Thus a grade of recommendation, in contrast to a level of evidence, is made on the basis of the four criteria above. Inherent in these criteria are a thorough review of the literature and a grading of the studies based on knowledge of study design and methodology. Evidence-based medicine is described as decision-making that integrates three elements: clinical experience, the best available evidence and patient values. Knowledge of the levels of evidence, of the strengths and weaknesses of different study designs, and of how methodology affects a study's place in the hierarchy addresses one of these elements. Grades of recommendation developed with the GRADE working group system give clinicians the tools to convey the best available evidence to patients and help the literature guide the busy clinician. Individual patients also value the harms and benefits of various treatments differently. Discussing with patients what matters to them, combined with surgical experience and “what works in my hands”, rounds out the decision-making when developing a treatment plan.

Disclaimer: No funding was received in the development of this manuscript. Dr. Bhandari is supported in part by Canada Research Chair, McMaster University.

Source of Support: Nil.

REFERENCES

- 1. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: What it is and what it isn't. BMJ. 1996;312:71–2. doi: 10.1136/bmj.312.7023.71.

- 2. Atkins D, Best D, Briss PA, Eccles M, Falck-Ytter Y, Flottorp S, et al. Grading quality of evidence and strength of recommendations. BMJ. 2004;328:1490. doi: 10.1136/bmj.328.7454.1490.

- 3. Phillips B, Ball C, Sackett DL, Badenoch D, Straus S, Haynes B, et al. Levels of evidence and grades of recommendation. Oxford Centre for Evidence-Based Medicine; 1998.