Data-driven hypothesis generation in clinical research: what we learned from a human subject study

Hypothesis generation is an early and critical step in any hypothesis-driven clinical research project. Because it is not yet a well-understood cognitive process, the need to improve the process goes unrecognized. Without an impactful hypothesis, the significance of any research project can be questionable, regardless of the rigor or diligence applied in other steps of the study, e.g., study design, data collection, and result analysis. In this perspective article, the authors first provide a literature review on the following topics: scientific thinking, reasoning, medical reasoning, literature-based discovery, and a field study to explore scientific thinking and discovery. Over the years, research on scientific thinking has made excellent progress in cognitive science and its applied areas: education, medicine, and biomedical research. However, a review of the literature reveals a lack of original studies on hypothesis generation in clinical research. The authors then summarize their first human participant study exploring data-driven hypothesis generation by clinical researchers in a simulated setting. The results indicate that a secondary data analytical tool, VIADS (a visual interactive analytic tool for filtering, summarizing, and visualizing large health data sets coded with hierarchical terminologies), can shorten the average time participants need to generate a hypothesis and reduce the number of cognitive events required per hypothesis. As a counterpoint, this exploration also indicates that hypotheses generated with VIADS received significantly lower ratings for feasibility. Despite its small scale, the study confirmed the feasibility of conducting a human participant study directly to explore the hypothesis generation process in clinical research.
This study provides supporting evidence to conduct a larger-scale study with a specifically designed tool to facilitate the hypothesis-generation process among inexperienced clinical researchers. A larger study could provide generalizable evidence, which in turn can potentially improve clinical research productivity and the overall clinical research enterprise.

Article Details

The Medical Research Archives grants authors the right to publish and reproduce the unrevised contribution in whole or in part at any time and in any form for any scholarly non-commercial purpose with the condition that all publications of the contribution include a full citation to the journal as published by the Medical Research Archives.

Hypothesis Generation: The Key to Unlocking Insights in Data Science

As data scientists, we’re often eager to flex our modeling muscles and dive straight into the algorithmic deep end. But in our haste to get to the "fun stuff", we risk overlooking one of the most pivotal steps in any successful data science project: thorough hypothesis generation.

Seasoned data scientist and author Scott Wentworth sums it up well: "Hypotheses are the scaffolding upon which data science is built. Without them, you’re just rearranging deck chairs on the Titanic."

In this post, we’ll explore what hypothesis generation is and why it’s so critical, walk through an applied example, and share some best practices for making it an integral part of your data science workflow.

What is Hypothesis Generation?

At its core, hypothesis generation is the process of leveraging your domain expertise and business intuition to identify educated guesses about the key factors influencing your outcome of interest. These hypotheses could pertain to:

- Predictor variables likely to be important in a supervised learning model

- Suspected segments or cohorts of interest in unsupervised clustering

- Speculated root causes to investigate in anomaly detection

- Potential confounding factors to control for in causal inference

The key is that you’re making these suppositions before looking at the data or knowing the actual outcomes. You’re tapping into your subject matter knowledge to surface areas of high potential impact.

Consider this eye-opening statistic: a 2021 McKinsey global survey found that 60% of respondents consider hypothesis generation and asking the right business questions to be one of the top three most important skills for data scientists. Yet less than a third felt this muscle was well-developed in their organizations.

Distinguishing Hypothesis Generation from Testing

One common point of confusion, especially for those new to data science, is the difference between hypothesis generation and hypothesis testing. While the two are closely linked, they are distinct steps in the scientific process.

Hypothesis generation happens first and is about creatively brainstorming ideas based on your mental models and assumptions. You’re not proving anything yet, just articulating possibilities grounded in reason.

Once armed with a set of hypotheses, you can then shift into hypothesis testing mode. This is where you statistically analyze the data to determine which hypotheses have merit. Common techniques include:

- Significance testing of regression coefficients

- Chi-squared tests for independence

- Analysis of variance (ANOVA)

- Bayesian posterior probabilities
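To give a feel for one of these techniques, here is a minimal sketch of a chi-squared test for independence on a 2x2 table, written with only the Python standard library. The counts are made up for illustration, and the function name `chi_squared_2x2` is our own; in practice you would likely reach for a statistics library instead.

```python
import math

def chi_squared_2x2(table):
    """Pearson chi-squared test of independence for a 2x2 table of counts.

    Returns (statistic, p_value). No continuity correction is applied.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, the chi-squared survival function
    # reduces to erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value

# Made-up counts: rows = (rainy, dry), columns = (long trip, short trip).
counts = [[30, 20], [20, 30]]
stat, p = chi_squared_2x2(counts)
print(f"chi2 = {stat:.2f}, p = {p:.4f}")
```

A small p-value here would suggest that the row and column variables are not independent, which is evidence for the hypothesis but not proof of a causal link.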

The gold standard is to design controlled experiments to test your hypotheses whenever possible. Randomly assigning subjects to treatment and control groups allows you to isolate causal effects and rule out confounding factors.
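Random assignment itself is simple to sketch. The snippet below is an illustration only: the helper name `assign_groups` and the integer unit IDs are assumptions, and a real experiment would also need to consider stratification and sample size.

```python
import random

def assign_groups(unit_ids, seed=0):
    """Randomly split units into treatment and control groups.

    With an odd number of units, the control group gets the extra one.
    """
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    shuffled = list(unit_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}

groups = assign_groups(range(10), seed=42)
print(groups)
```

Because the shuffle ignores every attribute of the units, any confounding factor is spread across both groups by chance rather than by design.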

While testing is absolutely critical, be careful not to shortchange hypothesis generation in your eagerness to test. Insufficient hypothesis generation often leads to flawed experimental designs and false conclusions down the road.

The Power of Hypothesis Generation Done Right

When done thoroughly and intentionally, hypothesis generation yields several powerful benefits:

Enhanced problem understanding. The process of generating hypotheses compels you to think deeply about the dynamics and nuances of the problem at hand. You’ll often uncover new angles and considerations that deepen your overall understanding.

Targeted data collection and feature engineering. Your hypotheses directly inform what data to collect and how to process it. By identifying key factors upfront, you can be much more intentional and efficient in your data sourcing and transformation efforts.

Structured, efficient exploration. With a robust set of hypotheses, you have a logical roadmap for your exploratory data analysis. Rather than aimlessly fishing for correlations, you can systematically test each hypothesis and prune your feature space accordingly.

Stronger stakeholder alignment. Articulating and socializing your hypotheses builds shared context with business partners. Discussing hypotheses creates a forum to pressure test assumptions and align on what success looks like.

The value of a hypothesis-driven approach really shines through when you look at the aggregate impact. The State of Data Science 2021 report by Anaconda found that teams that consistently use hypothesis generation and testing are:

- 28% more likely to deliver projects on schedule

- 31% more likely to have their work positively impact decision-making

- 43% more likely to see their models reliably deployed to production

Hypothesis Generation in Practice: NYC Taxi Trip Duration

To make things concrete, let’s illustrate hypothesis generation in the context of a real data science problem: predicting taxi trip durations in New York City.

Imagine you’re a data scientist at the NYC Taxi & Limousine Commission. With over 200 million taxi trips per year, even small improvements in dispatching and routing efficiency could yield huge dividends in terms of driver utilization and passenger satisfaction. Your task is to build a predictive model for trip duration to help optimize the dispatcher algorithms.

Before even looking at the data, you’d want to generate an extensive set of hypotheses across categories like:

Pickup and dropoff locations

- Trips between boroughs will be significantly longer than those within the same borough

- Pickups or dropoffs at airports will have longer durations due to traffic and loading times

- Trips ending in Manhattan will be longer than those ending in outer boroughs

Time & seasonality factors

- Trips during weekday rush hours (7-9am, 4-6pm) will be longest

- Trip durations will be higher in summer months due to increased tourist traffic

- Trips on evenings before major holidays will be longer due to high demand

Weather conditions

- Trips during periods of rain or snow will take markedly longer

- Extreme temperatures (>90°F or <30°F) will lead to longer trips as drivers and passengers adjust

Driver attributes

- More experienced drivers, as measured by lifetime trips, will have shorter durations

- Drivers who have taken breaks recently will complete trips faster

- Drivers with higher average rating scores will have shorter trip times

Passenger characteristics

- Trips with more than 2 passengers will have longer durations due to loading/unloading

- Trips where the passenger indicates they’re in a hurry will be faster

- Trips booked via a corporate account may be longer due to expense account usage

The goal is to be as exhaustive as possible in brainstorming potential influencing factors. You’re not aiming for perfection, but rather to cast a wide net and engage your creativity.
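One way to keep brainstormed hypotheses actionable is to write each one down as a candidate feature. The sketch below does this for a handful of the hypotheses listed above. Everything about the trip record is an assumption made for illustration: the field names, the airport zone labels, and the choice of June through August as "summer" are ours, not part of any official dataset schema.

```python
from datetime import datetime

AIRPORT_ZONES = {"JFK", "LGA"}  # hypothetical zone labels

def hypothesis_features(trip):
    """Turn a trip record (a dict with assumed field names) into boolean
    features, one per brainstormed hypothesis."""
    pickup_time = trip["pickup_time"]
    hour = pickup_time.hour
    is_weekday = pickup_time.weekday() < 5  # Monday=0 ... Friday=4
    return {
        "inter_borough": trip["pickup_borough"] != trip["dropoff_borough"],
        "airport_trip": (trip["pickup_zone"] in AIRPORT_ZONES
                         or trip["dropoff_zone"] in AIRPORT_ZONES),
        # Rush hours as stated in the hypothesis: 7-9am and 4-6pm.
        "weekday_rush_hour": is_weekday and (7 <= hour < 9 or 16 <= hour < 18),
        "summer": pickup_time.month in (6, 7, 8),
        "large_party": trip["passenger_count"] > 2,
    }

example_trip = {
    "pickup_time": datetime(2016, 7, 12, 8, 15),  # a Tuesday morning
    "pickup_borough": "Manhattan",
    "dropoff_borough": "Queens",
    "pickup_zone": "Midtown",
    "dropoff_zone": "JFK",
    "passenger_count": 3,
}
features = hypothesis_features(example_trip)
print(features)
```

Hypotheses that cannot be expressed this way, for example because the data lacks driver break times, are worth flagging early as data collection gaps.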

With your set of hypotheses defined, you’d then translate each into a testable statistical statement. For example:

- Null hypothesis: Average trip duration is equal for pickups in Manhattan vs. outer boroughs

- Alternative hypothesis: Average trip duration is greater for pickups in Manhattan vs. outer boroughs
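A null and alternative hypothesis pair like this one can be checked with a one-sided permutation test. The sketch below uses only the standard library and tiny made-up samples of trip durations in minutes; the function name and the data are assumptions for illustration, and a permutation test is just one of several reasonable choices here.

```python
import random
from statistics import mean

def permutation_test_greater(group_a, group_b, n_permutations=2000, seed=0):
    """One-sided permutation test for H1: mean(group_a) > mean(group_b).

    Returns (observed_difference, estimated_p_value).
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the group labels are exchangeable,
        # so shuffle the pooled values and re-split them.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if diff >= observed:
            at_least_as_extreme += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    p_value = (at_least_as_extreme + 1) / (n_permutations + 1)
    return observed, p_value

# Made-up trip durations in minutes.
manhattan_minutes = [18, 22, 25, 30, 27, 21, 24, 29]
outer_minutes = [12, 15, 14, 19, 16, 13, 17, 18]
observed, p_value = permutation_test_greater(manhattan_minutes, outer_minutes)
print(f"difference in means = {observed:.1f} min, p = {p_value:.4f}")
```

A small p-value would lead you to reject the null hypothesis of equal average durations in favor of the one-sided alternative.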

You’d then conduct rigorous exploratory data analysis to assess the signal in each hypothesized relationship. Techniques like correlation matrices, bivariate plots, and feature importance scores would help identify the most promising hypotheses to carry forward into modeling.
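As a rough illustration of this screening step, the sketch below ranks a few candidate features by the absolute value of their Pearson correlation with trip duration. The feature names and numbers are invented, and linear correlation is only a first-pass signal: it will miss non-linear relationships and says nothing about causation.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def rank_features(features, target):
    """Sort features by the strength (absolute value) of their correlation."""
    scores = {name: pearson(values, target) for name, values in features.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)

# Made-up data for six trips.
duration = [10, 14, 19, 25, 31, 36]
features = {
    "distance_km": [2, 3, 4, 5, 6, 7],
    "passenger_count": [1, 3, 2, 1, 4, 2],
    "rush_hour": [0, 0, 1, 0, 1, 1],
}
ranking = rank_features(features, duration)
for name, r in ranking:
    print(f"{name}: r = {r:+.2f}")
```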

Advanced Techniques and Considerations

As you become more experienced with hypothesis generation, there are some advanced techniques to keep in mind:

Causal inference. When your goal is to uncover causal relationships (e.g. what levers actually drive customer churn), using causal graphs and directed acyclic graphs (DAGs) can yield crisper hypotheses grounded in causal theory.

Bayesian priors. Incorporating prior probabilities into your hypotheses can help quantify your pre-existing beliefs. Tools like pymc allow you to specify distributional priors and update them with data.

Partial pooling. If you have hierarchical or grouped data (e.g. customers within regions), using partial pooling via mixed effects models can help surface hypotheses about group-level differences.

Transfer learning. When working in a domain related to a previous project, consider starting with the most successful hypotheses from that work. While not guaranteed to hold, they can accelerate idea generation.
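To illustrate the Bayesian priors idea without any dependencies, here is a minimal sketch of a conjugate Beta-Binomial update. It does not use pymc; it relies on the standard result that a Beta(alpha, beta) prior on a proportion, combined with binomial data, gives a Beta posterior. The prior parameters and the counts are made up.

```python
def update_beta_prior(alpha, beta, successes, trials):
    """Conjugate update: Beta(alpha, beta) prior plus binomial data
    gives a Beta(alpha + successes, beta + failures) posterior."""
    return alpha + successes, beta + (trials - successes)

def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)

# Hypothetical prior belief: about 20% of rainy-day trips run long.
prior = (2, 8)
# Hypothetical data: 30 of 100 observed rainy-day trips ran long.
posterior = update_beta_prior(*prior, successes=30, trials=100)

print(f"prior mean     = {beta_mean(*prior):.3f}")
print(f"posterior mean = {beta_mean(*posterior):.3f}")
```

The posterior mean lands between the prior belief and the observed rate, and moves closer to the data as the number of observations grows.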

It’s also important to be mindful of some common pitfalls:

Confirmation bias. It’s easy to gravitate towards hypotheses that conform to our pre-existing notions. Make a conscious effort to generate counter-hypotheses that challenge your assumptions.

Failure to iterate. Hypothesis generation is not a one-and-done exercise. As you explore the data and test your initial hypotheses, new ideas should emerge. Build in time to brainstorm additional hypotheses throughout your project lifecycle.

Prioritization paralysis. Analysis is often subject to time and resource constraints. Be strategic in prioritizing hypotheses that are both high potential impact and feasible to test given the available data.
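One lightweight way to avoid prioritization paralysis is to score each hypothesis on impact and feasibility and sort by the product. The 1 to 5 scales, the scores, and the multiplicative rule below are all assumptions for illustration; your team may prefer a different weighting.

```python
# Hypothetical 1-5 scores assigned during a team review.
hypotheses = [
    {"name": "Rain or snow lengthens trips", "impact": 4, "feasibility": 3},
    {"name": "Rush-hour trips are longest", "impact": 5, "feasibility": 5},
    {"name": "Recently rested drivers are faster", "impact": 2, "feasibility": 1},
]

def prioritize(items):
    """Order hypotheses by impact x feasibility, highest first."""
    return sorted(items, key=lambda h: h["impact"] * h["feasibility"], reverse=True)

ordered = prioritize(hypotheses)
for h in ordered:
    print(h["impact"] * h["feasibility"], h["name"])
```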

Continuing Your Hypothesis Generation Journey

We’ve covered a lot of ground in this post, but hypothesis generation is a skill that requires continuous practice and refinement. If you’re looking to further sharpen your abilities, here are some additional resources to check out:

- The free "Hypothesis Generation for Startups" course on Coursera

- The "Mastering Hypothesis-Driven Development" course on Pluralsight

- "How to Frame and Communicate Your Data Science Projects" post on Towards Data Science

- "The Art of Hypothesis Testing: A Psychology Perspective" chapter from the Wiley Handbook of Psychometric Testing

Remember, the most successful data scientists are those who balance technical acumen with deep curiosity and creative problem solving. By honing your hypothesis generation skills, you’ll be well on your way to driving outsized impact and becoming a trusted thought partner.

Scientific Hypotheses: Writing, Promoting, and Predicting Implications

Armen Yuri Gasparyan, Lilit Ayvazyan, Ulzhan Mukanova, Marlen Yessirkepov, George D Kitas

Address for Correspondence: Armen Yuri Gasparyan, MD. Departments of Rheumatology and Research and Development, Dudley Group NHS Foundation Trust (Teaching Trust of the University of Birmingham, UK), Russells Hall Hospital, Pensnett Road, Dudley, West Midlands DY1 2HQ, UK. [email protected]

Corresponding author.

Received 2019 Sep 2; Accepted 2019 Oct 28; Collection date 2019 Nov 25.

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License ( https://creativecommons.org/licenses/by-nc/4.0/ ) which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Scientific hypotheses are essential for progress in rapidly developing academic disciplines. Proposing new ideas and hypotheses requires thorough analyses of evidence-based data and predictions of the implications. One of the main concerns relates to the ethical implications of the generated hypotheses. The authors may need to outline potential benefits and limitations of their suggestions and target widely visible publication outlets to ignite discussion by experts and start testing the hypotheses. Not many publication outlets are currently welcoming hypotheses and unconventional ideas that may open gates to criticism and conservative remarks. A few scholarly journals guide the authors on how to structure hypotheses. Reflecting on general and specific issues around the subject matter is often recommended for drafting a well-structured hypothesis article. An analysis of influential hypotheses, presented in this article, particularly Strachan's hygiene hypothesis with global implications in the field of immunology and allergy, points to the need for properly interpreting and testing new suggestions. Envisaging the ethical implications of the hypotheses should be considered both by authors and journal editors during the writing and publishing process.

Keywords: Bibliographic Databases, Peer Review, Writing, Research Ethics, Hypothesis, Impact

INTRODUCTION

We live in times of digitization that radically changes scientific research, reporting, and publishing strategies. Researchers all over the world are overwhelmed with processing large volumes of information and searching through numerous online platforms, all of which make the whole process of scholarly analysis and synthesis complex and sophisticated.

Current research activities are diversifying to combine scientific observations with analysis of facts recorded by scholars from various professional backgrounds. 1 Citation analyses and networking on social media are also becoming essential for shaping research and publishing strategies globally. 2 Learning specifics of increasingly interdisciplinary research studies and acquiring information facilitation skills aid researchers in formulating innovative ideas and predicting developments in interrelated scientific fields.

Arguably, researchers are currently offered more opportunities than in the past for generating new ideas by performing their routine laboratory activities, observing individual cases and unusual developments, and critically analyzing published scientific facts. What they need at the start of their research is to formulate a scientific hypothesis that revisits conventional theories, real-world processes, and related evidence to propose new studies and test ideas in an ethical way. 3 Such a hypothesis can be of most benefit if published in an ethical journal with wide visibility and exposure to relevant online databases and promotion platforms.

Although hypotheses are crucially important for scientific progress, only a few highly skilled researchers formulate and eventually publish their innovative ideas per se. Understandably, in an increasingly competitive research environment, most authors would prefer to prioritize their ideas by discussing and conducting tests in their own laboratories or clinical departments, and publishing research reports afterwards. However, there are instances when simple observations and research studies in a single center are not capable of explaining and testing new groundbreaking ideas. Formulating hypothesis articles first and calling for multicenter and interdisciplinary research can be a solution in such instances, potentially launching influential scientific directions, if not academic disciplines.

The aim of this article is to overview the importance and implications of infrequently published scientific hypotheses that may open new avenues of thinking and research.

Despite the seemingly established views on innovative ideas and hypotheses as essential research tools, no structured definition exists to tag the term and systematically track related articles. In 1973, the Medical Subject Heading (MeSH) of the U.S. National Library of Medicine introduced “Research Design” as a structured keyword that referred to the importance of collecting data and properly testing hypotheses, and indirectly linked the term to ethics, methods and standards, among many other subheadings.

One of the experts in the field defines “hypothesis” as a well-argued analysis of available evidence to provide a realistic (scientific) explanation of existing facts, fill gaps in public understanding of sophisticated processes, and propose a new theory or a test. 4 A hypothesis can be proven wrong partially or entirely. However, even such an erroneous hypothesis may influence progress in science by initiating professional debates that help generate more realistic ideas. The main ethical requirement for hypothesis authors is to be honest about the limitations of their suggestions. 5

EXAMPLES OF INFLUENTIAL SCIENTIFIC HYPOTHESES

Daily routine in a research laboratory may lead to groundbreaking discoveries provided the daily accounts are comprehensively analyzed and reproduced by peers. The discovery of penicillin by Sir Alexander Fleming (1928) can be viewed as a prime example of such discoveries that introduced therapies to treat staphylococcal and streptococcal infections and modulate blood coagulation. 6,7 Penicillin gained worldwide recognition due to the inventor's seminal works published by highly prestigious and widely visible British journals, effective ‘real-world’ antibiotic therapy of pneumonia and wounds during World War II, and euphoric media coverage. 8 In 1945, Fleming, Florey and Chain received a much-deserved Nobel Prize in Physiology or Medicine for the discovery that led to the mass production of the wonder drug in the U.S. and ‘real-world practice’ that tested the use of penicillin. What remained globally unnoticed is that Zinaida Yermolyeva, the outstanding Soviet microbiologist, created the Soviet penicillin, which turned out to be more effective than the Anglo-American penicillin and entered mass production in 1943; that year marked the turning of the tide of the Great Patriotic War. 9 One reason Zinaida Yermolyeva's discovery went widely unnoticed is that her works were published exclusively by local Russian (Soviet) journals.

The past decades have been marked by an unprecedented growth of multicenter and global research studies involving hundreds and thousands of human subjects. This trend is shaped by an increasing number of reports on clinical trials and large cohort studies that create a strong evidence base for practice recommendations. Mega-studies may help generate and test large-scale hypotheses aiming to solve health issues globally. Properly designed epidemiological studies, for example, may introduce clarity to the hygiene hypothesis that was originally proposed by David Strachan in 1989. 10 David Strachan studied the epidemiology of hay fever in a cohort of 17,414 British children and concluded that declining family size and improved personal hygiene had reduced the chances of cross infections in families, resulting in epidemics of atopic disease in post-industrial Britain. Over the past four decades, several related hypotheses have been proposed to expand the potential role of symbiotic microorganisms and parasites in the development of human physiological immune responses early in life and protection from allergic and autoimmune diseases later on. 11 , 12 Given the popularity and the scientific importance of the hygiene hypothesis, it was introduced as a MeSH term in 2012. 13

Hypotheses can be proposed based on an analysis of recorded historic events that resulted in mass migrations and spreading of certain genetic diseases. As a prime example, familial Mediterranean fever (FMF), the prototype periodic fever syndrome, is believed to have spread from Mesopotamia to the Mediterranean region and all over Europe due to migrations and religious persecutions millennia ago. 14 Genetic mutations spearing mild clinical forms of FMF are hypothesized to emerge and persist in the Mediterranean region as protective factors against more serious infectious diseases, particularly tuberculosis, historically common in that part of the world. 15 The speculations over the advantages of carrying the MEditerranean FeVer (MEFV) gene are further strengthened by recorded low mortality rates from tuberculosis among FMF patients of different nationalities living in Tunisia in the first half of the 20th century. 16

Diagnostic hypotheses shedding light on peculiarities of diseases throughout the history of mankind can be formulated using artefacts, particularly historic paintings. 17 Such paintings may reveal joint deformities and disfigurements due to rheumatic diseases in individual subjects. A series of paintings with similar signs of pathological conditions interpreted in a historic context may uncover mysteries of epidemics of certain diseases, which is the case with Rubens' paintings depicting signs of rheumatic hands and leading some doctors to believe that rheumatoid arthritis was common in Europe in the 16th and 17th centuries. 18

WRITING SCIENTIFIC HYPOTHESES

The author instructions of a few journals specifically guide how to structure and format submissions categorized as hypotheses, and how to make them attractive. One of the examples is presented by Med Hypotheses, the flagship journal in its field with more than four decades of publishing and influencing hypothesis authors globally. However, such guidance is not based on widely discussed, implemented, and approved reporting standards, which are becoming mandatory for all scholarly journals.

Generating new ideas and scientific hypotheses is a sophisticated task since not all researchers and authors are skilled in planning, conducting, and interpreting various research studies. Some experience with formulating focused research questions and strong working hypotheses of original research studies is definitely helpful for advancing critical appraisal skills. However, aspiring authors of scientific hypotheses may need something different, which is more related to discerning scientific facts, pooling homogeneous data from primary research works, and synthesizing new information in a systematic way by analyzing similar sets of articles. To some extent, this activity is reminiscent of writing narrative and systematic reviews. As in the case of reviews, scientific hypotheses need to be formulated on the basis of comprehensive search strategies to retrieve all available studies on the topics of interest and then synthesize new information selectively referring to the most relevant items. One of the main differences between scientific hypothesis and review articles relates to the volume of supportive literature sources (Table 1). In fact, a hypothesis is usually formulated by referring to a few scientific facts or compelling evidence derived from a handful of literature sources. 19 By contrast, reviews require analyses of a large number of published documents retrieved from several well-organized and evidence-based databases in accordance with predefined search strategies. 20,21,22

Table 1. Characteristics of scientific hypotheses and narrative and systematic reviews.

The format of hypotheses, especially the implications part, may vary widely across disciplines. Clinicians may limit their suggestions to the clinical manifestations of diseases, outcomes, and management strategies. Basic and laboratory scientists analysing genetic, molecular, and biochemical mechanisms may need to view beyond the frames of their narrow fields and predict social and population-based implications of the proposed ideas. 23

Advanced writing skills are essential for presenting an interesting theoretical article which appeals to the global readership. Merely listing opposing facts and ideas, without proper interpretation and analysis, may distract the experienced readers. The essence of a great hypothesis is a story behind the scientific facts and evidence-based data.

ETHICAL IMPLICATIONS

The authors of hypotheses substantiate their arguments by referring to and discerning rational points from published articles that might be overlooked by others. Their arguments may contradict the established theories and practices, and pose global ethical issues, particularly when more or less efficient medical technologies and public health interventions are devalued. The ethical issues may arise primarily because of the careless references to articles with low priorities, inadequate and apparently unethical methodologies, and concealed reporting of negative results. 24 , 25

Misinterpretation and misunderstanding of the published ideas and scientific hypotheses may complicate the issue further. For example, Alexander Fleming, whose innovative ideas of penicillin use to kill susceptible bacteria saved millions of lives, warned of the consequences of uncontrolled prescription of the drug. The issue of antibiotic resistance had emerged within the first ten years of penicillin use on a global scale due to the overprescription that affected the efficacy of antibiotic therapies, with undesirable consequences for millions. 26

The misunderstanding of the hygiene hypothesis that primarily aimed to shed light on the role of the microbiome in allergic and autoimmune diseases resulted in a decline of public confidence in hygiene with dire societal implications, forcing some experts to abandon the original idea. 27,28 Although that hypothesis is unrelated to the issue of vaccinations, the public misunderstanding has resulted in a decline of vaccinations at a time of upsurge of old and new infections.

A number of ethical issues are posed by the denial of the viral (human immunodeficiency virus; HIV) hypothesis of acquired immune deficiency syndrome (AIDS) by Peter Duesberg, who overviewed the links between illicit recreational drugs and antiretroviral therapies with AIDS and refuted the etiological role of HIV. 29 That controversial hypothesis was rejected by several journals, but was eventually published without external peer review in Med Hypotheses in 2010. The publication itself raised concerns about the unconventional editorial policy of the journal, causing major perturbations and more scrutinized publishing policies by journals processing hypotheses.

WHERE TO PUBLISH HYPOTHESES

Although scientific authors are currently well informed and equipped with search tools to draft evidence-based hypotheses, there are still limited quality publication outlets calling for related articles. The journal editors may be hesitant to publish articles that do not adhere to any research reporting guidelines and open gates for harsh criticism of unconventional and untested ideas. Occasionally, the editors opting for open-access publishing and upgrading their ethics regulations launch a section to selectively publish scientific hypotheses attractive to the experienced readers. 30 However, the absence of approved standards for this article type, particularly no mandate for outlining potential ethical implications, may lead to publication of potentially harmful ideas in an attractive format.

A suggestion of simultaneously publishing multiple or alternative hypotheses to balance the reader views and feedback is a potential solution for the mainstream scholarly journals. 31 However, that option alone is hardly applicable to emerging journals with unconventional quality checks and peer review, accumulating papers with multiple rejections by established journals.

A large group of experts view hypotheses with improbable and controversial ideas as publishable after formal editorial (in-house) checks to preserve the authors' genuine ideas and avoid conservative amendments imposed by external peer reviewers. 32 That approach may be acceptable for established publishers with large teams of experienced editors. However, the same approach can lead to dire consequences if employed by nonselective start-up, open-access journals processing all types of articles and primarily accepting those that come with publication fees. 33 In fact, pseudoscientific ideas disputing Newton's and Einstein's seminal works or those denying climate change that are hardly testable have already found their niche in substandard electronic journals with soft or nonexistent peer review. 34

CITATIONS AND SOCIAL MEDIA ATTENTION

The available preliminary evidence points to the attractiveness of hypothesis articles for readers, particularly those from research-intensive countries who actively download related documents. 35 However, citations of such articles are disproportionately low. Only a small proportion of top-downloaded hypotheses (13%) in the highly prestigious Med Hypotheses receive on average 5 citations per article within a two-year window. 36

With the exception of a few historic papers, the vast majority of hypotheses attract a relatively small number of citations in the long term. 36 Plausible explanations are that these articles often contain a single or only a few citable points and that the suggested research studies to test hypotheses are rarely conducted and reported, limiting the chances of citing and crediting the authors of genuine research ideas.

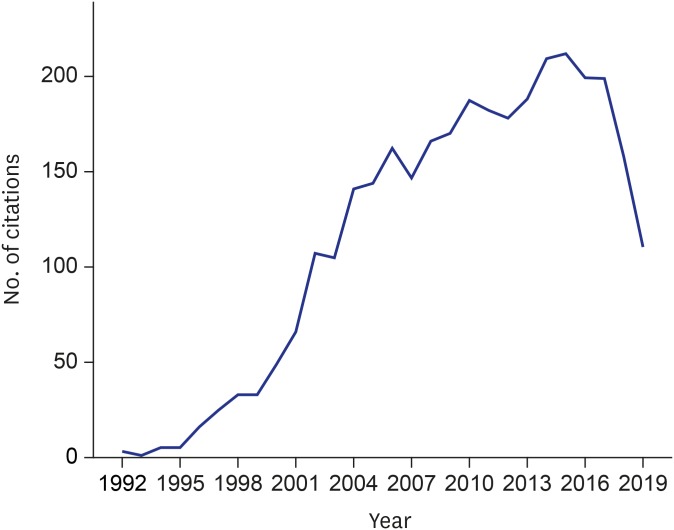

A snapshot analysis of the citation activity of hypothesis articles may reveal the interest of the global scientific community in their implications across various disciplines and countries. As a prime example, Strachan's hygiene hypothesis, published in 1989, 10 is still attracting numerous citations on Scopus, the largest bibliographic database. As of August 28, 2019, the number of linked citations in the database is 3,201. Of the citing articles, 160 are cited at least 160 times (h-index of this research topic = 160). The first three citations were recorded in 1992 and were followed by a rapid annual increase in citation activity and a peak of 212 in 2015 (Fig. 1). The top 5 sources of the citations are Clin Exp Allergy (n = 136), J Allergy Clin Immunol (n = 119), Allergy (n = 81), Pediatr Allergy Immunol (n = 69), and PLOS One (n = 44). The top 5 citing authors are leading experts in pediatrics and allergology: Erika von Mutius (Munich, Germany, number of publications with the index citation = 30), Erika Isolauri (Turku, Finland, n = 27), Patrick G Holt (Subiaco, Australia, n = 25), David P. Strachan (London, UK, n = 23), and Bengt Björksten (Stockholm, Sweden, n = 22). The U.S. is the leading country in terms of citation activity with 809 related documents, followed by the UK (n = 494), Germany (n = 314), Australia (n = 211), and the Netherlands (n = 177). The largest proportion of citing documents are articles (n = 1,726, 54%), followed by reviews (n = 950, 29.7%), and book chapters (n = 213, 6.7%). The main subject areas of the citing items are medicine (n = 2,581, 51.7%), immunology and microbiology (n = 1,179, 23.6%), and biochemistry, genetics and molecular biology (n = 415, 8.3%).

Fig. 1. Number of Scopus-indexed items citing Strachan's hygiene hypothesis in 1992–2019 (as of August 28, 2019).
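The h-index reported for this research topic follows the usual definition: the largest h such that h citing items have each been cited at least h times. A minimal sketch of that calculation is given below; the function name and the example counts are illustrative assumptions and are not taken from the Scopus analysis.

```python
def h_index(citation_counts):
    """Return the largest h such that h items have at least h citations each."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` items all have >= rank citations
        else:
            break
    return h


# Hypothetical citation counts for seven items (not data from the article).
example_counts = [25, 8, 5, 3, 3, 1, 0]
print(h_index(example_counts))
```

Applied to the real list of citation counts of the 3,201 citing items, the same function would be expected to reproduce the reported value of 160.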

Interestingly, a recent analysis of 111 publications related to Strachan's hygiene hypothesis, stating that the lack of exposure to infections in early life increases the risk of rhinitis, revealed a selection bias of 5,551 citations on Web of Science. 37 The articles supportive of the hypothesis were cited more than nonsupportive ones (odds ratio adjusted for study design, 2.2; 95% confidence interval, 1.6–3.1). A similar conclusion pointing to a citation bias distorting bibliometrics of hypotheses was reached by an earlier analysis of a citation network linked to the idea that β-amyloid, which is involved in the pathogenesis of Alzheimer disease, is produced by skeletal muscle of patients with inclusion body myositis. 38 The results of both studies are in line with the notion that ‘positive’ citations are more frequent in the field of biomedicine than ‘negative’ ones, and that citations to articles with proven hypotheses are too common. 39

Social media channels are playing an increasingly active role in the generation and evaluation of scientific hypotheses. In fact, publicly discussing research questions on platforms of news outlets, such as Reddit, may shape hypotheses on health-related issues of global importance, such as obesity. 40 Analyzing Twitter comments, researchers may reveal both potentially valuable ideas and unfounded claims that surround groundbreaking research ideas. 41 Social media activities, however, are unevenly distributed across different research topics, journals and countries, and these are not always objective professional reflections of the breakthroughs in science. 2 , 42

Scientific hypotheses are essential for progress in science and advances in healthcare. Innovative ideas should be based on a critical overview of related scientific facts and evidence-based data, often overlooked by others. To generate realistic hypothetical theories, authors should comprehensively analyze the literature and suggest relevant and ethically sound designs for future studies. They should also consider their hypotheses in the context of research and publication ethics norms acceptable for their target journals. Journal editors aiming to diversify their portfolio by maintaining or introducing a hypotheses section are in a position to upgrade guidelines for related articles by pointing to general and specific analyses of the subject, preferred study designs to test hypotheses, and ethical implications. The latter are closely related to the specifics of each hypothesis. For example, editorial recommendations to outline benefits and risks of a new laboratory test or therapy may result in a more balanced article and minimize associated risks afterwards.

Not all scientific hypotheses have immediate positive effects. Some, if not most, are never tested in properly designed research studies and never cited in credible and indexed publication outlets. Hypotheses in specialized scientific fields, particularly those hardly understandable for nonexperts, lose their attractiveness for an increasingly interdisciplinary audience. The authors' honest analysis of the benefits and limitations of their hypotheses and concerted efforts of all stakeholders in science communication to initiate public discussion on widely visible platforms and social media may reveal rational points and caveats of the new ideas.

Disclosure: The authors have no potential conflicts of interest to disclose.

- Conceptualization: Gasparyan AY, Yessirkepov M, Kitas GD.

- Methodology: Gasparyan AY, Mukanova U, Ayvazyan L.

- Writing - original draft: Gasparyan AY, Ayvazyan L, Yessirkepov M.

- Writing - review & editing: Gasparyan AY, Yessirkepov M, Mukanova U, Kitas GD.

1. O'Shea P. Future medicine shaped by an interdisciplinary new biology. Lancet. 2012;379(9825):1544–1550. doi: 10.1016/S0140-6736(12)60476-0.

2. Kolahi J, Khazaei S, Iranmanesh P, Soltani P. Analysis of highly tweeted dental journals and articles: a science mapping approach. Br Dent J. 2019;226(9):673–678. doi: 10.1038/s41415-019-0212-z.

3. Heidary F, Gharebaghi R. Surgical innovation, a niche and a need. Med Hypothesis Discov Innov Ophthalmol. 2012;1(4):65–66.

4. Bains W. Hypotheses, limits, models and life. Life (Basel). 2014;5(1):1–3. doi: 10.3390/life5010001.

5. Bains W. Hypotheses and humility: Ideas do not have to be right to be useful. Biosci Hypotheses. 2009;2(1):1–2.

6. Fleming A, Fish EW. Influence of penicillin on the coagulation of blood with especial reference to certain dental operations. BMJ. 1947;2(4519):242–243. doi: 10.1136/bmj.2.4519.242.

7. Bentley R. The development of penicillin: genesis of a famous antibiotic. Perspect Biol Med. 2005;48(3):444–452. doi: 10.1353/pbm.2005.0068.

8. Shama G. The role of the media in influencing public attitudes to penicillin during World War II. Dynamis. 2015;35(1):131–152. doi: 10.4321/s0211-95362015000100006.

9. The appearance of penicillin. Antibiotics killers. [Accessed August 28, 2019]. https://btvar.ru/en/faringit/the-appearance-of-penicillin-antibioticskillers.html

10. Strachan DP. Hay fever, hygiene, and household size. BMJ. 1989;299(6710):1259–1260. doi: 10.1136/bmj.299.6710.1259.

11. Bach JF. The effect of infections on susceptibility to autoimmune and allergic diseases. N Engl J Med. 2002;347(12):911–920. doi: 10.1056/NEJMra020100.

12. Bach JF. The hygiene hypothesis in autoimmunity: the role of pathogens and commensals. Nat Rev Immunol. 2018;18(2):105–120. doi: 10.1038/nri.2017.111.

13. Hygiene hypothesis. [Accessed August 28, 2019]. https://www.ncbi.nlm.nih.gov/mesh/?term=hygiene+hypothesis

14. Ben-Chetrit E, Levy M. Familial Mediterranean fever. Lancet. 1998;351(9103):659–664. doi: 10.1016/S0140-6736(97)09408-7.

15. Ozen S, Balci B, Ozkara S, Ozcan A, Yilmaz E, Besbas N, et al. Is there a heterozygote advantage for familial Mediterranean fever carriers against tuberculosis infections: speculations remain? Clin Exp Rheumatol. 2002;20(4) Suppl 26:S57–S58.

16. Cattan D. Familial Mediterranean fever: is low mortality from tuberculosis a specific advantage for MEFV mutations carriers? Mortality from tuberculosis among Muslims, Jewish, French, Italian and Maltese patients in Tunis (Tunisia) in the first half of the 20th century. Clin Exp Rheumatol. 2003;21(4) Suppl 30:S53–S54.

17. Chatzidionysiou K. Rheumatic disease and artistic creativity. Mediterr J Rheumatol. 2019;30(2):103–109. doi: 10.31138/mjr.30.2.103.

18. Appelboom T. Hypothesis: Rubens--one of the first victims of an epidemic of rheumatoid arthritis that started in the 16th-17th century? Rheumatology (Oxford). 2005;44(5):681–683. doi: 10.1093/rheumatology/keh252.

19. Wardle J, Rossi V. Medical hypotheses: a clinician's guide to publication. Adv Intern Med. 2016;3(1):37–40.

20. Gasparyan AY, Ayvazyan L, Blackmore H, Kitas GD. Writing a narrative biomedical review: considerations for authors, peer reviewers, and editors. Rheumatol Int. 2011;31(11):1409–1417. doi: 10.1007/s00296-011-1999-3.

21. Methley AM, Campbell S, Chew-Graham C, McNally R, Cheraghi-Sohi S. PICO, PICOS and SPIDER: a comparison study of specificity and sensitivity in three search tools for qualitative systematic reviews. BMC Health Serv Res. 2014;14(1):579. doi: 10.1186/s12913-014-0579-0.

22. Misra DP, Agarwal V. Systematic reviews: challenges for their justification, related comprehensive searches, and implications. J Korean Med Sci. 2018;33(12):e92. doi: 10.3346/jkms.2018.33.e92.

23. Heidary F, Gharebaghi R. Welcome to beautiful mind; a call to action. Med Hypothesis Discov Innov Ophthalmol. 2012;1(1):1–2.

24. Erren TC, Shaw DM, Groß JV. How to avoid haste and waste in occupational, environmental and public health research. J Epidemiol Community Health. 2015;69(9):823–825. doi: 10.1136/jech-2015-205543.

25. Ruxton GD, Mulder T. Unethical work must be filtered out or flagged. Nature. 2019;572(7768):171–172. doi: 10.1038/d41586-019-02378-x.

26. Rosenblatt-Farrell N. The landscape of antibiotic resistance. Environ Health Perspect. 2009;117(6):A244–A250. doi: 10.1289/ehp.117-a244.

27. Patki A. Eat dirt and avoid atopy: the hygiene hypothesis revisited. Indian J Dermatol Venereol Leprol. 2007;73(1):2–4. doi: 10.4103/0378-6323.30642.

28. Bloomfield SF, Rook GA, Scott EA, Shanahan F, Stanwell-Smith R, Turner P. Time to abandon the hygiene hypothesis: new perspectives on allergic disease, the human microbiome, infectious disease prevention and the role of targeted hygiene. Perspect Public Health. 2016;136(4):213–224. doi: 10.1177/1757913916650225.

29. Goodson P. Questioning the HIV-AIDS hypothesis: 30 years of dissent. Front Public Health. 2014;2:154. doi: 10.3389/fpubh.2014.00154. [Retracted]

30. Abatzopoulos TJ. A new era for Journal of Biological Research-Thessaloniki. J Biol Res (Thessalon). 2014;21(1):1. doi: 10.1186/2241-5793-21-1.

31. Rosen J. Research protocols: a forest of hypotheses. Nature. 2016;536(7615):239–241. doi: 10.1038/nj7615-239a.

32. Steinhauser G, Adlassnig W, Risch JA, Anderlini S, Arguriou P, Armendariz AZ, et al. Peer review versus editorial review and their role in innovative science. Theor Med Bioeth. 2012;33(5):359–376. doi: 10.1007/s11017-012-9233-1.

33. Eriksson S, Helgesson G. The false academy: predatory publishing in science and bioethics. Med Health Care Philos. 2017;20(2):163–170. doi: 10.1007/s11019-016-9740-3.

34. Beall J. Dangerous predatory publishers threaten medical research. J Korean Med Sci. 2016;31(10):1511–1513. doi: 10.3346/jkms.2016.31.10.1511.

35. Bazrafshan A, Haghdoost AA, Zare M. A comparison of downloads, readership and citations data for the Journal of Medical Hypotheses and Ideas. J Med Hypotheses Ideas. 2015;9(1):1–4.

36. Zavos C, Kountouras J, Zavos N, Paspatis GA, Kouroumalis EA. Predicting future citations of a research paper from number of its internet downloads: the Medical Hypotheses case. Med Hypotheses. 2008;70(2):460–461. doi: 10.1016/j.mehy.2007.06.001.

37. Duyx B, Urlings MJ, Swaen GM, Bouter LM, Zeegers MP. Selective citation in the literature on the hygiene hypothesis: a citation analysis on the association between infections and rhinitis. BMJ Open. 2019;9(2):e026518. doi: 10.1136/bmjopen-2018-026518.

38. Greenberg SA. How citation distortions create unfounded authority: analysis of a citation network. BMJ. 2009;339:b2680. doi: 10.1136/bmj.b2680.

39. Duyx B, Urlings MJ, Swaen GM, Bouter LM, Zeegers MP. Scientific citations favor positive results: a systematic review and meta-analysis. J Clin Epidemiol. 2017;88:92–101. doi: 10.1016/j.jclinepi.2017.06.002.

40. Bevelander KE, Kaipainen K, Swain R, Dohle S, Bongard JC, Hines PD, et al. Crowdsourcing novel childhood predictors of adult obesity. PLoS One. 2014;9(2):e87756. doi: 10.1371/journal.pone.0087756.

41. Castelvecchi D. Physicists doubt bold superconductivity claim following social-media storm. Nature. 2018;560(7720):539–540. doi: 10.1038/d41586-018-06023-x.

42. Kolahi J, Khazaei S. Altmetric analysis of contemporary dental literature. Br Dent J. 2018;225(1):68–72. doi: 10.1038/sj.bdj.2018.521.


This is a preprint.

It has not yet been peer reviewed by a journal.

The National Library of Medicine is running a pilot to include preprints that result from research funded by NIH in PMC and PubMed.

Data-driven hypothesis generation among inexperienced clinical researchers: A comparison of secondary data analyses with visualization (VIADS) and other tools

James J Cimino, Vimla L Patel, Yuchun Zhou, Jay H Shubrook, Sonsoles De Lacalle, Brooke N Draghi, Mytchell A Ernst, Aneesa Weaver, Shriram Sekar.


This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License , which allows reusers to copy and distribute the material in any medium or format in unadapted form only, for noncommercial purposes only, and only so long as attribution is given to the creator.

Objectives:

To compare how clinical researchers generate data-driven hypotheses with a visual interactive analytic tool (VIADS, a visual interactive analysis tool for filtering and summarizing large data sets coded with hierarchical terminologies) or other tools.

Methods:

We recruited clinical researchers and separated them into “experienced” and “inexperienced” groups. Within each experience group, participants were randomly assigned to a VIADS or control group. Each participant conducted a remote 2-hour study session for hypothesis generation with the same study facilitator on the same datasets by following a think-aloud protocol. Screen activities and audio were recorded, transcribed, coded, and analyzed. Hypotheses were evaluated by seven experts on their validity, significance, and feasibility. We conducted multilevel random effect modeling for statistical tests.

Results:

Eighteen participants generated 227 hypotheses, of which 147 (65%) were valid. The VIADS and control groups generated a similar number of hypotheses. The VIADS group took a significantly shorter time to generate one hypothesis (e.g., among inexperienced clinical researchers, 258 seconds versus 379 seconds, p = 0.046, power = 0.437, ICC = 0.15). The VIADS group received significantly lower ratings than the control group on feasibility and the combination rating of validity, significance, and feasibility.

Conclusion:

The role of VIADS in hypothesis generation seems inconclusive. The VIADS group took a significantly shorter time to generate each hypothesis. However, the combined validity, significance, and feasibility ratings of their hypotheses were significantly lower. Further characterization of hypotheses, including specifics on how they might be improved, could guide future tool development.

Keywords: scientific hypothesis generation, clinical research, VIADS, utility study, secondary data analysis tools

Introduction

A scientific hypothesis is an educated guess regarding the relationships among several variables [ 1 , 2 ]. A hypothesis is a fundamental component of a research question [ 3 ], which typically can be answered by testing one or several hypotheses [ 4 ]. A hypothesis is critical for any research project; it determines its direction and impact. Many studies focusing on scientific research have made significant progress in scientific [ 5 , 6 ] and medical reasoning [ 7 – 11 ], problem-solving, analogy, working memory, and learning and thinking in educational contexts [ 12 ]. However, most of these studies begin with a question and focus on scientific reasoning [ 14 ], medical diagnosis, or differential diagnosis [ 10 , 15 , 16 ]. Henry and colleagues named them as open or closed discoveries in the literature-mining context [ 18 ]. The reasoning mechanisms and processes used in solving an existing puzzle are critical; however, the current literature provides limited information about the scientific hypothesis generation process [ 4 – 6 ], which is to identify the focused area to start with, not the hypotheses generated to solve existing problems.

There have been attempts to generate hypotheses automatically using text mining, literature mining, knowledge discovery, natural language processing techniques, Semantic Web technology, or machine learning methods to reveal new relationships among diseases, genes, proteins, and conditions [ 19 – 23 ]. Many of these efforts were based on Swanson’s ABC Model [ 24 – 26 ]. Several research teams explored automatic literature systems for generating [ 28 , 29 ] and validating [ 30 ] or enriching hypotheses [ 31 ]. However, the studies recognized the complexity of the hypothesis generation process and concluded that it does not seem feasible to generate hypotheses completely automatically [ 19 – 21 , 24 , 32 ]. In addition, hypothesis generation is not just identifying new relationships, although a new connection is a critical component of hypothesis generation. Other literature-related efforts include adding temporal dimensions to machine learning models to predict connections between terms [ 33 , 34 ] or evaluating hypotheses using knowledge bases and Semantic Web technology [ 32 , 35 ]. Understanding how humans use such systems to generate hypotheses in practice may provide unique insights into scientific hypothesis generation, which can help system developers better automate systems to facilitate the process.

Many researchers believe that their secondary data analytical tools (such as VIADS, a visual interactive analytic tool for filtering and summarizing large health data sets coded with hierarchical terminologies [ 36 – 39 ]) can facilitate hypothesis generation [ 40 , 41 ]. Whether these tools work as expected, and how, has not been systematically investigated. Data-driven hypothesis generation is a critical first step in clinical and translational research projects [ 42 ]. Therefore, we conducted a study to investigate if and how VIADS can facilitate generating data-driven hypotheses among clinical researchers. We recorded their hypothesis generation process and compared the results of those who used and did not use VIADS. Hypothesis quality evaluation is usually conducted as part of a larger work, e.g., the evaluation of a scientific paper or a research grant proposal. Therefore, there are no existing metrics for hypothesis quality evaluation. We developed our own quality metrics to evaluate hypotheses [ 1 , 3 , 4 , 43 – 49 ] through iterative internal and external validation [ 43 , 44 ]. This paper is a study of scientific hypothesis generation by clinical researchers with or without VIADS, including the quality evaluation, quantitative measurement of the hypotheses, and an analysis of the responses to follow-up questions. This is a randomized human participant study; however, per the definition of the National Institutes of Health, it is not a clinical trial. Therefore, we did not register it on ClinicalTrials.gov.

Research question and hypothesis

Can secondary data analytic tools, e.g., VIADS, facilitate the hypothesis generation process?

We hypothesize there will be group differences between clinical researchers who use VIADS in generating hypotheses and those who do not.

Rationale of the research question

Many researchers believe that new analytical tools offer opportunities to reveal further insights and new patterns in existing data to facilitate hypothesis generation [ 1 , 28 , 41 , 42 , 50 ]. We developed the underlying algorithms (which determine what VIADS can do) [ 38 , 39 ] and the publicly accessible online tool, VIADS [ 37 , 51 , 52 ], to provide new ways of summarizing, comparing, and visualizing datasets. In this study, we explored the utility of VIADS.

Study design

We conducted a 2 × 2 study. We divided participants into four groups: inexperienced clinical researchers without VIADS (group 1; participants were free to use any other analytical tools), inexperienced clinical researchers with VIADS (group 2), experienced clinical researchers without VIADS (group 3), and experienced clinical researchers with VIADS (group 4). The main differences between experienced and inexperienced clinical researchers were years of experience in conducting clinical research and the number of publications as significant contributors [ 53 ].

A pilot study, involving two participants and four study sessions, was conducted before we finalized the study datasets ( Supplemental Material 1 ), training material ( Supplemental Material 2 ), study scripts ( Supplemental Material 3 ), follow-up surveys ( Supplemental Material 4 & 5 ), and study session flow. Afterward, we recruited clinical researchers for the study sessions.

Recruitment

We recruited study participants through local, national, and international platforms, including American Medical Informatics Association (AMIA) mailing lists for working groups (e.g., clinical research informatics, clinical information system, implementation, clinical decision support, and Women in AMIA), N3C [ 54 ] study network Slack channels, South Carolina Clinical and Translational Research Institute newsletter, guest lectures and invited presentations in peer-reviewed conferences (e.g., MIE 2022), and other internal research related newsletters. All collaborators of the investigation team shared the recruitment invitations with their colleagues. Based on the experience level and our block randomization list, the participants were assigned to the VIADS or non-VIADS groups. After scheduling, the study script and IRB-approved consent forms were shared with participants. The datasets were shared on the study date. All participants received compensation based on the time they spent.
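As an illustration of how a block randomization list stratified by experience level can be prepared, the sketch below builds one list per stratum. The block size (one VIADS and one control slot per block), the number of blocks, the seeds, and the function name are assumptions made for the example; the source does not report these details.

```python
import random


def block_randomization_list(n_blocks, arms=("VIADS", "control"), seed=None):
    """Build an assignment list from shuffled blocks that each contain every arm once.

    Assumption: block size equals the number of arms (two here).
    """
    rng = random.Random(seed)
    assignments = []
    for _ in range(n_blocks):
        block = list(arms)
        rng.shuffle(block)  # random order within the block
        assignments.extend(block)
    return assignments


# One list per experience stratum (hypothetical number of blocks and seeds).
randomization_lists = {
    "inexperienced": block_randomization_list(n_blocks=5, seed=1),
    "experienced": block_randomization_list(n_blocks=5, seed=2),
}

# The k-th enrolled participant in a stratum takes the k-th entry of its list.
for level, assignments in randomization_lists.items():
    print(level, assignments)
```

Because every block contains each arm once, the two arms stay balanced within each stratum after every completed block, even when recruitment stops early.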

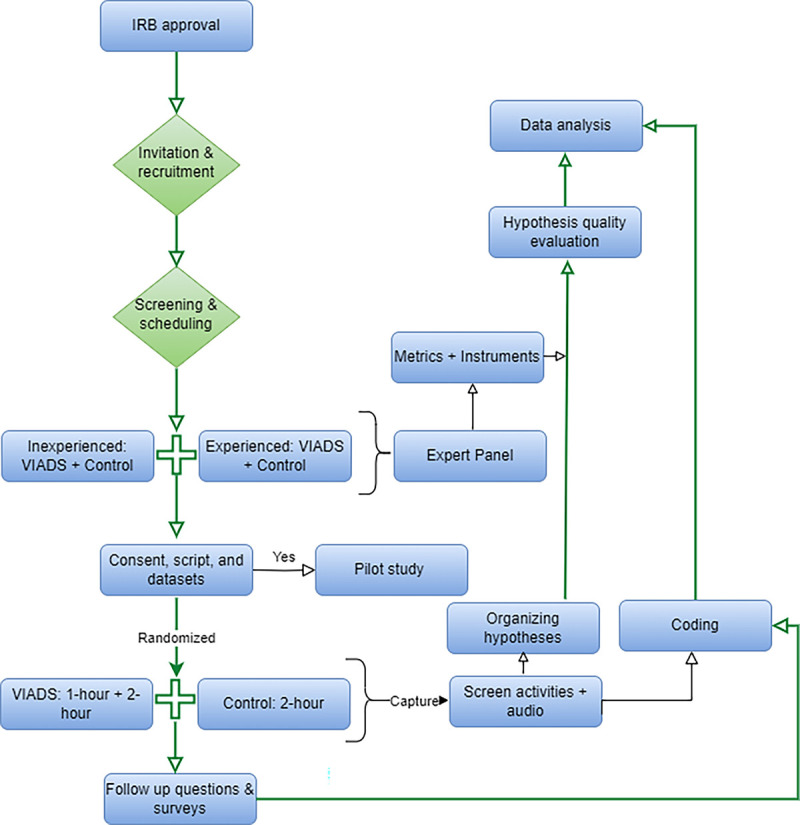

Every study participant used the same datasets and followed the same study scripts. The same study facilitator conducted all study sessions. For the VIADS groups, we scheduled a one-hour training session. All groups had a study session lasting a maximum of 2 hours. During the training session, the study facilitator demonstrated how to use VIADS, and then the participants demonstrated their own use of VIADS. During the study session, participants analyzed datasets with VIADS or other tools to develop hypotheses by following the think-aloud protocol. The study facilitator asked questions, provided reminders, and acted as a colleague to the participants. All training and study sessions were conducted remotely via WebEx meetings. Figure 1 shows the study flow.

Figure 1. Study flow for the data-driven hypothesis generation

During the study session, all the screen activities and conversations were recorded via FlashBB and converted to audio files for professional transcription. At the end of each study session, the study facilitator asked follow-up questions about the participants’ experiences creating and capturing new research ideas. The participants in the VIADS groups also completed two follow-up Qualtrics surveys; one was about the participant and questions on how to facilitate the hypothesis generation process better ( Supplemental Material 4 ), and the other evaluated VIADS usability with a modified version of the System Usability Scale ( Supplemental Material 5 ). The participants in the non-VIADS groups received one follow-up Qualtrics survey ( Supplemental Material 4 ).

Hypothesis evaluation

We developed a complete version and a brief version of a hypothesis quality evaluation instrument ( Supplemental Material 6 ) based on hypothesis quality evaluation metrics. We recruited a clinical research expert panel with four external members and three senior project advisors from our investigation team with clinical research backgrounds to validate the instruments. Their detailed eligibility criteria were published [ 53 ]. The expert panel evaluated the quality of all hypotheses generated by participants. In Phase 1, the full version of the instrument was used to evaluate 30 randomly selected hypotheses, and the evaluation results enabled us to develop a brief version of the instrument [ 43 ] ( Supplemental Material 7 , including three dimensions: validity, significance, and feasibility); Phase 2 used the brief instrument to evaluate the remaining hypotheses. Each dimension used a 5-point scale, from 1 (the lowest) to 5 (the highest). Therefore, for each hypothesis, the total raw score could range between 3 and 15. In our descriptive results and statistical tests, we used the averages of these scores (i.e., total raw score/3). Like the scores for each dimension, the averaged results ranged from 1 to 5.
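The scoring arithmetic described above can be sketched as follows. The function and variable names are illustrative assumptions; only the three dimensions, the 1 to 5 scale, and the division by 3 come from the text.

```python
DIMENSIONS = ("validity", "significance", "feasibility")


def combined_rating(ratings):
    """Return (total raw score, averaged score) for one rating of one hypothesis.

    `ratings` maps each dimension to a score on the 5-point scale (1 to 5).
    """
    for dim in DIMENSIONS:
        if not 1 <= ratings[dim] <= 5:
            raise ValueError(f"{dim} must be between 1 and 5, got {ratings[dim]}")
    total_raw = sum(ratings[dim] for dim in DIMENSIONS)  # ranges from 3 to 15
    return total_raw, total_raw / len(DIMENSIONS)        # average ranges from 1 to 5


# Hypothetical rating of a single hypothesis by one expert.
total, average = combined_rating({"validity": 4, "significance": 3, "feasibility": 2})
print(total, average)
```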

We generated a random list for all hypotheses. Then, based on the random list, we put ten randomly selected hypotheses into one Qualtrics survey for quality evaluation. We initiated the quality evaluation process after the completion of all the study sessions, allowing all hypotheses to be included in the generation of the random list.
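One way to produce such a random list and split it into surveys of ten is sketched below. The identifiers, the seed, and the function name are hypothetical, and the handling of a final, shorter batch is an assumption, since the source does not say how a remainder was treated.

```python
import random


def survey_batches(hypothesis_ids, batch_size=10, seed=None):
    """Shuffle the hypotheses once, then cut the random list into survey-sized batches."""
    rng = random.Random(seed)
    shuffled = list(hypothesis_ids)
    rng.shuffle(shuffled)
    return [shuffled[i:i + batch_size] for i in range(0, len(shuffled), batch_size)]


# Hypothetical numeric IDs standing in for the 227 hypotheses reported in the study.
batches = survey_batches(range(1, 228), seed=0)
print(len(batches), len(batches[-1]))
```

Shuffling only after all study sessions are complete, as the text describes, ensures that every hypothesis has the same chance of landing in any survey.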

Data analysis plan on hypothesis quality evaluation

Our data analysis focuses on the quality and quantity of hypotheses generated by participants. We conducted multilevel random intercept effect modeling in MPlus 7 to compare the VIADS group and the control group on the following items: the quality of the hypotheses, the number of hypotheses, and the time needed to generate each hypothesis by each participant. We also examined the correlations between the hypothesis quality ratings and the participant’s self-perceived creativity.

We first analyzed all hypotheses to explore the aggregated results. A second analysis was conducted using only valid hypotheses, after removing any hypothesis that was scored at “1” (the lowest rating) for validity by three or more experts. We include both sets of results in this paper. The usability results of VIADS were published separately [ 36 ].
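The exclusion rule for the second analysis can be expressed as a short filter. This is a minimal sketch with hypothetical names and made-up expert scores; only the rule itself (a validity score of 1 from three or more experts makes a hypothesis invalid) is taken from the text.

```python
def is_valid(validity_scores, lowest=1, threshold=3):
    """A hypothesis is invalid when `threshold` or more experts gave the lowest validity score."""
    lowest_votes = sum(1 for score in validity_scores if score == lowest)
    return lowest_votes < threshold


# Hypothetical validity scores from seven experts for two hypotheses.
ratings_by_hypothesis = {
    "H1": [1, 1, 1, 4, 5, 3, 2],  # three experts scored 1, so H1 is removed
    "H2": [1, 1, 2, 3, 4, 5, 5],  # only two experts scored 1, so H2 is kept
}
valid_ids = [hid for hid, scores in ratings_by_hypothesis.items() if is_valid(scores)]
print(valid_ids)
```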

All hypotheses were coded by two research assistants who worked separately and independently. They coded the time needed for each hypothesis and cognitive events during hypothesis generation. The coding principles ( Supplemental Material 8 ) were developed as the two research assistants worked. Whenever there was a discrepancy, a third member of the investigation team joined the discussion to reach a consensus by refining the coding principles.

Ethical statement

Our study was approved by the Institutional Review Boards (IRB) of Clemson University, South Carolina (IRB2020-056) and Ohio University (18-X-192).

Participant demographics

We screened 39 researchers, of whom 20 participated; two of these took part in the pilot study. Participants were from different locations and institutions in the United States. Among the 18 study participants, 15 were inexperienced clinical researchers and three were experienced. The experienced clinical researchers were underrepresented, and their results were mainly for informational purposes. Table 1 presents the background information of the participants.

Table 1. Profile of the eighteen participating clinical researchers

Note: -, no value.

Expert panel composition and intraclass correlation coefficient (ICC)

Seven experts validated the metrics and instruments [ 43 , 44 ] and evaluated the hypotheses using the instruments. Each expert was from a different institution in the United States. Five had medical backgrounds, three of whom were in clinical practice, and two had research methodology expertise. They all had at least 10 years of clinical research experience. For the hypothesis quality evaluation, the ICC of the seven experts was moderate, at 0.49.

Hypothesis quality and quantity evaluation results

The 18 participants generated 227 hypotheses during the study sessions. There were 80 invalid hypotheses and 147 (65%) valid hypotheses. They were all used separately for further analysis and comparison. Of these 147, 121 were generated by inexperienced clinical researchers (n = 15) in the VIADS (n = 8) and control (n = 7) groups.

Table 2 shows the descriptive hypothesis quality evaluation results for the VIADS and control groups. We used four analytic strategies: valid hypotheses by inexperienced clinical researchers (n = 121), valid hypotheses by inexperienced and experienced clinical researchers (n = 147), all hypotheses by inexperienced clinical researchers (n = 192), and all hypotheses by inexperienced and experienced clinical researchers (n = 227).

Expert panel quality rating results for hypotheses generated by VIADS and control groups

Note : valid. → valid hypotheses; all. → all hypotheses; SD, standard deviation. Each hypothesis was rated on a 5-point scale, from 1 (the lowest) to 5 (the highest) in each dimension.

Table 3 shows the results of random intercept effect modeling of the hypothesis quality ratings. The four analytic strategies generated similar results. The VIADS group received slightly lower validity, significance, and feasibility scores, but the differences in validity and significance were not statistically significant under any analytic strategy. However, the feasibility scores of the VIADS group were statistically significantly lower ( p < 0.01), regardless of the analytic strategy. Using the random intercept effect model, the combined validity, significance, and feasibility ratings of the VIADS group were statistically significantly lower than those of the control group for three of four analytic strategies ( p < 0.05). This was most likely due to the differences in feasibility ratings between the VIADS and control groups. Across all four analytic strategies, there was a statistically significant random intercept effect on validity between participants. When all hypotheses and all participants were considered, there was also a statistically significant random intercept effect on significance between participants.
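For readers less familiar with random intercept models, a generic two-level form is sketched below. This is a textbook specification given under our own assumptions, not necessarily the exact model fitted in the study: the symbols $y_{ij}$, $\beta_0$, $u_j$, $\varepsilon_{ij}$, $\sigma_u^2$, and $\sigma_\varepsilon^2$ are introduced here for illustration, and any additional covariates or rater effects are omitted. $\beta$ corresponds to the group mean difference reported in the table notes.

```latex
% y_{ij}: quality rating of hypothesis i generated by participant j (illustrative notation)
% VIADS_j: 1 if participant j was in the VIADS group, 0 if in the control group
\begin{aligned}
y_{ij} &= \beta_0 + \beta\,\mathrm{VIADS}_j + u_j + \varepsilon_{ij},\\
u_j &\sim \mathcal{N}(0,\sigma_u^2), \qquad \varepsilon_{ij} \sim \mathcal{N}(0,\sigma_\varepsilon^2),\\
\mathrm{ICC} &= \frac{\sigma_u^2}{\sigma_u^2 + \sigma_\varepsilon^2}.
\end{aligned}
```

Here $\sigma_u^2$ is the between-participants variance and $\sigma_\varepsilon^2$ the within-participants variance, so a significant random intercept effect means that participants differ systematically in their average ratings beyond what group membership explains.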

Multilevel random intercept modeling results on hypotheses quality ratings for different strategies

Note : valid. → valid hypotheses; all. → all hypotheses; Parti. → participants; ICC: intraclass correlation coefficient. β , group mean difference between VIADS and control groups in their quality ratings of hypotheses; ICC (for random effect modeling) = between-participants variance/(between-participants variance + within-participants variance).
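To make the ICC definition in the note above concrete, the following minimal sketch estimates the two variance components from ratings grouped by participant and forms their ratio. It uses the classical one-way ANOVA (method of moments) estimator, which is a swapped-in simplification: the study's models were presumably fitted with mixed-model software, and the function name `icc_oneway` and the toy ratings are hypothetical, not study data.

```python
from typing import Dict, List


def icc_oneway(groups: Dict[str, List[float]]) -> float:
    """ICC = between-participants variance / (between + within variance).

    Variance components come from a one-way random-effects ANOVA
    (method of moments), which also handles unequal group sizes.
    """
    sizes = [len(values) for values in groups.values()]
    k = len(groups)          # number of participants
    n_total = sum(sizes)     # number of rated hypotheses
    if k < 2 or min(sizes) < 1 or n_total <= k:
        raise ValueError("need >= 2 non-empty groups and more ratings than groups")

    means = {name: sum(v) / len(v) for name, v in groups.items()}
    grand_mean = sum(sum(v) for v in groups.values()) / n_total

    ss_between = sum(len(v) * (means[name] - grand_mean) ** 2
                     for name, v in groups.items())
    ss_within = sum((x - means[name]) ** 2
                    for name, v in groups.items() for x in v)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)

    # Effective group size for unbalanced data.
    n0 = (n_total - sum(s * s for s in sizes) / n_total) / (k - 1)

    var_between = max(0.0, (ms_between - ms_within) / n0)  # truncate at zero
    var_within = ms_within
    total = var_between + var_within
    return var_between / total if total > 0 else 0.0


# Hypothetical combined quality scores (out of 15) for three participants.
toy_ratings = {"p1": [9.0, 10.0, 11.0], "p2": [6.0, 7.0, 8.0], "p3": [8.0, 9.0, 10.0]}
print(f"ICC = {icc_oneway(toy_ratings):.2f}")
```

An ICC near 0 indicates that almost all rating variance lies within participants, whereas an ICC near 1 indicates that ratings are dominated by differences between participants.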

In addition to quality ratings, we also compared the number of hypotheses and the time needed for each participant to generate a hypothesis between groups. The inexperienced clinical researchers in the VIADS group and control group generated a similar number of valid hypotheses. Those in the VIADS group generated between 1 and 19 valid hypotheses in 2 hours (mean 8.43) and those in the control group generated 2 to 13 (mean 7.63). The inexperienced clinical researchers in the VIADS group took 102–610 seconds to generate a valid hypothesis (mean, 278.6 s), and those in the control group took 250–566 seconds (mean, 358.2 s).
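As a quick arithmetic illustration of the descriptive means above, the short sketch below computes the absolute and relative difference in mean time per valid hypothesis. It uses only the two means reported in this paragraph; the variable and function names are ours, and the result is a descriptive comparison, not the model-based estimate reported in Table 4.

```python
# Descriptive means reported above for inexperienced clinical researchers.
viads_mean_s = 278.6    # mean seconds per valid hypothesis, VIADS group
control_mean_s = 358.2  # mean seconds per valid hypothesis, control group


def relative_reduction(treated: float, reference: float) -> float:
    """Fraction by which `treated` is smaller than `reference`."""
    return (reference - treated) / reference


diff_s = control_mean_s - viads_mean_s
rel = relative_reduction(viads_mean_s, control_mean_s)
print(f"difference: {diff_s:.1f} s ({rel:.1%} shorter)")
# prints "difference: 79.6 s (22.2% shorter)"
```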

Table 4 shows the random intercept modeling results of our comparison of the time needed to generate hypotheses using the four different strategies. On average, the VIADS group required significantly less time ( p < 0.05) to generate a hypothesis regardless of the analytic strategy used. The results were consistent with those obtained from the analysis of all hypotheses by independent t-test [ 55 ]. There were no statistically significant random intercept effects between participants, regardless of the analytic strategy used ( Table 4 ).

Multilevel random intercept modeling results on time used to generate hypotheses for different strategies

Note : valid. → valid hypotheses; all. → all hypotheses; Parti. → participants; ICC: intraclass correlation coefficient; β , group mean difference between VIADS and control groups in the time used to generate each hypothesis on average; ICC (for random effect modeling) = between-participants variance/(between-participants variance + within-participants variance)

Experienced clinical researchers

There were three experienced clinical researchers among the participants, two in the VIADS group and one in the control group. The experienced clinical researchers in the VIADS group generated 12 (average time, 215 s/hypothesis) and 3 (average time, 407 s/hypothesis) valid hypotheses in 2 hours. The experienced clinical researcher in the control group generated 12 valid hypotheses (average time, 413 s/hypothesis). The experienced clinical researchers in the VIADS group received average quality scores of 8.51 (SD, 1.18) and 7.04 (SD, 0.3), while the experienced clinical researcher in the control group received an average quality score of 9.84 (SD, 0.81), out of 15 per hypothesis. These results are reported for informational purposes only.

Follow-up questions

The follow-up questions comprised three parts: verbal questions asked by the study facilitator, a follow-up survey for all participants, and a System Usability Scale (SUS) survey for the VIADS group participants [ 36 ]. The results from the first two parts are summarized below.

The verbal questions and a summary of the answers are presented in Table 5 . Reading and interacting with others were the activities most often used to generate new research ideas. Attending conferences, seminars, and educational events, and conducting clinical practice were also important in generating hypotheses. No specific tools were used to capture hypotheses or research ideas initially. Most participants used text documents in Microsoft Word, text messages, emails, or sticky notes to summarize their initial ideas.

Follow-up questions (verbal) and answers after each study session (all study participants)

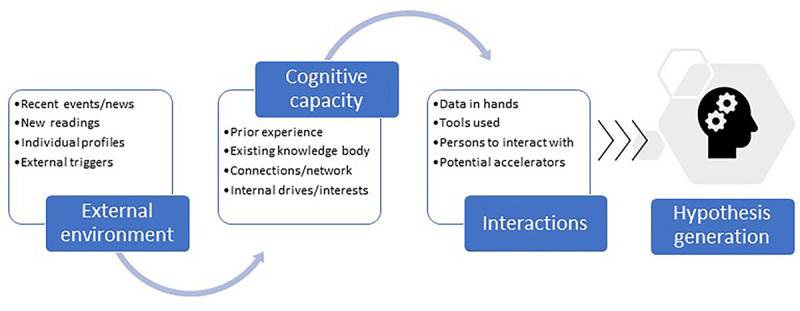

Figure 2 is a scientific hypothesis generation framework we developed based on literature [ 1 , 3 , 4 , 45 , 56 , 57 ], follow-up questions and answers after study sessions, and self-reflection on our research project trajectories. The external environment, cognitive capacity, and interactions between the individual and the external world, especially the tools used, are categories of critical factors that significantly contribute to hypothesis generation. Cognitive capacity takes a long time to change, and the external environment can be unpredictable. The tools that can interact with existing datasets are one of the modifiable factors in the hypothesis generation framework and this is what we aimed to test in this study.

Scientific hypothesis generation framework: Contributing factors

One follow-up question was about the self-perceived creativity of the study participants. Among inexperienced clinical researchers, the average hypothesis quality rating score per participant did not correlate with self-perceived creativity ( p = 0.616, two-tailed Pearson correlation test) or with the number of valid hypotheses generated ( p = 0.683, two-tailed Pearson correlation test). Likewise, among inexperienced clinical researchers, the highest and lowest 10 ratings did not correlate with the individual’s self-perceived creativity, regardless of whether VIADS was used.
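The correlation tests above rest on the Pearson coefficient r and its associated t statistic with n − 2 degrees of freedom. The sketch below computes both using only the Python standard library; the function names and the toy values are hypothetical and are not study data. Converting t to a two-tailed p value requires the Student t distribution, which a statistics package (for example, `scipy.stats.pearsonr`) provides.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation coefficient."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("x and y must have equal length of at least 3")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant input")
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t statistic for H0: no correlation, with n - 2 degrees of freedom."""
    if abs(r) >= 1.0:
        return math.copysign(math.inf, r)
    return r * math.sqrt((n - 2) / (1.0 - r * r))


# Hypothetical toy values: self-rated creativity vs. mean quality score.
creativity = [1.0, 2.0, 3.0, 4.0, 5.0]
quality = [2.0, 1.0, 4.0, 3.0, 5.0]
r = pearson_r(creativity, quality)
t = t_statistic(r, len(creativity))
print(f"r = {r:.2f}, t = {t:.2f}, df = {len(creativity) - 2}")
```

With samples as small as the 15 inexperienced participants here, even moderate correlations can fail to reach significance, so a non-significant p value should not be read as evidence of no relationship.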

In our follow-up survey, the questions were mainly about participants’ current roles and affiliations, their experience in clinical research, their preference for analytic tools, and their ratings of the importance of different factors (e.g., prospective study, longitudinal study) considered routinely in clinical research study design; the ratings of these factors will be published separately. Most of the other results have been included in Table 1 .

In our follow-up survey, one question was, “If you were provided with more detailed information about research design (e.g., focused population) during your hypothesis generation process, do you think the information would help formulate your hypothesis overall?” All 20 participants (including the two in the pilot study) selected Yes. This demonstrates that participants recognize the need for assistance during hypothesis generation. In the follow-up surveys, VIADS users provided overwhelmingly positive feedback on VIADS, and they all agreed (100%) that VIADS offered new perspectives on the datasets compared with the tools they currently use for the same type of datasets [ 36 ].

Interpretation of the results

We aimed to discover the role of secondary data analytic tools, e.g., VIADS, in generating scientific hypotheses in clinical research and to evaluate the utility and usability of VIADS. The usability of VIADS has been published separately [ 36 ]. Regarding the role and utility of VIADS, we measured the number of hypotheses generated, the average time needed to generate each hypothesis, the quality evaluation of the hypotheses, and the user feedback on VIADS. Among inexperienced clinical researchers, participants in the VIADS and control groups generated similar numbers of valid and total hypotheses. The VIADS group a) needed a significantly shorter time to generate each hypothesis on average; b) received significantly lower feasibility ratings than the control group; c) received significantly lower combined ratings of validity, significance, and feasibility (three of four analytic strategies), which was most likely due to the feasibility rating differences; d) provided very positive feedback on VIADS [ 36 ], with 75% agreeing that VIADS facilitates understanding, presentation, and interpretation of the underlying datasets; e) agreed (100%) that VIADS provided new perspectives on the datasets compared with other tools.

However, the current results were inconclusive in answering the research question. The direct measurements of significant differences between the VIADS and control groups were mixed ( Tables 3 and 4 ). The VIADS group took significantly less time than the control group to generate each hypothesis, regardless of the analytic strategy. Considering the sample size and power (0.31 to 0.44) of the study ( Table 4 ), and the absence of significant random intercept effects on feasibility between participants, regardless of analytic strategy, this result is highly significant. The shorter hypothesis generation time in the VIADS group indicates that VIADS may facilitate participants’ thinking or understanding of the underlying datasets. While timing is not as critical in the context of clinical research as it is in clinical care, this result is still very encouraging.

On the other hand, the quality ratings of the hypotheses yielded mixed and somewhat unfavorable results. The VIADS group received non-significantly lower ratings for validity and significance, and significantly lower ratings for feasibility than the control group, regardless of analytic strategy. In addition, the combined validity, significance, and feasibility ratings of the VIADS group were significantly lower than those of the control group for three analytic strategies (the power ranged from 0.37 to 0.79, Table 3 ). There were significant random intercept effects on validity between participants, regardless of analytic strategy. When we considered all hypotheses among all participants, there were also significant random intercept effects on significance between participants. These results indicate that the significantly lower ratings for feasibility in the VIADS group may not be caused by random effects. There are various possible reasons for the lower feasibility ratings of the VIADS group, e.g., VIADS may facilitate the generation of less feasible hypotheses. While unfeasible ideas are not identical to creative ideas, they may be a deviation on the path to creative ideas.

Although the VIADS group received lower quality ratings than the control group, it would be an overstatement to claim that VIADS reduces the quality of generated hypotheses. We posit that the 1-hour training session received by the VIADS group likely played a critical role in the quality rating differences. Among the inexperienced clinical researchers in the VIADS group, six underwent 3-hour sessions, i.e., 1-hour training followed by a 2-hour study session, with a 5-minute break in between. Two participants had the training and the study session on separate days. The cognitive load of the training session was not considered during the study design; therefore, the training sessions were not required to be conducted on different days from the study sessions. In retrospect, we should have mandated that the training and study sessions take place on separate days. In addition, the VIADS group had a much higher percentage of participants with less than 2 years of research experience (75%) than the control group (43%, Table 1 ). Although we adhered strictly to the randomization list when assigning participants to the two groups, the relatively small sample sizes are likely to have amplified the effects of the research experience imbalance between the two groups.

The literature [ 58 ] suggests that learning a complex tool and performing tasks simultaneously imposes extra cognitive load on participants. This is likely the case in this study. VIADS group participants needed to learn how to use the tool and then analyze the datasets with it to come up with hypotheses. The cognitive overload may not have been conscious, which would explain why the participants still perceived VIADS as helpful in understanding the datasets. However, the quality evaluation results did not support the participants’ perceptions of VIADS, although the timing differences did support their feedback.

The role of VIADS in the hypothesis generation process may not be linear. The 2-hour use of VIADS did not produce statistically significantly higher average quality ratings for hypotheses; however, all VIADS group participants (100%) agreed that VIADS provided new perspectives on the datasets. The true role of VIADS in hypothesis generation might be more complicated than we initially thought. Either two hours were inadequate to generate higher average quality ratings, or our evaluation was not sufficiently granular to capture the differences. A more natural use environment, rather than a simulated one, might be necessary to demonstrate detectable differences.