How to Convert Speech to Text in Python


Speech recognition is the ability of computer software to identify words and phrases in spoken language and convert them to human-readable text. In this tutorial, you will learn how to convert speech to text in Python using the SpeechRecognition library.

We do not need to build any machine learning model from scratch: this library provides convenient wrappers for various well-known public speech recognition APIs (such as Google Cloud Speech API, IBM Speech To Text, etc.).

Note that if you do not want to use APIs and would rather perform inference on machine learning models directly, check this tutorial, in which I show how to use a current state-of-the-art machine learning model to perform speech recognition in Python.

Also, if you want other methods to do ASR, check this comprehensive speech recognition tutorial.

Learn also: How to Translate Text in Python.

Getting Started

Let's get started by installing the library with pip: pip install SpeechRecognition.

Then open up a new Python file and import it with import speech_recognition as sr.

The nice thing about this library is it supports several recognition engines:

- CMU Sphinx (offline)

- Google Speech Recognition

- Google Cloud Speech API

- Microsoft Bing Voice Recognition

- Houndify API

- IBM Speech To Text

- Snowboy Hotword Detection (offline)

We are going to use Google Speech Recognition here, as it's straightforward and doesn't require any API key.

Transcribing an Audio File

Make sure you have an audio file in the current directory that contains English speech (if you want to follow along with me, get the audio file here):

This file was grabbed from the LibriSpeech dataset, but you can use any WAV audio file you want; just change the name of the file. Let's initialize our speech recognizer:

The code below loads the audio file and converts the speech into text using Google Speech Recognition:
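As a minimal sketch of these two steps: the function name transcribe_file and the error handling are illustrative assumptions, while Recognizer, AudioFile, record() and recognize_google() are the library's own names.

```python
def transcribe_file(path, language="en-US"):
    """Return the text Google Speech Recognition hears in an audio file."""
    # Imported here so that defining the function does not need the package.
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    # Open the audio file and read all of it into memory.
    with sr.AudioFile(path) as source:
        audio_data = recognizer.record(source)
    try:
        # Uploads the audio to Google and returns the best transcription.
        return recognizer.recognize_google(audio_data, language=language)
    except sr.UnknownValueError:
        # Raised when the speech is unintelligible.
        return ""
    except sr.RequestError as error:
        # Raised when the API cannot be reached.
        raise RuntimeError(f"Speech recognition request failed: {error}") from error
```

Calling transcribe_file with the name of your WAV file should return the recognized text as a string.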

This will take a few seconds to finish, as it uploads the file to Google and fetches the output. Here is my result:

The above code works well for small or medium-sized audio files. In the next section, we are going to write code for large files.

Transcribing Large Audio Files

If you want to perform speech recognition of a long audio file, then the below function handles that quite well:
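A minimal sketch of such a function follows. The names transcribe_on_silence and format_chunk_text, the audio-chunks folder name, and the 500 ms values are assumptions chosen for illustration.

```python
import os


def format_chunk_text(text):
    """Turn one chunk's transcription into a sentence-like fragment."""
    return f"{text.capitalize()}. "


def transcribe_on_silence(path, folder_name="audio-chunks"):
    """Split an audio file on silence and transcribe each chunk."""
    # Third-party imports live here so format_chunk_text works without them.
    import speech_recognition as sr
    from pydub import AudioSegment
    from pydub.silence import split_on_silence

    recognizer = sr.Recognizer()
    sound = AudioSegment.from_file(path)
    chunks = split_on_silence(
        sound,
        min_silence_len=500,            # assumed: split on >= 500 ms of silence
        silence_thresh=sound.dBFS - 14, # average loudness minus 14 dB
        keep_silence=500,               # assumed: keep 500 ms of padding
    )
    # Chunks are written to disk so that AudioFile can read them back.
    os.makedirs(folder_name, exist_ok=True)
    whole_text = ""
    for i, chunk in enumerate(chunks, start=1):
        chunk_filename = os.path.join(folder_name, f"chunk{i}.wav")
        chunk.export(chunk_filename, format="wav")
        with sr.AudioFile(chunk_filename) as source:
            audio_data = recognizer.record(source)
        try:
            text = recognizer.recognize_google(audio_data)
        except sr.UnknownValueError:
            continue  # nothing intelligible in this chunk
        whole_text += format_chunk_text(text)
    return whole_text
```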

Note: You need to install Pydub using pip for the above code to work.

The above function uses the split_on_silence() function from the pydub.silence module to split audio data into chunks on silence. The min_silence_len parameter is the minimum length of silence, in milliseconds, to be used for a split.

silence_thresh is the threshold below which anything quieter is considered silence; I have set it to the average dBFS minus 14. The keep_silence argument is the amount of silence, in milliseconds, to leave at the beginning and the end of each detected chunk.

These parameters won't be perfect for all sound files, so experiment with them to suit your own large audio files.

After that, we iterate over all chunks, convert the speech in each one into text, and concatenate the results. Here is an example run:

Note: You can get the 7601-291468-0006.wav file here.

So, this function automatically creates a folder for us, puts the chunks of the original audio file we specified into it, and then runs speech recognition on all of them.

In case you want to split the audio file into fixed intervals, we can use the below function instead:
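A minimal sketch of a fixed-interval variant follows. The names chunk_bounds and transcribe_fixed_interval and the audio-fixed-chunks folder name are assumptions for illustration; the minutes parameter sets the chunk length.

```python
import os


def chunk_bounds(total_ms, chunk_ms):
    """Return (start, end) millisecond pairs covering total_ms in fixed steps."""
    return [(start, min(start + chunk_ms, total_ms))
            for start in range(0, total_ms, chunk_ms)]


def transcribe_fixed_interval(path, minutes=5, folder_name="audio-fixed-chunks"):
    """Split an audio file every `minutes` minutes and transcribe each piece."""
    # Third-party imports live here so chunk_bounds works without them.
    import speech_recognition as sr
    from pydub import AudioSegment

    recognizer = sr.Recognizer()
    sound = AudioSegment.from_file(path)
    chunk_ms = round(minutes * 60 * 1000)
    os.makedirs(folder_name, exist_ok=True)
    whole_text = ""
    # len(sound) is the duration in milliseconds; slicing uses milliseconds too.
    for i, (start, end) in enumerate(chunk_bounds(len(sound), chunk_ms), start=1):
        chunk_filename = os.path.join(folder_name, f"chunk{i}.wav")
        sound[start:end].export(chunk_filename, format="wav")
        with sr.AudioFile(chunk_filename) as source:
            audio_data = recognizer.record(source)
        try:
            text = recognizer.recognize_google(audio_data)
        except sr.UnknownValueError:
            continue  # nothing intelligible in this chunk
        whole_text += f"{text.capitalize()}. "
    return whole_text
```

A fixed cut can land in the middle of a word, so expect occasional errors at chunk boundaries. Passing minutes=10/60 would give 10-second chunks.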

The above function splits the large audio file into chunks of 5 minutes. You can change the minutes parameter to fit your needs. Since my audio file isn't that large, I'm trying to split it into chunks of 10 seconds:

Reading from the Microphone

This requires PyAudio to be installed on your machine. The installation process depends on your operating system:

- Windows: you can just pip install it.

- Linux: you need to first install the dependencies.

- macOS: you need to first install portaudio, then you can just pip install it.

Now let's use our microphone to convert our speech:
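A minimal sketch, assuming PyAudio is installed and a default microphone is available. The function name transcribe_microphone is an assumption for illustration.

```python
def transcribe_microphone(seconds=5, language="en-US"):
    """Record from the default microphone and return the recognized text."""
    # Imported here so that defining the function does not need the package.
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    with sr.Microphone() as source:
        # Read audio from the microphone and stop after `seconds` seconds.
        audio_data = recognizer.record(source, duration=seconds)
    # Upload the recording to Google and return the transcription.
    return recognizer.recognize_google(audio_data, language=language)
```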

This will listen to your microphone for 5 seconds and then try to convert that speech into text!

It is pretty similar to the previous code, but here we use the Microphone() object to read audio from the default microphone. The duration parameter of the record() function stops the recording after 5 seconds, and the audio data is then uploaded to Google to get the output text.

You can also use the offset parameter of the record() function to start recording after offset seconds.

Also, you can recognize different languages by passing the language parameter to the recognize_google() function. For instance, if you want to recognize Spanish speech, you would use:
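A small helper that pins the language to Spanish might look like this. The helper name recognize_spanish is hypothetical, and es-ES is the language tag for Spanish as spoken in Spain.

```python
def recognize_spanish(recognizer, audio_data):
    """Transcribe audio_data as Spanish with an existing Recognizer."""
    # The language parameter takes a language tag such as "es-ES".
    return recognizer.recognize_google(audio_data, language="es-ES")
```

Here recognizer is a Recognizer instance and audio_data is whatever record() returned.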

Check out the supported languages in this StackOverflow answer.

As you can see, this library makes converting speech to text pretty simple, and it is widely used in the wild. Check the official documentation.

If you want to convert text to speech in Python as well, check this tutorial.

Read Also: How to Recognize Optical Characters in Images in Python.

Happy Coding ♥


SpeechRecognition 3.13.0

pip install SpeechRecognition

Released: Dec 29, 2024

Library for performing speech recognition, with support for several engines and APIs, online and offline.


- License: BSD License (BSD)

- Author: Anthony Zhang (Uberi)

- Tags: speech, recognition, voice, sphinx, google, wit, bing, api, houndify, ibm, snowboy

- Requires: Python >=3.9

- Provides-Extra: dev, audio, pocketsphinx, google-cloud, whisper-local, openai, groq, assemblyai

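Classifiers

- Development Status :: 5 - Production/Stable

- License :: OSI Approved :: BSD License

- Operating System :: MacOS :: MacOS X

- Operating System :: Microsoft :: Windows

- Operating System :: POSIX :: Linux

- Programming Language :: Python :: 3

- Programming Language :: Python :: 3.9

- Programming Language :: Python :: 3.10

- Programming Language :: Python :: 3.11

- Programming Language :: Python :: 3.12

- Topic :: Multimedia :: Sound/Audio :: Speech

- Topic :: Software Development :: Libraries :: Python Modules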

Project description

UPDATE 2022-02-09: Hey everyone! This project started as a tech demo, but these days it needs more time than I have to keep up with all the PRs and issues. Therefore, I’d like to put out an open invite for collaborators - just reach out at me@anthonyz.ca if you’re interested!

Speech recognition engine/API support:

Quickstart: pip install SpeechRecognition. See the “Installing” section for more details.

To quickly try it out, run python -m speech_recognition after installing.

Project links:

Library Reference

The library reference documents every publicly accessible object in the library. This document is also included under reference/library-reference.rst.

See Notes on using PocketSphinx for information about installing languages, compiling PocketSphinx, and building language packs from online resources. This document is also included under reference/pocketsphinx.rst.

You have to install Vosk models to use Vosk. The available models can be downloaded and must be placed in the models folder of your project, like “your-project-folder/models/your-vosk-model”.

See the examples/ directory in the repository root for usage examples:

First, make sure you have all the requirements listed in the “Requirements” section.

The easiest way to install this is using pip install SpeechRecognition.

Otherwise, download the source distribution from PyPI, and extract the archive.

In the folder, run python setup.py install.

Requirements

To use all of the functionality of the library, you should have:

The following requirements are optional, but can improve or extend functionality in some situations:

The following sections go over the details of each requirement.

The first software requirement is Python 3.9+. This is required to use the library.

PyAudio (for microphone users)

PyAudio is required if and only if you want to use microphone input (Microphone). PyAudio version 0.2.11+ is required, as earlier versions have known memory management bugs when recording from microphones in certain situations.

If not installed, everything in the library will still work, except attempting to instantiate a Microphone object will raise an AttributeError.

The installation instructions on the PyAudio website are quite good - for convenience, they are summarized below:

PyAudio wheel packages for common 64-bit Python versions on Windows and Linux are included for convenience, under the third-party/ directory in the repository root. To install, simply run pip install wheel followed by pip install ./third-party/WHEEL_FILENAME (replace pip with pip3 if using Python 3) in the repository root directory.

PocketSphinx-Python (for Sphinx users)

PocketSphinx-Python is required if and only if you want to use the Sphinx recognizer (recognizer_instance.recognize_sphinx).

PocketSphinx-Python wheel packages for 64-bit Python 3.4 and 3.5 on Windows are included for convenience, under the third-party/ directory. To install, simply run pip install wheel followed by pip install ./third-party/WHEEL_FILENAME (replace pip with pip3 if using Python 3) in the SpeechRecognition folder.

On Linux and other POSIX systems (such as OS X), run pip install SpeechRecognition[pocketsphinx]. Follow the instructions under “Building PocketSphinx-Python from source” in Notes on using PocketSphinx for installation instructions.

Note that the versions available in most package repositories are outdated and will not work with the bundled language data. Using the bundled wheel packages or building from source is recommended.

Vosk (for Vosk users)

Vosk API is required if and only if you want to use the Vosk recognizer (recognizer_instance.recognize_vosk).

You can install it with python3 -m pip install vosk.

You also have to install Vosk models:

The available models can be downloaded and must be placed in the models folder of your project, like “your-project-folder/models/your-vosk-model”.

Google Cloud Speech Library for Python (for Google Cloud Speech-to-Text API users)

The library google-cloud-speech is required if and only if you want to use the Google Cloud Speech-to-Text API (recognizer_instance.recognize_google_cloud). You can install it with python3 -m pip install SpeechRecognition[google-cloud]. (ref: official installation instructions)

Prerequisite: Create local authentication credentials for your Google account.

Currently only V1 is supported (V2 is not supported).

FLAC (for some systems)

A FLAC encoder is required to encode the audio data to send to the API. If using Windows (x86 or x86-64), OS X (Intel Macs only, OS X 10.6 or higher), or Linux (x86 or x86-64), this is already bundled with this library - you do not need to install anything.

Otherwise, ensure that you have the flac command line tool, which is often available through the system package manager. For example, this would usually be sudo apt-get install flac on Debian-derivatives, or brew install flac on OS X with Homebrew.

Whisper (for Whisper users)

Whisper is required if and only if you want to use Whisper (recognizer_instance.recognize_whisper).

You can install it with python3 -m pip install SpeechRecognition[whisper-local].

OpenAI Whisper API (for OpenAI Whisper API users)

The library openai is required if and only if you want to use the OpenAI Whisper API (recognizer_instance.recognize_openai).

You can install it with python3 -m pip install SpeechRecognition[openai].

Please set the environment variable OPENAI_API_KEY before calling recognizer_instance.recognize_openai.

Groq Whisper API (for Groq Whisper API users)

The library groq is required if and only if you want to use the Groq Whisper API (recognizer_instance.recognize_groq).

You can install it with python3 -m pip install SpeechRecognition[groq].

Please set the environment variable GROQ_API_KEY before calling recognizer_instance.recognize_groq.

Troubleshooting

The recognizer tries to recognize speech even when I’m not speaking, or after I’m done speaking.

Try increasing the recognizer_instance.energy_threshold property. This is basically how sensitive the recognizer is to when recognition should start. Higher values mean that it will be less sensitive, which is useful if you are in a loud room.

This value depends entirely on your microphone or audio data. There is no one-size-fits-all value, but good values typically range from 50 to 4000.

Also, check on your microphone volume settings. If it is too sensitive, the microphone may be picking up a lot of ambient noise. If it is too insensitive, the microphone may be rejecting speech as just noise.

The recognizer can’t recognize speech right after it starts listening for the first time.

The recognizer_instance.energy_threshold property is probably set to a value that is too high to start off with, and then being adjusted lower automatically by dynamic energy threshold adjustment. Before it is at a good level, the energy threshold is so high that speech is just considered ambient noise.

The solution is to decrease this threshold, or call recognizer_instance.adjust_for_ambient_noise beforehand, which will set the threshold to a good value automatically.
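Both routes can be sketched as follows. The helper names prepare_recognizer and listen_once are hypothetical, and switching off dynamic_energy_threshold for a manual value is a design assumption rather than something the library requires.

```python
def prepare_recognizer(recognizer, source=None, energy_threshold=None,
                       calibration_seconds=1):
    """Fix the energy threshold by hand, or calibrate it from ambient noise."""
    if energy_threshold is not None:
        # Manual route: higher values make the recognizer less sensitive.
        recognizer.energy_threshold = energy_threshold
        # Assumption: disable dynamic adjustment so the manual value sticks.
        recognizer.dynamic_energy_threshold = False
    elif source is not None:
        # Automatic route: sample the open audio source for a moment.
        recognizer.adjust_for_ambient_noise(source, duration=calibration_seconds)
    return recognizer


def listen_once():
    """Calibrate on the default microphone, then transcribe one phrase."""
    # Imported here so prepare_recognizer can be used without the package.
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    with sr.Microphone() as source:
        prepare_recognizer(recognizer, source=source)
        audio_data = recognizer.listen(source)
    return recognizer.recognize_google(audio_data)
```

Calibrating works best while nobody is speaking, since the recognizer treats whatever it hears during that window as background noise.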

The recognizer doesn’t understand my particular language/dialect.

Try setting the recognition language to your language/dialect. To do this, see the documentation for recognizer_instance.recognize_sphinx, recognizer_instance.recognize_google, recognizer_instance.recognize_wit, recognizer_instance.recognize_bing, recognizer_instance.recognize_api, recognizer_instance.recognize_houndify, and recognizer_instance.recognize_ibm.

For example, if your language/dialect is British English, it is better to use "en-GB" as the language rather than "en-US".

The recognizer hangs on recognizer_instance.listen; specifically, when it’s calling Microphone.MicrophoneStream.read.

This usually happens when you’re using a Raspberry Pi board, which doesn’t have audio input capabilities by itself. This causes the default microphone used by PyAudio to simply block when we try to read it. If you happen to be using a Raspberry Pi, you’ll need a USB sound card (or USB microphone).

Once you do this, change all instances of Microphone() to Microphone(device_index=MICROPHONE_INDEX), where MICROPHONE_INDEX is the hardware-specific index of the microphone.

To figure out what the value of MICROPHONE_INDEX should be, run the following code:
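A minimal sketch of such a listing follows. The helper names describe_microphones and print_microphones and the exact message wording are assumptions; Microphone.list_microphone_names() is the library call that returns the device names in index order.

```python
def describe_microphones(names):
    """Pair each microphone name with the device_index that selects it."""
    return [
        f'Microphone with name "{name}" found for Microphone(device_index={index})'
        for index, name in enumerate(names)
    ]


def print_microphones():
    """Print one line per audio input device known to PyAudio."""
    # Imported here so describe_microphones works without the package.
    import speech_recognition as sr

    for line in describe_microphones(sr.Microphone.list_microphone_names()):
        print(line)
```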

This will print out something like the following:

Now, to use the Snowball microphone, you would change Microphone() to Microphone(device_index=3) .

Calling Microphone() gives the error IOError: No Default Input Device Available.

As the error says, the program doesn’t know which microphone to use.

To proceed, either use Microphone(device_index=MICROPHONE_INDEX, ...) instead of Microphone(...), or set a default microphone in your OS. You can obtain possible values of MICROPHONE_INDEX using the code in the troubleshooting entry right above this one.

The program doesn’t run when compiled with PyInstaller.

As of PyInstaller version 3.0, SpeechRecognition is supported out of the box. If you’re getting weird issues when compiling your program using PyInstaller, simply update PyInstaller.

You can easily do this by running pip install --upgrade pyinstaller.

On Ubuntu/Debian, I get annoying output in the terminal saying things like “bt_audio_service_open: […] Connection refused” and various others.

The “bt_audio_service_open” error means that you have a Bluetooth audio device, but as a physical device is not currently connected, we can’t actually use it - if you’re not using a Bluetooth microphone, then this can be safely ignored. If you are, and audio isn’t working, then double check to make sure your microphone is actually connected. There does not seem to be a simple way to disable these messages.

For errors of the form “ALSA lib […] Unknown PCM”, see this StackOverflow answer. Basically, to get rid of an error of the form “Unknown PCM cards.pcm.rear”, simply comment out pcm.rear cards.pcm.rear in /usr/share/alsa/alsa.conf, ~/.asoundrc, and /etc/asound.conf.

For “jack server is not running or cannot be started” or “connect(2) call to /dev/shm/jack-1000/default/jack_0 failed (err=No such file or directory)” or “attempt to connect to server failed”, these are caused by ALSA trying to connect to JACK, and can be safely ignored. I’m not aware of any simple way to turn those messages off at this time, besides entirely disabling printing while starting the microphone.

On OS X, I get a ChildProcessError saying that it couldn’t find the system FLAC converter, even though it’s installed.

Installing FLAC for OS X directly from the source code will not work, since it doesn’t correctly add the executables to the search path.

Installing FLAC using Homebrew ensures that the search path is correctly updated. First, ensure you have Homebrew, then run brew install flac to install the necessary files.

To hack on this library, first make sure you have all the requirements listed in the “Requirements” section.

To install/reinstall the library locally, run python -m pip install -e .[dev] in the project root directory.

Before a release, the version number is bumped in README.rst and speech_recognition/__init__.py. Version tags are then created using git config gpg.program gpg2 && git config user.signingkey DB45F6C431DE7C2DCD99FF7904882258A4063489 && git tag -s VERSION_GOES_HERE -m "Version VERSION_GOES_HERE".

Releases are done by running make-release.sh VERSION_GOES_HERE to build the Python source packages, sign them, and upload them to PyPI.

Prerequisite: Install pipx.

To run all the tests:

To run static analysis:

To ensure RST is well-formed:

Testing is also done automatically by GitHub Actions, upon every push.

FLAC Executables

The included flac-win32 executable is the official FLAC 1.3.2 32-bit Windows binary.

The included flac-linux-x86 and flac-linux-x86_64 executables are built from the FLAC 1.3.2 source code with Manylinux to ensure that it’s compatible with a wide variety of distributions.

The built FLAC executables should be bit-for-bit reproducible. To rebuild them, run the following inside the project directory on a Debian-like system:

The included flac-mac executable is extracted from xACT 2.39, which is a frontend for FLAC 1.3.2 that conveniently includes binaries for all of its encoders. Specifically, it is a copy of xACT 2.39/xACT.app/Contents/Resources/flac in xACT2.39.zip.

Please report bugs and suggestions at the issue tracker!

How to cite this library (APA style):

Zhang, A. (2017). Speech Recognition (Version 3.11) [Software]. Available from https://github.com/Uberi/speech_recognition#readme .

How to cite this library (Chicago style):

Zhang, Anthony. 2017. Speech Recognition (version 3.11).

Also check out the Python Baidu Yuyin API, which is based on an older version of this project, and adds support for Baidu Yuyin. Note that Baidu Yuyin is only available inside China.

Copyright 2014-2017 Anthony Zhang (Uberi). The source code for this library is available online at GitHub.

SpeechRecognition is made available under the 3-clause BSD license. See LICENSE.txt in the project’s root directory for more information.

For convenience, all the official distributions of SpeechRecognition already include a copy of the necessary copyright notices and licenses. In your project, you can simply say that licensing information for SpeechRecognition can be found within the SpeechRecognition README, and make sure SpeechRecognition is visible to users if they wish to see it.

SpeechRecognition distributes source code, binaries, and language files from CMU Sphinx. These files are BSD-licensed and redistributable as long as copyright notices are correctly retained. See speech_recognition/pocketsphinx-data/*/LICENSE*.txt and third-party/LICENSE-Sphinx.txt for license details for individual parts.

SpeechRecognition distributes source code and binaries from PyAudio. These files are MIT-licensed and redistributable as long as copyright notices are correctly retained. See third-party/LICENSE-PyAudio.txt for license details.

SpeechRecognition distributes binaries from FLAC - speech_recognition/flac-win32.exe, speech_recognition/flac-linux-x86, and speech_recognition/flac-mac. These files are GPLv2-licensed and redistributable, as long as the terms of the GPL are satisfied. The FLAC binaries are an aggregate of separate programs, so these GPL restrictions do not apply to the library or your programs that use the library, only to FLAC itself. See LICENSE-FLAC.txt for license details.



A robust, efficient, low-latency speech-to-text library with advanced voice activity detection, wake word activation and instant transcription.

KoljaB/RealtimeSTT

RealtimeSTT

Easy-to-use, low-latency speech-to-text library for realtime applications

- AudioToTextRecorderClient class, which automatically starts a server if none is running and connects to it. The class shares the same interface as AudioToTextRecorder, making it easy to upgrade or switch between the two. (Work in progress, most parameters and callbacks of AudioToTextRecorder are already implemented into AudioToTextRecorderClient, but not all. Also the server can not handle concurrent (parallel) requests yet.)

- reworked CLI interface ("stt-server" to start the server, "stt" to start the client, look at "server" folder for more info)

About the Project

RealtimeSTT listens to the microphone and transcribes voice into text.

Hint: Check out Linguflex , the original project from which RealtimeSTT is spun off. It lets you control your environment by speaking and is one of the most capable and sophisticated open-source assistants currently available.

It's ideal for:

- Voice Assistants

- Applications requiring fast and precise speech-to-text conversion

Latest Version: v0.3.92

See release history .

Hint: Since we use the multiprocessing module now, ensure to include the if __name__ == '__main__': protection in your code to prevent unexpected behavior, especially on platforms like Windows. For a detailed explanation on why this is important, visit the official Python documentation on multiprocessing .

Quick Examples

Print everything being said:

Type everything being said:

Will type everything being said into your selected text box.

- Voice Activity Detection : Automatically detects when you start and stop speaking.

- Realtime Transcription : Transforms speech to text in real-time.

- Wake Word Activation : Can activate upon detecting a designated wake word.

Hint : Check out RealtimeTTS , the output counterpart of this library, for text-to-voice capabilities. Together, they form a powerful realtime audio wrapper around large language models.

This library uses:

- WebRTCVAD for initial voice activity detection.

- SileroVAD for more accurate verification.

- Faster_Whisper for instant (GPU-accelerated) transcription.

- Porcupine or OpenWakeWord for wake word detection.

These components represent the "industry standard" for cutting-edge applications, providing the most modern and effective foundation for building high-end solutions.

Installation

This will install all the necessary dependencies, including a CPU support only version of PyTorch.

Although it is possible to run RealtimeSTT with a CPU-only installation (use a small model like "tiny" or "base" in this case), you will get a much better experience using CUDA (please scroll down).

Linux Installation

Before installing RealtimeSTT please execute:

MacOS Installation

GPU Support with CUDA (recommended)

Updating PyTorch for CUDA Support

To upgrade your PyTorch installation to enable GPU support with CUDA, follow these instructions based on your specific CUDA version. This is useful if you wish to enhance the performance of RealtimeSTT with CUDA capabilities.

For CUDA 11.8:

To update PyTorch and Torchaudio to support CUDA 11.8, use the following commands:

For CUDA 12.X:

To update PyTorch and Torchaudio to support CUDA 12.X, execute the following:

Replace 2.5.1 with the version of PyTorch that matches your system and requirements.

Steps That Might Be Necessary Before

Note : To check if your NVIDIA GPU supports CUDA, visit the official CUDA GPUs list .

If you didn't use CUDA models before, some additional steps might be needed one time before installation. These steps prepare the system for CUDA support and installation of the GPU-optimized installation. This is recommended for those who require better performance and have a compatible NVIDIA GPU. To use RealtimeSTT with GPU support via CUDA please also follow these steps:

Install NVIDIA CUDA Toolkit :

- for 12.X visit NVIDIA CUDA Toolkit Archive and select latest version.

- for 11.8 visit NVIDIA CUDA Toolkit 11.8 .

- Select operating system and version.

- Download and install the software.

Install NVIDIA cuDNN :

- Click on "Download cuDNN v8.7.0 (November 28th, 2022), for CUDA 11.x".

Install ffmpeg :

Note: Installation of ffmpeg might not actually be needed to operate RealtimeSTT (thanks to jgilbert2017 for pointing this out).

You can download an installer for your OS from the ffmpeg Website .

Or use a package manager:

On Ubuntu or Debian :

On Arch Linux :

On MacOS using Homebrew ( https://brew.sh/ ):

On Windows using Winget (official documentation):

On Windows using Chocolatey ( https://chocolatey.org/ ):

On Windows using Scoop ( https://scoop.sh/ ):

Quick Start

Basic usage:

Manual Recording

Start and stop of recording are manually triggered.

Standalone Example:
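A minimal sketch of manually triggered recording follows. The function name record_manually is an assumption; start(), stop(), text() and shutdown() are the recorder's own methods.

```python
def record_manually():
    """Record until Enter is pressed, then return the transcription."""
    # Imported here so that defining the function does not need the package.
    from RealtimeSTT import AudioToTextRecorder

    recorder = AudioToTextRecorder()
    recorder.start()                       # begin recording right away
    input("Press Enter to stop recording...")
    recorder.stop()                        # end the recording
    try:
        return recorder.text()             # transcribe what was recorded
    finally:
        recorder.shutdown()                # release the worker processes

# In a script, call this under the multiprocessing guard:
#     if __name__ == "__main__":
#         print("Transcription:", record_manually())
```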

Automatic Recording

Recording based on voice activity detection.

When running recorder.text in a loop it is recommended to use a callback, allowing the transcription to be run asynchronously:
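A minimal sketch of the callback pattern follows. The function names process_text and transcribe_forever are illustrative.

```python
def process_text(text):
    """Callback that receives each finished transcription."""
    print(text)


def transcribe_forever():
    """Keep listening and hand every transcription to process_text."""
    # Imported here so process_text can be used without the package.
    from RealtimeSTT import AudioToTextRecorder

    recorder = AudioToTextRecorder()
    while True:
        # With a callback, transcription runs asynchronously and the
        # loop can return to listening.
        recorder.text(process_text)

# The library uses multiprocessing, so start it under the usual guard:
#     if __name__ == "__main__":
#         transcribe_forever()
```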

Keyword activation before detecting voice. Write the comma-separated list of your desired activation keywords into the wake_words parameter. You can choose wake words from this list: alexa, americano, blueberry, bumblebee, computer, grapefruits, grasshopper, hey google, hey siri, jarvis, ok google, picovoice, porcupine, terminator.
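As a sketch, the wake word string can be checked against the list above before the recorder is created. The helper names check_wake_words and wait_for_wake_word are hypothetical and not part of RealtimeSTT.

```python
SUPPORTED_WAKE_WORDS = {
    "alexa", "americano", "blueberry", "bumblebee", "computer",
    "grapefruits", "grasshopper", "hey google", "hey siri", "jarvis",
    "ok google", "picovoice", "porcupine", "terminator",
}


def check_wake_words(wake_words):
    """Split a comma-separated string and reject words missing from the list."""
    words = [word.strip().lower() for word in wake_words.split(",") if word.strip()]
    unknown = [word for word in words if word not in SUPPORTED_WAKE_WORDS]
    if unknown:
        raise ValueError(f"Unsupported wake words: {', '.join(unknown)}")
    return words


def wait_for_wake_word(wake_words="jarvis"):
    """Wait for a wake word, then return what is said afterwards."""
    # Imported here so check_wake_words works without the package.
    from RealtimeSTT import AudioToTextRecorder

    check_wake_words(wake_words)
    with AudioToTextRecorder(wake_words=wake_words) as recorder:
        print(f'Say "{wake_words}" to start recording.')
        return recorder.text()
```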

You can set callback functions to be executed on different events (see Configuration):
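A minimal sketch using two of the callbacks listed under Configuration follows. The function names and messages are illustrative.

```python
def my_start_callback():
    """Runs when the recorder starts recording."""
    print("Recording started!")


def my_stop_callback():
    """Runs when the recorder stops recording."""
    print("Recording stopped!")


def record_with_callbacks():
    """Transcribe one utterance while reporting recorder events."""
    # Imported here so the callbacks can be defined without the package.
    from RealtimeSTT import AudioToTextRecorder

    with AudioToTextRecorder(
        on_recording_start=my_start_callback,
        on_recording_stop=my_stop_callback,
    ) as recorder:
        return recorder.text()
```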

Feed chunks

If you don't want to use the local microphone, set the use_microphone parameter to False and provide raw PCM audio chunks in 16-bit mono (sample rate 16000) with the feed_audio method:
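A minimal sketch of feeding audio in pieces follows. The helpers pcm_chunks and feed_pcm and the chunk size of 1024 samples are assumptions for illustration; the input must already be 16-bit mono PCM at 16000 Hz.

```python
def pcm_chunks(pcm_bytes, samples_per_chunk=1024):
    """Yield consecutive slices of raw 16-bit PCM data."""
    bytes_per_chunk = samples_per_chunk * 2  # each 16-bit sample is 2 bytes
    for start in range(0, len(pcm_bytes), bytes_per_chunk):
        yield pcm_bytes[start:start + bytes_per_chunk]


def feed_pcm(recorder, pcm_bytes):
    """Push raw PCM data into a recorder created with use_microphone=False."""
    for chunk in pcm_chunks(pcm_bytes):
        recorder.feed_audio(chunk)

# Typical wiring (needs RealtimeSTT installed):
#     recorder = AudioToTextRecorder(use_microphone=False)
#     feed_pcm(recorder, raw_audio_bytes)
```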

You can shut down the recorder safely by using the context manager protocol:

Or you can call the shutdown method manually (if using "with" is not feasible):
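Both shutdown styles can be sketched like this. The function names are illustrative, and the try/finally wrapper is a design choice so that shutdown() runs even if transcription raises.

```python
def transcribe_with_context_manager():
    """Shut the recorder down automatically when the with block exits."""
    # Imported here so that defining the function does not need the package.
    from RealtimeSTT import AudioToTextRecorder

    with AudioToTextRecorder() as recorder:
        return recorder.text()


def transcribe_with_manual_shutdown():
    """Call shutdown() explicitly when a with block is not feasible."""
    from RealtimeSTT import AudioToTextRecorder

    recorder = AudioToTextRecorder()
    try:
        return recorder.text()
    finally:
        recorder.shutdown()
```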

Testing the Library

The test subdirectory contains a set of scripts to help you evaluate and understand the capabilities of the RealtimeSTT library.

Test scripts depending on RealtimeTTS library may require you to enter your azure service region within the script. When using OpenAI-, Azure- or Elevenlabs-related demo scripts the API Keys should be provided in the environment variables OPENAI_API_KEY, AZURE_SPEECH_KEY and ELEVENLABS_API_KEY (see RealtimeTTS )

simple_test.py

- Description : A "hello world" styled demonstration of the library's simplest usage.

realtimestt_test.py

- Description : Showcasing live-transcription.

wakeword_test.py

- Description : A demonstration of the wakeword activation.

translator.py

- Dependencies : Run pip install openai realtimetts .

- Description : Real-time translations into six different languages.

openai_voice_interface.py

- Description : Wake word activated and voice based user interface to the OpenAI API.

advanced_talk.py

- Dependencies : Run pip install openai keyboard realtimetts .

- Description : Choose TTS engine and voice before starting AI conversation.

minimalistic_talkbot.py

- Description : A basic talkbot in 20 lines of code.

The example_app subdirectory contains a polished user interface application for the OpenAI API based on PyQt5.

Configuration

Initialization Parameters for AudioToTextRecorder

When you initialize the AudioToTextRecorder class, you have various options to customize its behavior.

General Parameters

model (str, default="tiny"): Model size or path for transcription.

- Options: 'tiny', 'tiny.en', 'base', 'base.en', 'small', 'small.en', 'medium', 'medium.en', 'large-v1', 'large-v2'.

- Note: If a size is provided, the model will be downloaded from the Hugging Face Hub.

language (str, default=""): Language code for transcription. If left empty, the model will try to auto-detect the language. Supported language codes are listed in Whisper Tokenizer library .

compute_type (str, default="default"): Specifies the type of computation to be used for transcription. See Whisper Quantization

input_device_index (int, default=0): Audio Input Device Index to use.

gpu_device_index (int, default=0): GPU Device Index to use. The model can also be loaded on multiple GPUs by passing a list of IDs (e.g. [0, 1, 2, 3]).

device (str, default="cuda"): Device for model to use. Can either be "cuda" or "cpu".

on_recording_start : A callable function triggered when recording starts.

on_recording_stop : A callable function triggered when recording ends.

on_transcription_start : A callable function triggered when transcription starts.

ensure_sentence_starting_uppercase (bool, default=True): Ensures that every sentence detected by the algorithm starts with an uppercase letter.

ensure_sentence_ends_with_period (bool, default=True): Ensures that every sentence that doesn't end with punctuation such as "?", "!" ends with a period

use_microphone (bool, default=True): Usage of local microphone for transcription. Set to False if you want to provide chunks with feed_audio method.

spinner (bool, default=True): Provides a spinner animation text with information about the current recorder state.

level (int, default=logging.WARNING): Logging level.

batch_size (int, default=16): Batch size for the main transcription. Set to 0 to deactivate.

init_logging (bool, default=True): Whether to initialize the logging framework. Set to False to manage this yourself.

handle_buffer_overflow (bool, default=True): If set, the system will log a warning when an input overflow occurs during recording and remove the data from the buffer.

beam_size (int, default=5): The beam size to use for beam search decoding.

initial_prompt (str or iterable of int, default=None): Initial prompt to be fed to the transcription models.

suppress_tokens (list of int, default=[-1]): Tokens to be suppressed from the transcription output.

on_recorded_chunk : A callback function that is triggered when a chunk of audio is recorded. Submits the chunk data as parameter.

debug_mode (bool, default=False): If set, the system prints additional debug information to the console.

print_transcription_time (bool, default=False): Logs the processing time of the main model transcription. This can be useful for performance monitoring and debugging.

early_transcription_on_silence (int, default=0): If set, the system will transcribe audio faster when silence is detected. Transcription will start after the specified milliseconds. Keep this value lower than post_speech_silence_duration , ideally around post_speech_silence_duration minus the estimated transcription time with the main model. If silence lasts longer than post_speech_silence_duration , the recording is stopped, and the transcription is submitted. If voice activity resumes within this period, the transcription is discarded. This results in faster final transcriptions at the cost of additional GPU load due to some unnecessary final transcriptions.

allowed_latency_limit (int, default=100): Specifies the maximum number of unprocessed chunks in the queue before discarding chunks. This helps prevent the system from being overwhelmed and losing responsiveness in real-time applications.

no_log_file (bool, default=False): If set, the system will skip writing the debug log file, reducing disk I/O. Useful if logging to a file is not needed and performance is a priority.
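As a sketch of how several of these options fit together, the snippet below collects keyword arguments in a dictionary and derives `early_transcription_on_silence` from the guidance above: `post_speech_silence_duration` (in seconds) minus an estimated transcription time, expressed in milliseconds. The helper name `early_transcription_ms`, the chosen values, and the 0.25 s estimate are assumptions for illustration; the constructor call is shown only as a comment.

```python
def early_transcription_ms(post_speech_silence_s, est_transcription_s):
    """Milliseconds of silence after which to start an early transcription.

    Follows the guidance above: post_speech_silence_duration minus the
    estimated main-model transcription time, never below zero.
    """
    return max(0, round((post_speech_silence_s - est_transcription_s) * 1000))


post_speech_silence_duration = 0.6   # seconds; illustrative value
estimated_transcription_time = 0.25  # seconds; assumed, measure on your hardware

recorder_kwargs = {
    "model": "small.en",
    "language": "en",
    "device": "cuda",
    "beam_size": 5,
    "post_speech_silence_duration": post_speech_silence_duration,
    "early_transcription_on_silence": early_transcription_ms(
        post_speech_silence_duration, estimated_transcription_time
    ),
    "print_transcription_time": True,
}

# The dictionary would then be unpacked into the constructor:
#     recorder = AudioToTextRecorder(**recorder_kwargs)
```

Enabling `print_transcription_time` is one way to obtain the transcription time estimate in the first place.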

Real-time Transcription Parameters

Note : When enabling real-time transcription, a GPU installation is strongly advised. Real-time transcription may create high GPU loads.

enable_realtime_transcription (bool, default=False): Enables or disables real-time transcription of audio. When set to True, the audio will be transcribed continuously as it is being recorded.

use_main_model_for_realtime (bool, default=False): If set to True, the main transcription model will be used for both regular and real-time transcription. If False, a separate model specified by realtime_model_type will be used for real-time transcription. Using a single model can save memory and potentially improve performance, but may not be optimized for real-time processing. Using separate models allows for a smaller, faster model for real-time transcription while keeping a more accurate model for final transcription.

realtime_model_type (str, default="tiny"): Specifies the size or path of the machine learning model to be used for real-time transcription.

- Valid options: 'tiny', 'tiny.en', 'base', 'base.en', 'small', 'small.en', 'medium', 'medium.en', 'large-v1', 'large-v2'.

realtime_processing_pause (float, default=0.2): Specifies the time interval in seconds after a chunk of audio gets transcribed. Lower values will result in more "real-time" (frequent) transcription updates but may increase computational load.

on_realtime_transcription_update : A callback function that is triggered whenever there's an update in the real-time transcription. The function is called with the newly transcribed text as its argument.

on_realtime_transcription_stabilized : A callback function that is triggered whenever there's an update in the real-time transcription and returns a higher quality, stabilized text as its argument.

realtime_batch_size (int, default=16): Batch size for the real-time transcription model. Set to 0 to deactivate.

beam_size_realtime (int, default=3): The beam size to use for real-time transcription beam search decoding.

Voice Activation Parameters

silero_sensitivity (float, default=0.6): Sensitivity for Silero's voice activity detection ranging from 0 (least sensitive) to 1 (most sensitive). Default is 0.6.

silero_use_onnx (bool, default=False): Enables usage of the pre-trained model from Silero in the ONNX (Open Neural Network Exchange) format instead of the PyTorch format. Default is False. Recommended for faster performance.

silero_deactivity_detection (bool, default=False): Enables the Silero model for end-of-speech detection. More robust against background noise. Utilizes additional GPU resources but improves accuracy in noisy environments. When False, uses the default WebRTC VAD, which is more sensitive but may continue recording longer due to background sounds.

webrtc_sensitivity (int, default=3): Sensitivity for the WebRTC Voice Activity Detection engine ranging from 0 (least aggressive / most sensitive) to 3 (most aggressive, least sensitive). Default is 3.

post_speech_silence_duration (float, default=0.2): Duration in seconds of silence that must follow speech before the recording is considered to be completed. This ensures that any brief pauses during speech don't prematurely end the recording.

min_gap_between_recordings (float, default=1.0): Specifies the minimum time interval in seconds that should exist between the end of one recording session and the beginning of another to prevent rapid consecutive recordings.

min_length_of_recording (float, default=1.0): Specifies the minimum duration in seconds that a recording session should last to ensure meaningful audio capture, preventing excessively short or fragmented recordings.

pre_recording_buffer_duration (float, default=0.2): The time span, in seconds, during which audio is buffered prior to formal recording. This helps counterbalance the latency inherent in speech activity detection, ensuring no initial audio is missed.

on_vad_detect_start : A callable function triggered when the system starts to listen for voice activity.

on_vad_detect_stop : A callable function triggered when the system stops listening for voice activity.
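How the timing parameters above interact can be illustrated with a toy model. This is not the library's implementation: it assumes voice activity has already been reduced to one speech/no-speech flag per fixed-length frame, expresses all durations in milliseconds, and ignores min_gap_between_recordings. The function name and its parameters are hypothetical.

```python
def segment_recordings(speech_flags, frame_ms=100,
                       post_speech_silence_ms=200,
                       min_length_ms=1000,
                       pre_buffer_ms=200):
    """Return (start_ms, end_ms) spans from per-frame speech flags."""
    spans = []
    start = None     # index of the first frame of the current recording
    silent_run = 0   # consecutive silent frames inside the recording
    for i, is_speech in enumerate(speech_flags):
        if start is None:
            if is_speech:
                start, silent_run = i, 0
            continue
        silent_run = 0 if is_speech else silent_run + 1
        length_ms = (i + 1 - start) * frame_ms
        long_enough = length_ms >= min_length_ms
        quiet_enough = silent_run * frame_ms >= post_speech_silence_ms
        if long_enough and quiet_enough:
            # The pre-recording buffer moves the start earlier in time.
            begin = max(0, start * frame_ms - pre_buffer_ms)
            spans.append((begin, (i + 1) * frame_ms))
            start = None
    if start is not None:  # input ended in the middle of a recording
        begin = max(0, start * frame_ms - pre_buffer_ms)
        spans.append((begin, len(speech_flags) * frame_ms))
    return spans


# 0.3 s silence, speech with one short pause, then 0.3 s silence.
flags = [0, 0, 0] + [1] * 3 + [0] + [1] * 6 + [0] * 3
spans = segment_recordings(flags)
```

The single-frame pause in the middle does not end the recording because it is shorter than the post-speech silence threshold.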

Wake Word Parameters

wakeword_backend (str, default="pvporcupine"): Specifies the backend library to use for wake word detection. Supported options include 'pvporcupine' for using the Porcupine wake word engine or 'oww' for using the OpenWakeWord engine.

openwakeword_model_paths (str, default=None): Comma-separated paths to model files for the openwakeword library. These paths point to custom models that can be used for wake word detection when the openwakeword library is selected as the wakeword_backend.

openwakeword_inference_framework (str, default="onnx"): Specifies the inference framework to use with the openwakeword library. Can be either 'onnx' for Open Neural Network Exchange format or 'tflite' for TensorFlow Lite.

wake_words (str, default=""): Initiate recording when using the 'pvporcupine' wakeword backend. Multiple wake words can be provided as a comma-separated string. Supported wake words are: alexa, americano, blueberry, bumblebee, computer, grapefruits, grasshopper, hey google, hey siri, jarvis, ok google, picovoice, porcupine, terminator. For the 'openwakeword' backend, wake words are automatically extracted from the provided model files, so specifying them here is not necessary.
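Since wake words are passed as one comma-separated string, a small pre-check can catch typos before the recorder is created. The helper below is a hypothetical convenience, not part of the library; it validates against the Porcupine wake words listed above.

```python
SUPPORTED_PORCUPINE_WAKE_WORDS = frozenset({
    "alexa", "americano", "blueberry", "bumblebee", "computer",
    "grapefruits", "grasshopper", "hey google", "hey siri", "jarvis",
    "ok google", "picovoice", "porcupine", "terminator",
})


def parse_wake_words(wake_words):
    """Split a comma-separated wake_words string and validate each entry."""
    words = [w.strip().lower() for w in wake_words.split(",") if w.strip()]
    unknown = [w for w in words if w not in SUPPORTED_PORCUPINE_WAKE_WORDS]
    if unknown:
        raise ValueError(f"Unsupported wake word(s): {', '.join(unknown)}")
    return words


parsed = parse_wake_words("Jarvis, hey google")
```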

wake_words_sensitivity (float, default=0.6): Sensitivity level for wake word detection (0 for least sensitive, 1 for most sensitive).

wake_word_activation_delay (float, default=0): Duration in seconds after the start of monitoring before the system switches to wake word activation if no voice is initially detected. If set to zero, the system uses wake word activation immediately.

wake_word_timeout (float, default=5): Duration in seconds after a wake word is recognized. If no subsequent voice activity is detected within this window, the system transitions back to an inactive state, awaiting the next wake word or voice activation.

wake_word_buffer_duration (float, default=0.1): Duration in seconds to buffer audio data during wake word detection. This helps in cutting out the wake word from the recording buffer so it does not falsely get detected along with the following spoken text, ensuring cleaner and more accurate transcription start triggers. Increase this if parts of the wake word get detected as text.

on_wakeword_detected : A callable function triggered when a wake word is detected.

on_wakeword_timeout : A callable function triggered when the system returns to an inactive state because no speech was detected after wake word activation.

on_wakeword_detection_start : A callable function triggered when the system starts to listen for wake words.

on_wakeword_detection_end : A callable function triggered when the system stops listening for wake words (e.g. because of a timeout or a detected wake word).

OpenWakeWord

Training models.

Look here for information about how to train your own OpenWakeWord models. You can use a simple Google Colab notebook for a start or use a more detailed notebook that enables more customization (can produce high quality models, but requires more development experience).

Convert model to ONNX format

You might need to use tf2onnx to convert tensorflow tflite models to onnx format:

Configure RealtimeSTT

Suggested starting parameters for OpenWakeWord usage:

Q: I encountered the following error: "Unable to load any of {libcudnn_ops.so.9.1.0, libcudnn_ops.so.9.1, libcudnn_ops.so.9, libcudnn_ops.so} Invalid handle. Cannot load symbol cudnnCreateTensorDescriptor." How do I fix this?

A: This issue arises from a mismatch between the version of ctranslate2 and cuDNN. The ctranslate2 library was updated to version 4.5.0, which uses cuDNN 9.2. There are two ways to resolve this issue:

- Downgrade ctranslate2 to version 4.4.0 : pip install ctranslate2==4.4.0

- Upgrade cuDNN on your system to version 9.2 or above.

Contribution

Contributions are always welcome!

Shoutout to Steven Linn for providing docker support.

Kolja Beigel Email: [email protected] GitHub


Speech-to-Text in Python: A Deep Dive

Speech recognition technology has made remarkable strides in recent years, with accuracy rates now approaching human parity for many languages and domains. At the heart of this progress lie advances in machine learning, especially deep learning, which have revolutionized how we teach computers to understand spoken language. Python, with its extensive ecosystem of speech and language libraries, has emerged as a go-to platform for developing speech-to-text systems. In this article, we'll take a deep dive into speech-to-text in Python, covering the underlying technologies, available tools, and practical tips for building high-performing systems.

The Building Blocks of Speech Recognition

At its core, speech recognition involves translating acoustic signals into textual transcripts. This complex process relies on several key components:

Acoustic Model : The acoustic model is responsible for mapping audio features to phonemes, the basic units of speech. Traditionally, acoustic models were built using Gaussian Mixture Models (GMMs) combined with Hidden Markov Models (HMMs). However, deep learning has largely supplanted GMM-HMMs, with architectures like Deep Neural Networks (DNNs), Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs) now dominating. These models take in input features like Mel-Frequency Cepstral Coefficients (MFCCs) or filterbank energies and output probability distributions over phonemes.

Language Model : The language model captures the statistical properties of word sequences in a given language. It assigns probabilities to word sequences, allowing the speech recognizer to distinguish between acoustically similar but semantically different phrases (e.g. "recognize speech" vs. "wreck a nice beach"). Traditional language models used n-grams, but modern systems often employ RNNs or transformer architectures. The language model is typically trained on large text corpora and integrated with the acoustic model during decoding.

Pronunciation Dictionary : The pronunciation dictionary, or lexicon, maps words to their phonetic pronunciations. It serves as the bridge between the acoustic and language models. Pronunciations can be hand-crafted or learned from data. Challenges include modeling pronunciation variants, proper nouns, and code-switching.

Decoder : The decoder combines the outputs of the acoustic model, language model, and pronunciation dictionary to find the most likely word sequence given the input audio. Decoding techniques include graph search algorithms like Viterbi and beam search, as well as sequence-to-sequence models that directly output word sequences without an explicit pronunciation dictionary.
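To make the language model's role concrete, here is a toy bigram model with add-one smoothing, trained on a four-sentence corpus invented for this illustration. It scores the two acoustically similar phrases from the example above. This is a sketch only: real systems train on far larger corpora and combine the language model score with acoustic scores during decoding.

```python
import math
from collections import Counter

# Tiny made-up training corpus (an assumption for illustration).
corpus = [
    "systems recognize speech",
    "we recognize speech with software",
    "software can recognize speech",
    "a nice beach is sunny",
]

context_counts, bigram_counts, vocab = Counter(), Counter(), set()
for sentence in corpus:
    tokens = ["<s>"] + sentence.split()
    context_counts.update(tokens[:-1])              # words that have a successor
    bigram_counts.update(zip(tokens, tokens[1:]))
    vocab.update(tokens[1:])


def log_prob(sentence):
    """Add-one smoothed bigram log-probability of a word sequence."""
    tokens = ["<s>"] + sentence.split()
    return sum(
        math.log((bigram_counts[(prev, word)] + 1)
                 / (context_counts[prev] + len(vocab)))
        for prev, word in zip(tokens, tokens[1:])
    )


candidates = ["recognize speech", "wreck a nice beach"]
best = max(candidates, key=log_prob)
```

Note that raw sequence probabilities favor shorter hypotheses, which is one reason practical decoders add length normalization or word insertion penalties.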

Building a speech recognition system requires training each of these components on large amounts of transcribed speech data and carefully tuning their interactions. Challenges include dealing with speaker and acoustic variability, background noise, and spontaneous speech phenomena like disfluencies and partial words.

The Rise of Deep Learning for Speech Recognition

In the past decade, deep learning has revolutionized the field of speech recognition. Some key milestones include:

2009-2012 : Researchers at Microsoft, Google, and IBM demonstrate the power of DNNs for acoustic modeling, significantly outperforming GMM-HMMs [1] [2] [3] .

2015 : Baidu's Deep Speech system shows the potential of end-to-end deep learning for speech recognition [4] .

2016 : Google's Listen, Attend and Spell (LAS) model introduces attention mechanisms for end-to-end speech recognition [5] .

2018 : Facebook's wav2letter++ framework demonstrates the power of convolutional neural networks for fast, efficient speech recognition [6] .

2019 : Transformers, the dominant architecture for natural language processing, are successfully applied to speech recognition [7] .

2021 : Researchers explore self-supervised pre-training for speech recognition, learning rich audio representations from unlabeled data [8] .

Today, state-of-the-art speech recognition systems use deep learning for both acoustic and language modeling, with architectures like CNNs, RNNs (especially LSTMs and GRUs), and transformers. End-to-end models that directly output text sequences are becoming increasingly popular. Key ingredients for success include large training datasets, powerful computing resources (GPUs/TPUs), and careful model design and optimization.

Python Tools for Speech Recognition

Python offers a wealth of libraries and tools for building speech recognition systems. Here are some of the most popular:

- SpeechRecognition : This library provides a simple interface for performing speech recognition with various back-ends, including Google Web Speech API, CMU Sphinx, and Wit.ai. It's a great choice for quickly adding speech recognition capabilities to Python applications.

- DeepSpeech : Developed by Mozilla, DeepSpeech is an open-source engine for speech recognition based on Baidu's Deep Speech architecture. It uses a recurrent neural network acoustic model trained with CTC loss to directly output text sequences. DeepSpeech can be trained on custom data and deployed offline, making it a good choice for applications with privacy concerns or low-resource settings.

- Kaldi : Kaldi is a popular toolkit for building speech recognition systems, widely used in the research community. It provides a comprehensive set of tools for acoustic modeling, language modeling, and decoding, with support for both traditional GMM-HMM and newer neural network architectures. Kaldi has a steeper learning curve compared to other libraries, but offers unparalleled flexibility and performance.

- ESPnet : ESPnet is an end-to-end speech processing toolkit that supports speech recognition, speech synthesis, speech enhancement, and speaker diarization. It provides implementations of state-of-the-art end-to-end architectures like transformer and conformer, with an easy-to-use recipe-based interface for training and testing models.

- Hugging Face Transformers : The popular Transformers library, originally developed for natural language processing, now includes support for speech recognition models. Transformers provides pre-trained models like Wav2Vec2 and HuBERT that can be fine-tuned for specific speech recognition tasks with just a few lines of code.
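Several of the models above, including DeepSpeech and Wav2Vec2, are trained with CTC, whose per-frame outputs must be collapsed into text. The sketch below shows greedy (best-path) CTC decoding on hand-written frame probabilities: pick the most likely symbol per frame, merge consecutive repeats, then drop blanks. It is a simplified illustration, not any toolkit's decoder; production systems typically use beam search, often with a language model. The blank symbol `_` and the toy alphabet are assumptions.

```python
from itertools import groupby

BLANK = "_"  # CTC blank symbol (the choice of character is arbitrary)


def ctc_greedy_decode(frame_probs, alphabet):
    """Best-path CTC decoding: argmax per frame, merge repeats, drop blanks."""
    best_path = [
        alphabet[max(range(len(frame)), key=frame.__getitem__)]
        for frame in frame_probs
    ]
    collapsed = [symbol for symbol, _ in groupby(best_path)]
    return "".join(symbol for symbol in collapsed if symbol != BLANK)


alphabet = [BLANK, "h", "i"]
frame_probs = [
    [0.10, 0.80, 0.10],  # h
    [0.20, 0.70, 0.10],  # h (repeat, merged)
    [0.90, 0.05, 0.05],  # blank
    [0.10, 0.10, 0.80],  # i
    [0.10, 0.20, 0.70],  # i (repeat, merged)
    [0.80, 0.10, 0.10],  # blank
]
text = ctc_greedy_decode(frame_probs, alphabet)
```

The blank is what allows genuine double letters: the path `l _ l` decodes to `ll`, whereas `l l` would collapse to a single `l`.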

In addition to these libraries, Python ecosystems for scientific computing and machine learning like NumPy, SciPy, pandas, scikit-learn, and PyTorch provide a solid foundation for developing and training speech recognition models from scratch.

Performance Metrics and Evaluation

Evaluating the performance of speech recognition systems is critical for tracking progress and comparing different models. The most common metrics are:

- Word Error Rate (WER) : WER measures the edit distance between the predicted and reference transcripts, normalized by the total number of words in the reference. It's calculated as:

$WER = \frac{S + D + I}{N} \times 100\%$

where $S$, $D$, and $I$ are the number of substitutions, deletions, and insertions, respectively, and $N$ is the total number of words in the reference.

- Character Error Rate (CER) : CER is similar to WER, but operates at the character level instead of the word level. It's useful for languages without clear word boundaries, like Mandarin Chinese.
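Both metrics reduce to a Levenshtein edit distance, over words for WER and over characters for CER. The following dependency-free sketch implements the formula above; the function names are illustrative, and it returns a fraction rather than a percentage. It assumes a non-empty reference and applies no text normalization (casing, punctuation), which real evaluations usually do first.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (lists or strings)."""
    previous = list(range(len(hyp) + 1))
    for i, ref_item in enumerate(ref, start=1):
        current = [i]
        for j, hyp_item in enumerate(hyp, start=1):
            cost = 0 if ref_item == hyp_item else 1
            current.append(min(
                previous[j] + 1,         # deletion (reference item missing)
                current[j - 1] + 1,      # insertion (extra hypothesis item)
                previous[j - 1] + cost,  # substitution or match
            ))
        previous = current
    return previous[-1]


def wer(reference, hypothesis):
    """Word error rate as a fraction: (S + D + I) / N."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character error rate as a fraction of reference characters."""
    return edit_distance(reference, hypothesis) / len(reference)


# One substitution (sat -> sit) and one deletion (the) over six words.
score = wer("the cat sat on the mat", "the cat sit on mat")
```

Multiply the result by 100 to express it as a percentage, matching the formula.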

To evaluate a speech recognition system, we typically use a held-out test set that wasn't seen during training. We compute the WER/CER on this test set and compare it to a baseline or previous models. For more robust evaluation, we can use techniques like cross-validation, where we divide the data into multiple folds and report the average performance across folds.

Here's an example of how to compute WER in Python using the jiwer library:

On standard benchmarks like Switchboard and LibriSpeech, state-of-the-art speech recognition systems now achieve WERs of less than 5%, approaching human parity [9] . However, performance can degrade significantly in noisy or mismatched conditions, so it's important to evaluate models on diverse datasets that reflect real-world use cases.

Best Practices for Building Speech Recognition Systems

Building high-performing speech recognition systems requires careful attention to data preprocessing, model architecture, and training procedures. Here are some best practices to keep in mind:

Data Preprocessing : Clean and normalize your audio data to remove noise, silence, and other artifacts that can degrade performance. Apply techniques like volume normalization, spectral subtraction, and voice activity detection. For neural network models, make sure to normalize input features (e.g., zero mean and unit variance) and use data augmentation techniques like time stretching, pitch shifting, and adding background noise to improve robustness.
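The zero-mean, unit-variance normalization mentioned above can be sketched with the standard library. Each row is one frame of features (for example MFCCs) and each column is normalized independently. The toy values and the function name are assumptions; real pipelines would normally do this with NumPy over whole utterances or datasets.

```python
from statistics import fmean, pstdev


def normalize_features(frames):
    """Per-dimension zero-mean, unit-variance normalization."""
    columns = list(zip(*frames))
    means = [fmean(col) for col in columns]
    # A constant dimension has zero deviation; divide by 1.0 instead.
    stds = [pstdev(col) or 1.0 for col in columns]
    return [
        [(value - mean) / std for value, mean, std in zip(frame, means, stds)]
        for frame in frames
    ]


features = [[1.0, 10.0], [2.0, 10.0], [3.0, 10.0]]
normalized = normalize_features(features)
```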

Model Architecture : Choose an appropriate model architecture based on your performance and latency requirements. CNNs are good for capturing local temporal and spectral patterns, while RNNs are better at modeling long-range dependencies. Attention mechanisms can help align acoustic frames with output tokens. Consider using pre-trained models or transfer learning to leverage knowledge from related tasks.

Training Procedures : Train your models using stochastic gradient descent with techniques like learning rate scheduling, gradient clipping, and early stopping. Monitor training and validation loss to detect overfitting. Use a large batch size to leverage parallelism and accelerate training. Consider using mixed-precision training or quantization to reduce memory footprint and speed up inference.

Domain Adaptation : If you're applying a pre-trained model to a new domain (e.g., from broadcast news to conversational speech), fine-tune the model on in-domain data to adapt it to the new distribution. You can also use techniques like feature-space adaptation or adversarial training to learn domain-invariant representations.

Language Model Integration : Integrate an external language model trained on domain-specific text data to improve recognition accuracy. Use techniques like shallow fusion, deep fusion, or cold fusion to combine the acoustic and language model scores during decoding.

Confidence Estimation : In addition to the recognized text, output confidence scores or word posteriors that indicate the model's certainty in its predictions. These scores can be used to detect and filter out low-confidence predictions, trigger prompts for user feedback, or guide downstream processing.
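The shallow fusion idea from the Language Model Integration item combines scores as acoustic log-probability plus a weight times language model log-probability. The sketch below applies that formula to a finished n-best list, which is a simplification: true shallow fusion adds the language model score at every step inside beam search. The hypothesis scores and the weight are made-up numbers for illustration.

```python
def shallow_fusion_rerank(hypotheses, lm_weight=0.5):
    """Sort (text, am_log_prob, lm_log_prob) tuples by fused score, best first.

    Fused score = am_log_prob + lm_weight * lm_log_prob.
    """
    return sorted(
        hypotheses,
        key=lambda hyp: hyp[1] + lm_weight * hyp[2],
        reverse=True,
    )


# Made-up n-best list: the acoustic model slightly prefers the wrong phrase.
n_best = [
    ("wreck a nice beach", -4.0, -9.0),
    ("recognize speech", -4.2, -3.0),
]
best_text = shallow_fusion_rerank(n_best)[0][0]
acoustic_only = shallow_fusion_rerank(n_best, lm_weight=0.0)[0][0]
```

The weight is a hyperparameter usually tuned on a development set; at zero the ranking falls back to the acoustic scores alone.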

By following these best practices and leveraging the powerful tools and libraries available in Python, you can build speech recognition systems that are accurate, efficient, and robust to real-world variations.

Speech recognition is a rapidly evolving field with immense potential to transform how we interact with technology. As we've seen, Python provides a rich ecosystem for developing speech-to-text systems, from simple libraries for quick prototyping to advanced toolkits for state-of-the-art research. By understanding the key components of speech recognition pipelines, leveraging the power of deep learning, and following best practices for data preprocessing and model training, you can build accurate and efficient speech-to-text systems that unlock the full potential of spoken language interfaces. As research continues to push the boundaries of what's possible, we can expect to see even more exciting developments in speech recognition in the years to come.


Speech-to-Text Conversion Using Python

In this tutorial from Subhasish Sarkar, learn how to build a basic speech-to-text engine using a simple Python script.


In today’s world, voice technology has become very prevalent. The technology has grown, evolved and matured at a tremendous pace. From voice shopping on Amazon to routine (and increasingly complex) tasks performed by personal voice assistant devices and speakers such as Amazon’s Alexa, voice technology has found many practical uses in different spheres of life.

Speech-to-Text Conversion

One of the most important and critical functionalities involved with any voice technology implementation is a speech-to-text (STT) engine that performs voice recognition and speech-to-text conversion. We can build a very basic STT engine using a simple Python script. Let’s go through the sequence of steps required.

NOTE : I worked on this proof-of-concept (PoC) project on my local Windows machine and therefore, I assume that all instructions pertaining to this PoC are tried out by the readers on a system running Microsoft Windows OS.

Step 1: Installation of Specific Python Libraries

We will start by installing the Python libraries, namely: speechrecognition, wheel, pipwin and pyaudio. Open your Windows command prompt or any other terminal that you are comfortable using and execute the following commands in sequence, with the next command executed only after the previous one has completed its successful execution.

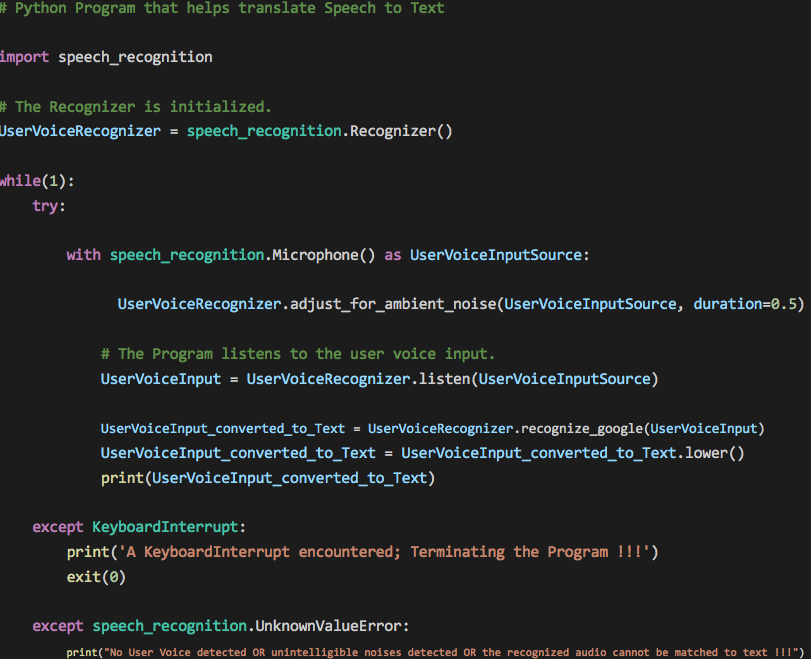

Step 2: Code the Python Script That Implements a Very Basic STT Engine

Let’s name the Python Script file STT.py . Save the file anywhere on your local Windows machine. The Python script code looks like the one referenced below in Figure 1.

Figure 1 Code:

Figure 1 Visual:

The while loop makes the script run infinitely, waiting to listen to the user voice. A KeyboardInterrupt (pressing CTRL+C on the keyboard) terminates the program gracefully. Your system’s default microphone is used as the source of the user voice input. The code allows for ambient noise adjustment.

Depending on the surrounding noise level, the script can wait for a minuscule amount of time, which allows the Recognizer to adjust the energy threshold of the recording of the user voice. To handle ambient noise, we use the adjust_for_ambient_noise() method of the Recognizer class. The adjust_for_ambient_noise() method analyzes the audio source for the time specified as the value of the duration keyword argument (the default value of the argument being one second). So, after the Python script has started executing, you should wait for approximately the time specified as the value of the duration keyword argument for the adjust_for_ambient_noise() method to do its thing, and then try speaking into the microphone.

The SpeechRecognition documentation recommends using a duration no less than 0.5 seconds. In some cases, you may find that durations longer than the default of one second generate better results. The minimum value you need for the duration keyword argument depends on the microphone’s ambient environment. The default duration of one second should be adequate for most applications, though.

The translation of speech to text is accomplished with the aid of Google Speech Recognition ( Google Web Speech API ), and for it to work, you need an active internet connection.
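Based on the description above, the script's overall shape can be sketched as follows. This is a hedged reconstruction, not the author's exact code: the function name `run_stt_loop` and the constant name are assumptions, and the optional-import guard is added only so the file loads on machines without the library. The calls themselves (`Recognizer`, `Microphone`, `adjust_for_ambient_noise`, `listen`, `recognize_google`) and the two exception types belong to the SpeechRecognition package.

```python
try:
    # Third-party: pip install SpeechRecognition pyaudio
    import speech_recognition as sr
except ImportError:  # keep the module importable without the library
    sr = None

NOT_RECOGNIZED_MESSAGE = (
    "No User Voice detected OR unintelligible noises detected OR "
    "the recognized audio cannot be matched to text !!!"
)


def run_stt_loop(duration=1.0):
    """Listen on the default microphone forever and print recognized text."""
    if sr is None:
        raise RuntimeError("The SpeechRecognition package is not installed.")
    recognizer = sr.Recognizer()
    while True:
        try:
            with sr.Microphone() as source:
                # Calibrate the energy threshold against background noise.
                recognizer.adjust_for_ambient_noise(source, duration=duration)
                audio = recognizer.listen(source)
            # Sends the audio to the Google Web Speech API (needs internet).
            print(recognizer.recognize_google(audio))
        except KeyboardInterrupt:
            print("Exiting.")
            break
        except sr.UnknownValueError:
            print(NOT_RECOGNIZED_MESSAGE)
        except sr.RequestError as error:
            print(f"Could not reach the recognition service: {error}")
```

Calling `run_stt_loop()` at the bottom of STT.py starts the loop; pressing CTRL+C ends it through the first except block.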

Step 3: Test the Python Script

The Python script to translate speech to text is ready and it’s now time to see it in action. Open your Windows command prompt or any other terminal that you are comfortable using and CD to the path where you have saved the Python script file. Type in python “STT.py” and press enter. The script starts executing. Speak something and you will see your voice converted to text and printed on the console window. Figure 2 below captures a few of my utterances.

Figure 2 . A few of the utterances converted to text; the text “hai” corresponds to the actual utterance of “hi,” whereas “hay” corresponds to “hey.”

Figure 3 below shows another instance of script execution wherein user voice was not detected for a certain time interval or that unintelligible noise/audio was detected/recognized which couldn’t be matched/converted to text, resulting in outputting the message “No User Voice detected OR unintelligible noises detected OR the recognized audio cannot be matched to text !!!”

Figure 3 . The “No User Voice detected OR unintelligible noises detected OR the recognized audio cannot be matched to text !!!” output message indicates that our STT engine didn’t recognize any user voice for a certain interval of time or that unintelligible noise/audio was detected/recognized which couldn’t be matched/converted to text.

Note : The response from the Google Speech Recognition engine can be quite slow at times. One thing to note here is, so long as the script executes, your system’s default microphone is constantly in use and the message “Python is using your microphone” depicted in Figure 4 below confirms the fact.

Finally, press CTRL+C on your keyboard to terminate the execution of the Python script. Hitting CTRL+C on the keyboard generates a KeyboardInterrupt exception that has been handled in the first except block in the script which results in a graceful exit of the script. Figure 5 below shows the script’s graceful exit.

Figure 5 . Pressing CTRL+C on your keyboard results in a graceful exit of the executing Python script.

Note : I noticed that the script fails to work when the VPN is turned on. The VPN had to be turned off for the script to function as expected. Figure 6 below demonstrates the erroring out of the script with the VPN turned on.

Figure 6 . The Python script fails to work when the VPN is turned on.

When the VPN is turned on, it seems that the Google Speech Recognition API turns down the request. Anybody able to fix the issue is most welcome to get in touch with me here and share the resolution.


Speech Recognition Module Python

Speech recognition, a field at the intersection of linguistics, computer science, and electrical engineering, aims at designing systems capable of recognizing and translating spoken language into text. Python, known for its simplicity and robust libraries, offers several modules to tackle speech recognition tasks effectively. In this article, we'll explore the essence of speech recognition in Python, including an overview of its key libraries, how they can be implemented, and their practical applications.

Key Python Libraries for Speech Recognition

- SpeechRecognition : One of the most popular Python libraries for recognizing speech. It provides support for several engines and APIs, such as Google Web Speech API, Microsoft Bing Voice Recognition, and IBM Speech to Text. It's known for its ease of use and flexibility, making it a great starting point for beginners and experienced developers alike.

- PyAudio : Essential for audio input and output in Python, PyAudio provides Python bindings for PortAudio, the cross-platform audio I/O library. It's often used alongside SpeechRecognition to capture microphone input for real-time speech recognition.

- DeepSpeech : Developed by Mozilla, DeepSpeech is an open-source, deep learning-based speech recognition engine whose architecture is based on Baidu's Deep Speech research. It's suitable for developers looking to implement more sophisticated speech recognition features with the power of deep learning.

Implementing Speech Recognition with Python

A basic implementation using the SpeechRecognition library involves several steps:

- Audio Capture : Capturing audio from the microphone using PyAudio.

- Audio Processing : Converting the audio signal into data that the SpeechRecognition library can work with.

- Recognition : Calling the recognize_google() method (or another available recognition method) on a Recognizer object from the SpeechRecognition library to convert the audio data into text.

Here's a simple example:
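A minimal sketch of the three steps above, assuming the SpeechRecognition and PyAudio packages are installed and a working microphone is available. The helper names transcribe_once and main are illustrative and not part of the library.

```python
def transcribe_once(recognizer, source):
    """Capture one phrase from an open audio source and return its text."""
    # Calibrate the energy threshold against half a second of background noise.
    recognizer.adjust_for_ambient_noise(source, duration=0.5)
    # Block until a phrase is heard, then return it as audio data.
    audio = recognizer.listen(source)
    # Send the audio to the Google Web Speech API (needs internet access).
    return recognizer.recognize_google(audio)


def main():
    # Imported here so the helper above works with any compatible object.
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    with sr.Microphone() as source:  # Microphone requires PyAudio
        print("Say something...")
        try:
            print("You said:", transcribe_once(recognizer, source))
        except sr.UnknownValueError:
            print("Could not understand the audio.")
        except sr.RequestError as err:
            print("The recognition service could not be reached:", err)
```

Calling main() runs the sketch against the default microphone. The two except branches cover the common failure cases: speech that could not be understood, and a failed request to the online service.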

Practical Applications

Speech recognition has a wide range of applications:

- Voice-activated Assistants: Creating personal assistants like Siri or Alexa.

- Accessibility Tools: Helping individuals with disabilities interact with technology.

- Home Automation: Enabling voice control over smart home devices.

- Transcription Services: Automatically transcribing meetings, lectures, and interviews.

Challenges and Considerations

While implementing speech recognition, developers might face challenges such as background noise interference, accents, and dialects. It's crucial to consider these factors and test the application under various conditions. Furthermore, privacy and ethical considerations must be addressed, especially when handling sensitive audio data.

Speech recognition in Python offers a powerful way to build applications that can interact with users in natural language. With the help of libraries like SpeechRecognition, PyAudio, and DeepSpeech, developers can create a range of applications from simple voice commands to complex conversational interfaces. Despite the challenges, the potential for innovative applications is vast, making speech recognition an exciting area of development in Python.

FAQ on Speech Recognition Module in Python

What is the Speech Recognition module in Python?

The Speech Recognition module, often referred to as SpeechRecognition, is a library that allows Python developers to convert spoken language into text by utilizing various speech recognition engines and APIs. It supports multiple services like Google Web Speech API, Microsoft Bing Voice Recognition, IBM Speech to Text, and others.

How can I install the Speech Recognition module?

You can install the Speech Recognition module by running the following command in your terminal or command prompt:

pip install SpeechRecognition

For capturing audio from the microphone, you might also need to install PyAudio. On most systems, this can be done via pip:

pip install PyAudio

Do I need an internet connection to use the Speech Recognition module?

Yes, for most of the supported APIs like Google Web Speech, Microsoft Bing Voice Recognition, and IBM Speech to Text, an active internet connection is required. However, if you use the CMU Sphinx engine, you do not need an internet connection as it operates offline.
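As a rough illustration of the online/offline distinction, the sketch below picks an engine with a flag. The function name transcribe and its offline parameter are hypothetical. recognize_sphinx() additionally assumes the separate pocketsphinx package is installed.

```python
def transcribe(recognizer, audio, offline=False):
    """Return the text for captured audio using an online or offline engine."""
    if offline:
        # CMU Sphinx runs locally, so no internet connection is needed.
        return recognizer.recognize_sphinx(audio)
    # The Google Web Speech API needs an active internet connection.
    return recognizer.recognize_google(audio)
```

Here recognizer would be a speech_recognition.Recognizer instance and audio the object returned by its listen() or record() method.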


Hackers Realm

Convert Speech to Text using Python | Speech Recognition | Machine Learning Project Tutorial

Updated: May 31, 2023

Unlock the power of speech-to-text conversion with Python! This comprehensive tutorial explores speech recognition techniques and machine learning. Learn to transcribe spoken words into written text using cutting-edge algorithms and models. Enhance your skills in natural language processing and optimize your applications with this hands-on project tutorial. #SpeechToText #Python #SpeechRecognition #MachineLearning #NLP

In this project tutorial, we will install the Google Speech Recognition module, convert real-time audio to text, and also convert an audio file to text.


Project Information

The objective of the project is to convert speech to text in real time and to convert an audio file to text. It uses the Google Speech API to convert the audio to text.

speech_recognition

Google Speech API

We install the module to proceed

Requirement already satisfied: speechrecognition in c:\programdata\anaconda3\lib\site-packages (3.8.1)
Collecting PyAudio
  Using cached PyAudio-0.2.11.tar.gz (37 kB)
Building wheels for collected packages: PyAudio
  Building wheel for PyAudio (setup.py): started
  Building wheel for PyAudio (setup.py): finished with status 'error'
  Running setup.py clean for PyAudio
Failed to build PyAudio
Installing collected packages: PyAudio
    Running setup.py install for PyAudio: started
    Running setup.py install for PyAudio: finished with status 'error'

Now we import the module

We initialize the module

Convert Speech to Text in Real time

We will convert real time audio from a microphone into text
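A minimal sketch of such a loop, assuming SpeechRecognition and PyAudio are installed. The names listen_until_quit, skip_errors, and main are illustrative and not taken from the original notebook.

```python
def listen_until_quit(recognizer, source, skip_errors=()):
    """Transcribe phrases from `source` until the speaker says 'quit'."""
    heard = []
    while True:
        print("Speak now")
        # Recalibrate the energy threshold on 0.3 s of ambient noise.
        recognizer.adjust_for_ambient_noise(source, duration=0.3)
        audio = recognizer.listen(source)
        try:
            text = recognizer.recognize_google(audio)
        except skip_errors:
            # Unintelligible speech: prompt the speaker again.
            continue
        print("Speaker:", text)
        heard.append(text)
        if text == "quit":
            return heard


def main():
    import speech_recognition as sr

    r = sr.Recognizer()
    with sr.Microphone() as source:  # Microphone requires PyAudio
        listen_until_quit(r, source, skip_errors=(sr.UnknownValueError,))
```

Calling main() starts the loop on the default microphone. Passing sr.UnknownValueError in skip_errors keeps the loop alive when a phrase cannot be understood.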

Speak now
Speaker: welcome to the channel
Speak now
Speaker: testing speech recognition
Speak now
Speaker: quit

Microphone() - Receive audio input from microphone

adjust_for_ambient_noise(source, duration=0.3) - Calibrate the recognizer's energy threshold to the background noise, using 0.3 seconds of the real-time input

listen(source) - Capture the audio from the source

recognize_google(audio) - Google Speech recognition function to convert audio into text

text == 'quit' - Condition to quit the while loop

Convert Audio to Text

Now we will process and convert an audio file into text
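A minimal sketch of the file conversion, assuming SpeechRecognition is installed. The function names and the file name speech.wav are hypothetical placeholders.

```python
def transcribe_file(recognizer, audio_file):
    """Read an entire audio file and return the recognized text."""
    with audio_file as source:
        print("listening to audio")
        # record() with no duration reads the file to the end.
        audio = recognizer.record(source)
    text = recognizer.recognize_google(audio)
    print("Audio:", text)
    return text


def main(path="speech.wav"):  # hypothetical file name
    import speech_recognition as sr

    # AudioFile accepts WAV, AIFF, and FLAC files.
    transcribe_file(sr.Recognizer(), sr.AudioFile(path))
```

Calling main() with the path to your own recording prints the transcript.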

listening to audio
Audio: welcome to speech recognition

Displayed text is the same as the speech in the audio file

For larger audio files, you need to split them into smaller segments for better processing.
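One possible way to do the splitting is sketched below. It relies on the assumption that successive record() calls on the same open AudioFile continue from where the previous call stopped and return empty audio data at the end of the file. The names transcribe_in_chunks, chunk_seconds, and skip_errors are illustrative.

```python
def transcribe_in_chunks(recognizer, audio_file, chunk_seconds=30, skip_errors=()):
    """Transcribe a long recording one fixed-length segment at a time."""
    pieces = []
    with audio_file as source:
        while True:
            # Read the next segment of at most chunk_seconds seconds.
            audio = recognizer.record(source, duration=chunk_seconds)
            if not audio.frame_data:  # nothing left to read
                break
            try:
                pieces.append(recognizer.recognize_google(audio))
            except skip_errors:
                # For example, a segment with no intelligible speech.
                continue
    return " ".join(pieces)
```

With the real library this could be called as transcribe_in_chunks(sr.Recognizer(), sr.AudioFile(path), skip_errors=(sr.UnknownValueError,)). Fixed-length cuts can split a word across two segments, so splitting on silence may give cleaner results.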

Final Thoughts

This is a very useful tool for converting real-time recordings into text, which can help with chats, interviews, narration, captions, etc.